Containers Technology

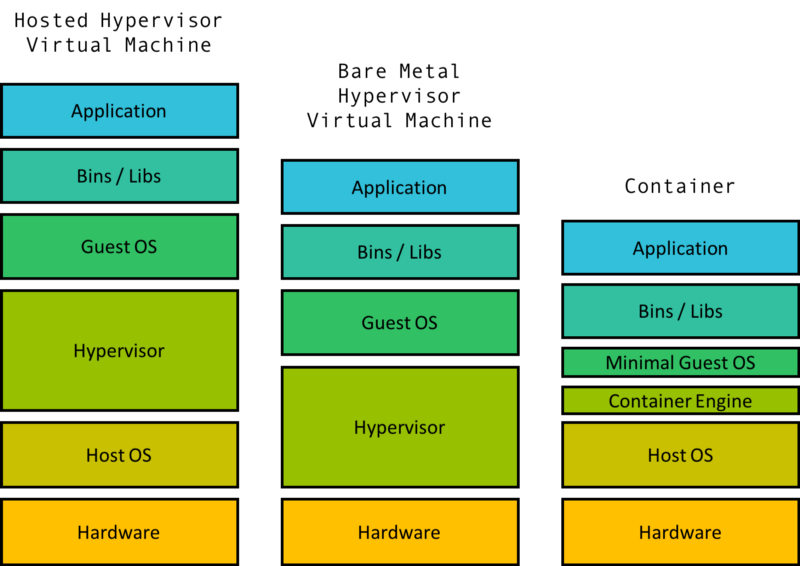

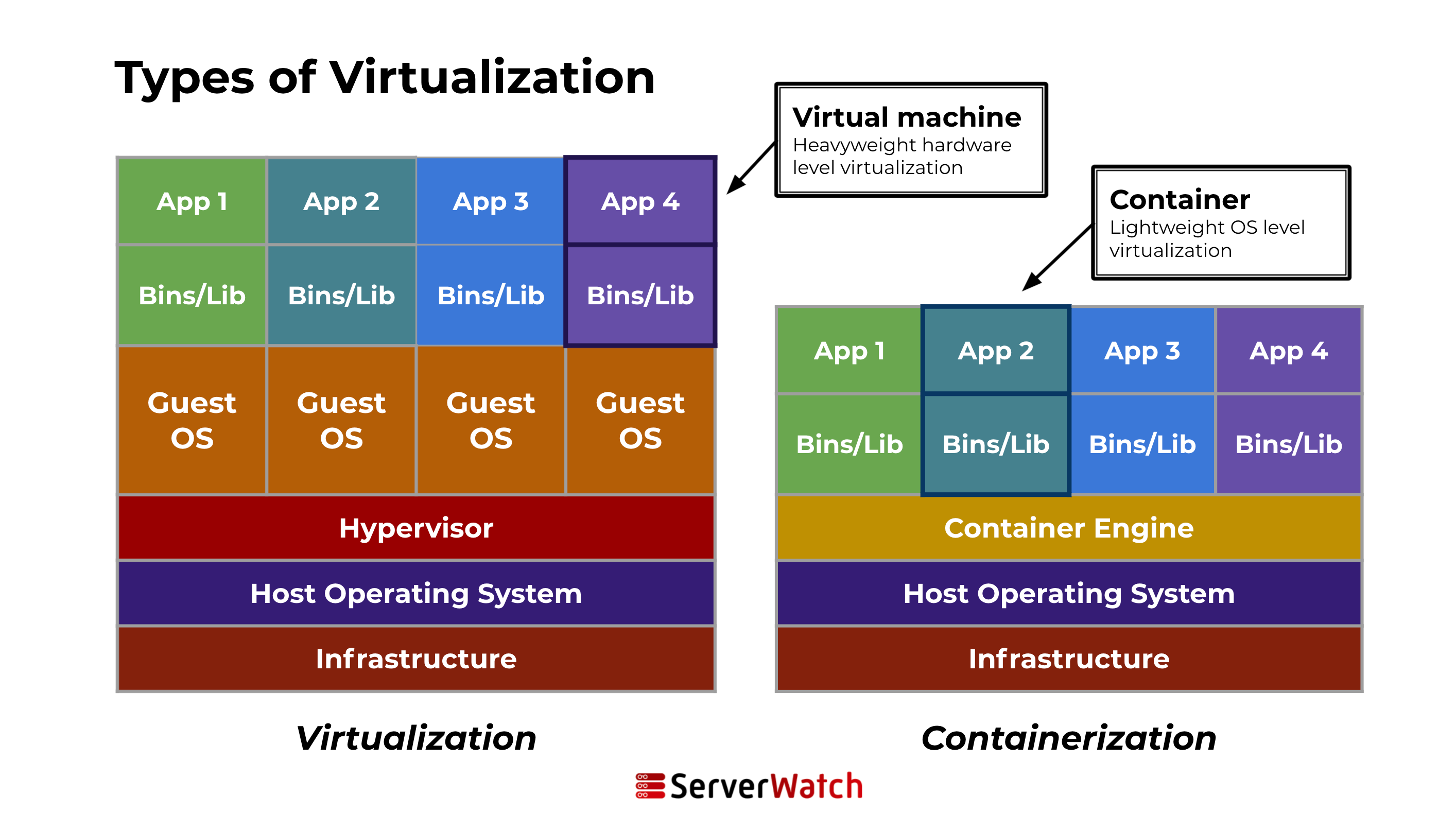

A container is a lightweight, standalone, executable package of software that includes everything needed to run a piece of software: code, runtime, system tools, system libraries, and settings. This technology is part of a broader trend known as containerization, a method of packaging software so it can be run with its dependencies isolated from other processes.

Containers let developers package and run applications in isolated environments. This provides a consistent and efficient way to deploy software across different environments, from a developer's local workstation to production servers, without worrying about differences in OS configuration and underlying infrastructure.

Unlike traditional deployment methods, containers encapsulate an application and its dependencies in a container image, which includes everything the application needs to run: code, runtime, libraries, and system tools. Because containers share the host system's kernel (while keeping their own filesystem, process space, and network stack, with CPU and memory limits enforced per container), they are much lighter and more resource-efficient than virtual machines, each of which boots a full guest operating system.

Key components of a container

Several key components make up a container:

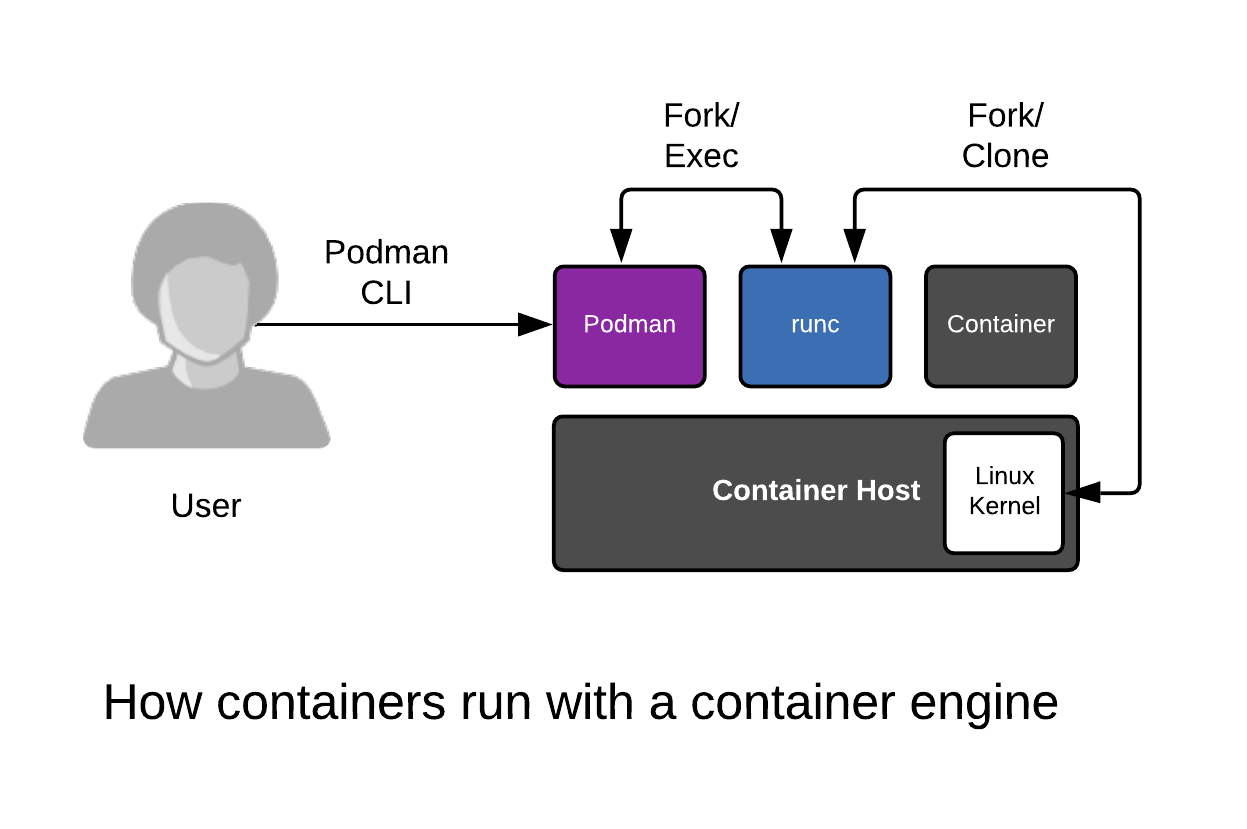

1 Container engine: This is the core software that provides a runtime environment for containers. Examples include Docker, Podman, and the now-discontinued rkt. The engine creates, runs, and manages the lifecycle of containers.

2 Container image: This is a read-only template, stored as a stack of filesystem layers plus metadata, that includes all the components needed to run an application: code, runtime, system tools, libraries, and settings.

3 Registry: This is a storage and content delivery system, holding container images. Users can pull images from a registry to deploy containers.

4 Orchestration tools: These are tools for managing multiple containers. They help automate the deployment, scaling, and operations of containerized applications. Kubernetes is a prime example of an orchestration tool.

5 Namespaces and cgroups: These Linux features are used to isolate containers. Namespaces ensure that each container has its own isolated workspace (including file system, network stack, etc.), and cgroups manage resource allocation (CPU, memory, disk I/O, etc.) to each container.

6 Docker Hub: A cloud-based registry service for sharing and managing container images. https://hub.docker.com/

7 Docker Compose: A tool for defining and running multi-container Docker applications.
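To see item 5 in practice, the sketch below reads the cgroup information the kernel exposes for the current shell. It assumes a Linux host with GNU stat; the function names cgroup_version and cgroup_of are illustrative, not part of any tool. On the CentOS Stream 9 VM used later, docker info reports Cgroup Version: 2, so cgroup_version should print 2 there.

```shell
#!/usr/bin/env bash
# Minimal sketch: inspect cgroups, the kernel feature that limits the
# resources of each container, as seen from the current shell.
# Read-only; needs no root privileges on Linux.

# Print which cgroup hierarchy is mounted at /sys/fs/cgroup:
#   2       -> unified cgroup v2 (filesystem type cgroup2fs)
#   1       -> cgroup v1 or hybrid layout (the mount point is a tmpfs)
#   unknown -> anything else, e.g. /sys/fs/cgroup is missing
cgroup_version() {
  case "$(stat -fc %T /sys/fs/cgroup 2>/dev/null)" in
    cgroup2fs) echo 2 ;;
    tmpfs) echo 1 ;;
    *) echo unknown ;;
  esac
}

# Print the cgroup membership of a process (default: this shell).
# On cgroup v2 this is a single line of the form "0::<path>".
cgroup_of() {
  cat "/proc/${1:-$$}/cgroup"
}

echo "cgroup version: $(cgroup_version)"
cgroup_of
```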

Vagrant Template

Vagrantfile reference: https://developer.hashicorp.com/vagrant/docs/vagrantfile

- CentOS Stream 9 (box generic/centos9s):

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "generic/centos9s"
  config.vm.network "forwarded_port", guest: 80, host: 8080
  config.vm.network "private_network", ip: "192.168.33.10"
  # config.vm.network "public_network"
  # config.vm.synced_folder "../data", "/vagrant_data"
  # config.vm.synced_folder ".", "/vagrant"
  config.vm.provider "virtualbox" do |vb|
    vb.memory = "2048"
    vb.cpus = 2
  end
  config.vm.provision "shell", inline: <<-SHELL
  SHELL
end

- To use Ubuntu 23.10 instead, change the box to generic/ubuntu2310:

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "generic/ubuntu2310"
  config.vm.network "forwarded_port", guest: 80, host: 8080
  config.vm.network "private_network", ip: "192.168.33.10"
  # config.vm.network "public_network"
  # config.vm.synced_folder "../data", "/vagrant_data"
  # config.vm.synced_folder ".", "/vagrant"
  config.vm.provider "virtualbox" do |vb|
    vb.memory = "2048"
    vb.cpus = 2
  end
  config.vm.provision "shell", inline: <<-SHELL
  SHELL
end
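Because the two templates differ only in the config.vm.box line, a small shell helper can write either one. This is a sketch: write_vagrantfile is a hypothetical function, and it leaves out the commented-out lines and the empty provisioner from the templates.

```shell
#!/usr/bin/env bash
# Sketch: write a Vagrantfile like the templates above for any box name.
# write_vagrantfile is a hypothetical helper, not part of Vagrant.
# Usage: write_vagrantfile <box> [directory]
write_vagrantfile() {
  local box="${1:-}" dir="${2:-.}"
  if [ -z "$box" ]; then
    echo "usage: write_vagrantfile <box> [directory]" >&2
    return 1
  fi
  # Unquoted EOF: $box is expanded, every other line is written literally.
  cat > "$dir/Vagrantfile" <<EOF
# -*- mode: ruby -*-
# vi: set ft=ruby :
Vagrant.configure("2") do |config|
  config.vm.box = "$box"
  config.vm.network "forwarded_port", guest: 80, host: 8080
  config.vm.network "private_network", ip: "192.168.33.10"
  config.vm.provider "virtualbox" do |vb|
    vb.memory = "2048"
    vb.cpus = 2
  end
end
EOF
}
```

For example, mkdir ubuntu-vm && write_vagrantfile generic/ubuntu2310 ubuntu-vm, then run vagrant up inside ubuntu-vm.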

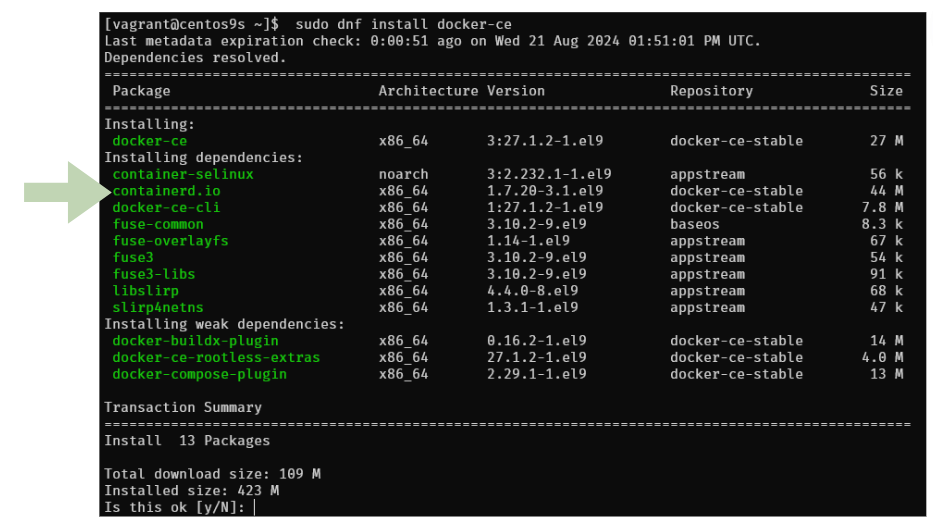

Install Docker on CentOS Stream 9

Docker simplifies the process of managing application processes in containers, which are isolated from each other and the host system. This isolation improves the security and efficiency of deploying applications.

Step 1 Add the Docker repository

- Install the latest Docker release from Docker Inc.'s own repository:

$ sudo dnf install -y yum-utils device-mapper-persistent-data lvm2

$ sudo dnf config-manager --add-repo=https://download.docker.com/linux/centos/docker-ce.repo

$ sudo dnf repolist -v

Step 2 Install Docker CE

$ sudo dnf install docker-ce

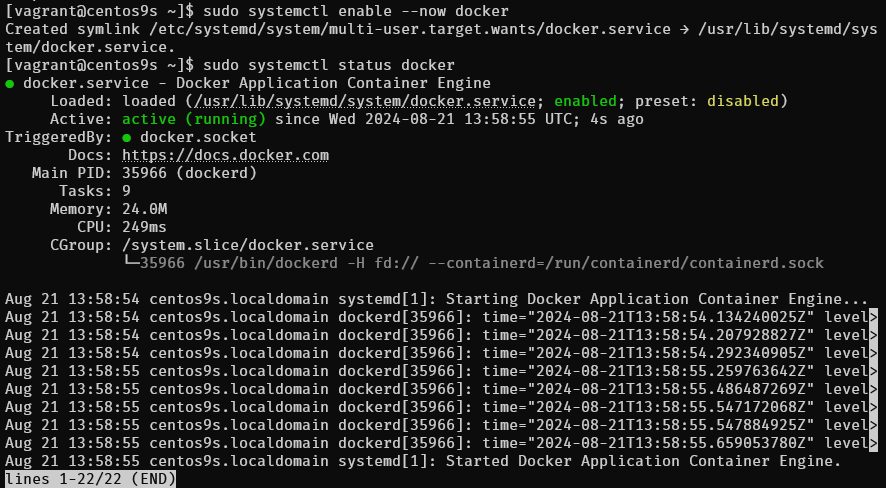

Step 3 Enable and start the Docker service

$ sudo systemctl enable --now docker

$ sudo systemctl status docker

Step 4 Check the Docker version and system information

$ sudo docker version

$ sudo docker info

Client: Docker Engine - Community
 Version: 27.1.2
 Context: default
 Debug Mode: false
 Plugins:
  buildx: Docker Buildx (Docker Inc.)
    Version: v0.16.2
    Path: /usr/libexec/docker/cli-plugins/docker-buildx
  compose: Docker Compose (Docker Inc.)
    Version: v2.29.1
    Path: /usr/libexec/docker/cli-plugins/docker-compose

Server:
 Containers: 0
  Running: 0
  Paused: 0
  Stopped: 0
 Images: 0
 Server Version: 27.1.2
 Storage Driver: overlay2
  Backing Filesystem: xfs
  Supports d_type: true
  Using metacopy: false
  Native Overlay Diff: true
  userxattr: false
 Logging Driver: json-file
 Cgroup Driver: systemd
 Cgroup Version: 2
 Plugins:
  Volume: local
  Network: bridge host ipvlan macvlan null overlay
  Log: awslogs fluentd gcplogs gelf journald json-file local splunk syslog
 Swarm: inactive
 Runtimes: io.containerd.runc.v2 runc
 Default Runtime: runc
 Init Binary: docker-init
 containerd version: 8fc6bcff51318944179630522a095cc9dbf9f353
 runc version: v1.1.13-0-g58aa920
 init version: de40ad0
 Security Options:
  seccomp
   Profile: builtin
  cgroupns
 Kernel Version: 5.14.0-391.el9.x86_64
 Operating System: CentOS Stream 9
 OSType: linux
 Architecture: x86_64
 CPUs: 2
 Total Memory: 1.734GiB
 Name: centos9s.localdomain
 ID: 25e199fb-abb2-4ef6-9ceb-f843d3c50b8c
 Docker Root Dir: /var/lib/docker
 Debug Mode: false
 Experimental: false
 Insecure Registries:
  127.0.0.0/8
 Live Restore Enabled: false

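Step 5 Manage Docker as a non-root user

- By default, running Docker requires root privileges. You can add your user to the docker group to manage Docker as a non-root user. Note that members of the docker group effectively have root access to the host, so add only trusted users.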

$ sudo usermod -aG docker $(whoami)

$ newgrp docker

$ docker ps

CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES

Run newgrp docker (as above), or log out and log back in, so that the new group membership takes effect in your shell.
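The sketch below checks both sides of the group change: the group database that usermod edits, and the groups of the current shell, which only change after newgrp or a new login. in_group and session_has_group are illustrative names; the script relies only on id, tr, and grep.

```shell
#!/usr/bin/env bash
# Sketch: check docker group membership after "usermod -aG docker".

# Is <user> a member of <group> according to the group database?
# (id -nG <user> looks the user up, so it sees usermod's change at once.)
in_group() {
  local user="$1" group="$2"
  id -nG "$user" | tr ' ' '\n' | grep -qxF "$group"
}

# Does the current shell process already have <group>?
# (id -nG without a user reports the groups of the running process.)
session_has_group() {
  id -nG | tr ' ' '\n' | grep -qxF "$1"
}

me=$(id -un)
if ! in_group "$me" docker; then
  echo "$me is not in the docker group; run: sudo usermod -aG docker $me"
elif ! session_has_group docker; then
  echo "$me was added to docker; run newgrp docker or log in again"
else
  echo "$me can run docker without sudo"
fi
```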

- Inspect the data directories of Docker and containerd:

[vagrant@centos9s ~]$ sudo ls -l /var/lib/docker/
total 12
drwx--x--x. 4 root root 138 Aug 21 13:58 buildkit
drwx--x---. 5 root root 4096 Aug 21 14:15 containers
-rw-------. 1 root root 36 Aug 21 13:58 engine-id
drwx------. 3 root root 22 Aug 21 13:58 image
drwxr-x---. 3 root root 19 Aug 21 13:58 network
drwx--x---. 11 root root 4096 Aug 21 14:15 overlay2
drwx------. 4 root root 32 Aug 21 13:58 plugins
drwx------. 2 root root 6 Aug 21 13:58 runtimes
drwx------. 2 root root 6 Aug 21 13:58 swarm
drwx------. 2 root root 6 Aug 21 14:12 tmp
drwx-----x. 2 root root 50 Aug 21 13:58 volumes

[vagrant@centos9s ~]$ sudo ls -l /var/lib/containerd/
total 0
drwxr-xr-x. 4 root root 33 Aug 21 14:06 io.containerd.content.v1.content
drwx--x--x. 2 root root 21 Aug 21 13:58 io.containerd.metadata.v1.bolt
drwx--x--x. 2 root root 6 Aug 21 13:58 io.containerd.runtime.v1.linux
drwx--x--x. 3 root root 18 Aug 21 14:06 io.containerd.runtime.v2.task
drwx------. 2 root root 6 Aug 21 13:58 io.containerd.snapshotter.v1.blockfile
drwx------. 3 root root 23 Aug 21 13:58 io.containerd.snapshotter.v1.native
drwx------. 3 root root 23 Aug 21 13:58 io.containerd.snapshotter.v1.overlayfs
drwx------. 2 root root 6 Aug 21 13:58 tmpmounts

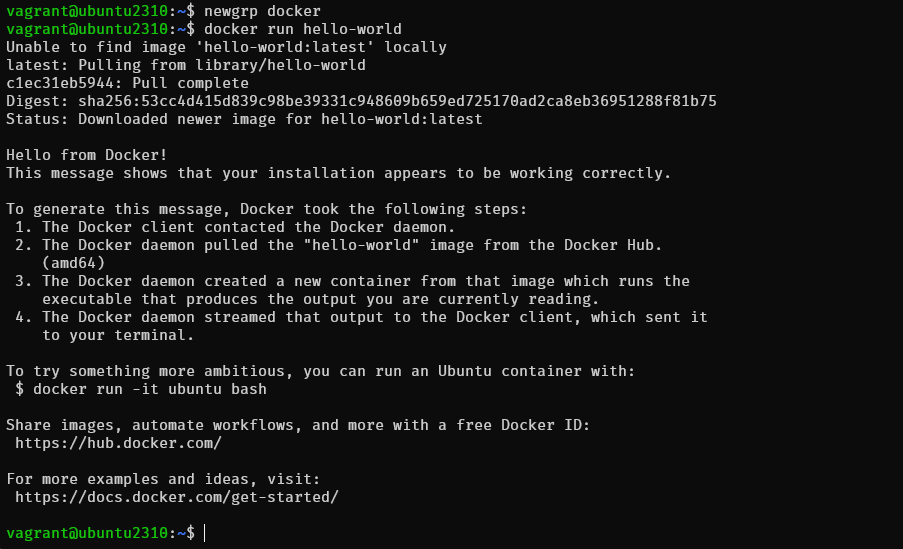

Step 6 Run a test container

- Test with the hello-world image:

$ docker run hello-world

Unable to find image 'hello-world:latest' locally
latest: Pulling from library/hello-world
c1ec31eb5944: Pull complete
Digest: sha256:53cc4d415d839c98be39331c948609b659ed725170ad2ca8eb36951288f81b75
Status: Downloaded newer image for hello-world:latest

Hello from Docker!
This message shows that your installation appears to be working correctly.

To generate this message, Docker took the following steps:
 1. The Docker client contacted the Docker daemon.
 2. The Docker daemon pulled the "hello-world" image from the Docker Hub.
    (amd64)
 3. The Docker daemon created a new container from that image which runs the
    executable that produces the output you are currently reading.
 4. The Docker daemon streamed that output to the Docker client, which sent it
    to your terminal.

To try something more ambitious, you can run an Ubuntu container with:
 $ docker run -it ubuntu bash

Share images, automate workflows, and more with a free Docker ID:
 https://hub.docker.com/

For more examples and ideas, visit:
 https://docs.docker.com/get-started/

Step 7 Check images

- List the local images:

$ docker image ls
REPOSITORY TAG IMAGE ID CREATED SIZE
hello-world latest d2c94e258dcb 15 months ago 13.3kB

Step 8 Download an image

- Download the CentOS Stream 9 image from the quay.io registry:

$ docker pull quay.io/centos/centos:stream9
stream9: Pulling from centos/centos
26ef76492da3: Pull complete
Digest: sha256:a0017fa930fbbbb706509aafdb287b16d9d3d1672f09712a04ea634fea68a85d
Status: Downloaded newer image for quay.io/centos/centos:stream9
quay.io/centos/centos:stream9

- Run an echo command inside a container; the container stops as soon as the command exits:

$ docker run quay.io/centos/centos:stream9 /bin/echo "Welcome to the Docker World!"

Welcome to the Docker World!

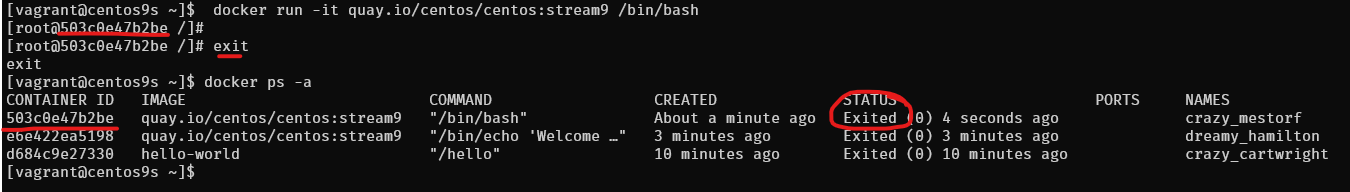

Step 9 Run a container with the -it option to open an interactive session

$ docker run -it quay.io/centos/centos:stream9 /bin/bash

[root@503c0e47b2be /]# uname -a

[root@503c0e47b2be /]# exit

- After exit, you return to the original shell and the container stops. Check the container's status:

$ docker ps -a

Note: to detach from a container without stopping it, press Ctrl+p followed by Ctrl+q.

Step 10 Install Docker Compose

Docker Compose is a tool for defining and running multi-container applications.

Compose simplifies the control of your entire application stack, making it easy to manage services, networks, and volumes in a single, comprehensible YAML configuration file. Then, with a single command, you create and start all the services defined in that file.

Compose works in all environments (production, staging, development, testing, and CI workflows) and provides commands for managing the whole application lifecycle, such as starting, stopping, and rebuilding services, viewing their status, and streaming their logs. Install the Compose plugin (it may already be present: the docker info output above lists compose v2.29.1 as an installed plugin):

$ sudo dnf install docker-compose-plugin

- Check the Docker Compose version:

$ docker compose version
Docker Compose version v2.29.1

Step 11 Configure an application that has web and DB services with Docker Compose

$ mkdir web_db

$ cd web_db

$ vim Dockerfile

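- Add the following content to the Dockerfile:

FROM quay.io/centos/centos:stream9
MAINTAINER ServerWorld <admin@srv.world>
RUN dnf -y install nginx
EXPOSE 80
CMD ["/usr/sbin/nginx", "-g", "daemon off;"]

Note: MAINTAINER is deprecated, and the build output below warns about it. The recommended replacement is LABEL maintainer="ServerWorld <admin@srv.world>".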

- Define the application configuration:

$ vim docker-compose.yml

- Add the following content to docker-compose.yml (YAML indentation is significant):

services:
  db:
    image: mariadb
    volumes:
      - /var/lib/docker/disk01:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: password
      MYSQL_USER: cent
      MYSQL_PASSWORD: password
      MYSQL_DATABASE: cent_db
    ports:
      - "3306:3306"
  web:
    build: .
    ports:
      - "80:80"
    volumes:
      - /var/lib/docker/disk02:/usr/share/nginx/html
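The two files can also be created without an editor, using the heredoc style that Lab1 uses later. This sketch makes two assumptions that are not in the original: LABEL replaces the deprecated MAINTAINER instruction, and the project directory is a parameter. write_web_db_project is a hypothetical helper name; the quoted 'EOF' delimiter stops the shell from expanding anything inside the files.

```shell
#!/usr/bin/env bash
# Sketch: create the web_db project files non-interactively.
# Usage: write_web_db_project [directory]   (default: web_db)
write_web_db_project() {
  local dir="${1:-web_db}"
  mkdir -p "$dir"
  # Dockerfile: same as above, with LABEL instead of MAINTAINER
  cat > "$dir/Dockerfile" <<'EOF'
FROM quay.io/centos/centos:stream9
LABEL maintainer="ServerWorld <admin@srv.world>"
RUN dnf -y install nginx
EXPOSE 80
CMD ["/usr/sbin/nginx", "-g", "daemon off;"]
EOF
  # docker-compose.yml: identical to the file above
  cat > "$dir/docker-compose.yml" <<'EOF'
services:
  db:
    image: mariadb
    volumes:
      - /var/lib/docker/disk01:/var/lib/mysql
    environment:
      MYSQL_ROOT_PASSWORD: password
      MYSQL_USER: cent
      MYSQL_PASSWORD: password
      MYSQL_DATABASE: cent_db
    ports:
      - "3306:3306"
  web:
    build: .
    ports:
      - "80:80"
    volumes:
      - /var/lib/docker/disk02:/usr/share/nginx/html
EOF
}
```

For example: write_web_db_project web_db && cd web_db && docker compose up -d.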

- Build the images and start the services in the background (-d). The output looks like this:

$ docker compose up -d

[+] Running 9/9
 ✔ db Pulled 20.5s
   ✔ 31e907dcc94a Pull complete 4.8s
   ✔ 8687fa065e6d Pull complete 4.9s
   ✔ bc75b4546118 Pull complete 5.5s
   ✔ 90824338d93e Pull complete 5.5s
   ✔ c13aedba8d5d Pull complete 5.6s
   ✔ ad9066662cff Pull complete 15.9s
   ✔ 537f82e52967 Pull complete 16.0s
   ✔ a5e6bca88fae Pull complete 16.0s
[+] Building 52.5s (7/7) FINISHED docker:default
 => [web internal] load build definition from Dockerfile 0.1s
 => => transferring dockerfile: 256B 0.0s
 => WARN: MaintainerDeprecated: Maintainer instruction is deprecated in favor of using label ( 0.1s
 => [web internal] load metadata for quay.io/centos/centos:stream9 0.0s
 => [web internal] load .dockerignore 0.0s
 => => transferring context: 2B 0.0s
 => [web 1/2] FROM quay.io/centos/centos:stream9 0.0s
 => [web 2/2] RUN dnf -y install nginx 51.4s
 => [web] exporting to image 0.8s
 => => exporting layers 0.8s
 => => writing image sha256:2054d035639c4bc56c2dcb6a4c34351f8a6a18e08d53c759af55a977ad217341 0.0s
 => => naming to docker.io/library/web_db-web 0.0s
 => [web] resolving provenance for metadata file 0.0s
[+] Running 3/3
 ✔ Network web_db_default Created 0.6s
 ✔ Container web_db-web-1 Started 0.6s
 ✔ Container web_db-db-1 Started 0.6s

- Confirm that the containers are running:

$ docker ps
CONTAINER ID IMAGE COMMAND CREATED STATUS PORTS NAMES
1cc94e9479fd mariadb "docker-entrypoint.s…" About a minute ago Up About a minute 0.0.0.0:3306->3306/tcp web_db-db-1
5d95646a1f62 web_db-web "/usr/sbin/nginx -g …" About a minute ago Up About a minute 0.0.0.0:80->80/tcp web_db-web-1
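On its first start, the mariadb container needs some time to initialize the database, so the mysql commands that follow can fail to connect if they run immediately after docker compose up -d. A generic retry loop is one simple way to wait. This is a sketch; the helper name retry and the one-second interval are assumptions, not part of Docker or Compose.

```shell
#!/usr/bin/env bash
# Sketch: run a command once per second until it succeeds or the timeout
# (in seconds) runs out. retry is a hypothetical helper, not a Docker command.
# Usage: retry <timeout-seconds> <command> [args...]
retry() {
  local timeout="$1" waited=0
  shift
  until "$@"; do
    if [ "$waited" -ge "$timeout" ]; then
      echo "gave up after ${timeout}s: $*" >&2
      return 1
    fi
    sleep 1
    waited=$((waited + 1))
  done
}
```

For example: retry 60 mysql -h 127.0.0.1 -u cent -ppassword -e 'show databases;'. Putting the password on the command line is acceptable in this lab, but it is visible to other users in ps.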

- Verify access to the database. Install the MySQL client; each command prompts for the password set in docker-compose.yml (password):

$ sudo dnf install mysql

$ mysql -h 127.0.0.1 -u root -p -e "show variables like 'hostname';"
Enter password:
+---------------+--------------+
| Variable_name | Value        |
+---------------+--------------+
| hostname      | 1cc94e9479fd |
+---------------+--------------+

$ mysql -h 127.0.0.1 -u cent -p -e "show databases;"
Enter password:
+--------------------+
| Database           |
+--------------------+
| cent_db            |
| information_schema |
+--------------------+

- Create an index.html page in the directory that is bind-mounted into the web container, then fetch it:

$ sudo su -
# echo "Hello Docker Compose World" > /var/lib/docker/disk02/index.html
# curl 127.0.0.1
Hello Docker Compose World
# exit

Docker Compose commands

- List the service containers:

$ docker compose ps
NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS
web_db-db-1 mariadb "docker-entrypoint.s…" db 7 minutes ago Up 7 minutes 0.0.0.0:3306->3306/tcp
web_db-web-1 web_db-web "/usr/sbin/nginx -g …" web 7 minutes ago Up 7 minutes 0.0.0.0:80->80/tcp

- Open a shell in a service container (the services are db and web):

$ docker compose exec db /bin/bash
root@1cc94e9479fd:/# exit

$ docker compose exec web /bin/bash
[root@5d95646a1f62 /]# exit

- Stop the containers:

$ docker compose stop
[+] Stopping 2/2
 ✔ Container web_db-db-1 Stopped 0.4s
 ✔ Container web_db-web-1 Stopped

- Start only the web service:

$ docker compose up -d web
[+] Running 1/1
 ✔ Container web_db-web-1 Started 0.3s

$ docker compose ps
NAME IMAGE COMMAND SERVICE CREATED STATUS PORTS
web_db-web-1 web_db-web "/usr/sbin/nginx -g …" web 13 minutes ago Up 6 seconds 0.0.0.0:80->80/tcp

- Stop and remove the containers and the network they use:

$ docker compose down
[+] Running 3/3
 ✔ Container web_db-web-1 Removed 0.0s
 ✔ Container web_db-db-1 Removed 0.0s
 ✔ Network web_db_default Removed 0.2s

Install Docker on Ubuntu 23.10

$ cat /etc/os-release
PRETTY_NAME="Ubuntu 23.10"
NAME="Ubuntu"
VERSION_ID="23.10"
VERSION="23.10 (Mantic Minotaur)"
VERSION_CODENAME=mantic
ID=ubuntu
ID_LIKE=debian
HOME_URL="https://www.ubuntu.com/"
SUPPORT_URL="https://help.ubuntu.com/"
BUG_REPORT_URL="https://bugs.launchpad.net/ubuntu/"
PRIVACY_POLICY_URL="https://www.ubuntu.com/legal/terms-and-policies/privacy-policy"
UBUNTU_CODENAME=mantic
LOGO=ubuntu-logo

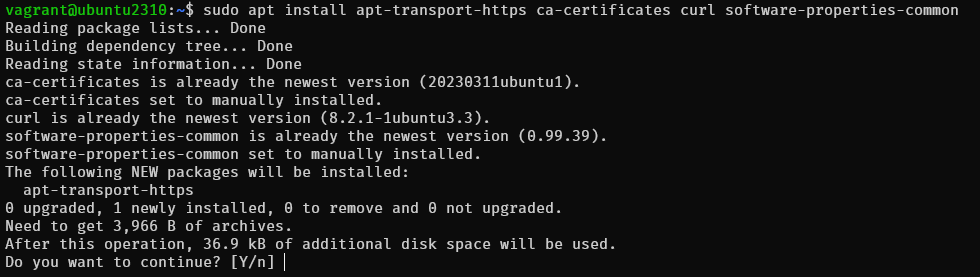

- Step 1: Update the package index:

$ sudo apt update -y

- Step 2: Install prerequisites:

$ sudo apt install apt-transport-https ca-certificates curl software-properties-common

- Step 3: Add the Docker GPG key:

$ curl -fsSL https://download.docker.com/linux/ubuntu/gpg | sudo gpg --dearmor -o /usr/share/keyrings/docker-archive-keyring.gpg

- Step 4: Add the Docker APT repository:

$ echo "deb [arch=$(dpkg --print-architecture) signed-by=/usr/share/keyrings/docker-archive-keyring.gpg] https://download.docker.com/linux/ubuntu $(lsb_release -cs) stable" | sudo tee /etc/apt/sources.list.d/docker.list > /dev/null

- Step 5: Update the package index again:

$ sudo apt update

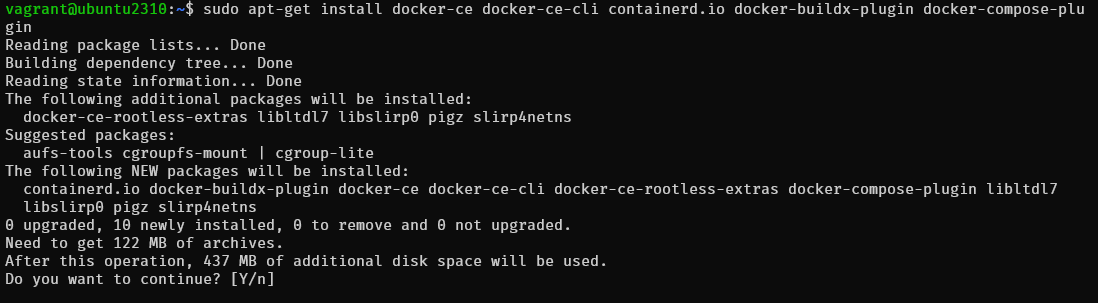

- Step 6: Install the latest version:

$ sudo apt-get install docker-ce docker-ce-cli containerd.io docker-buildx-plugin docker-compose-plugin

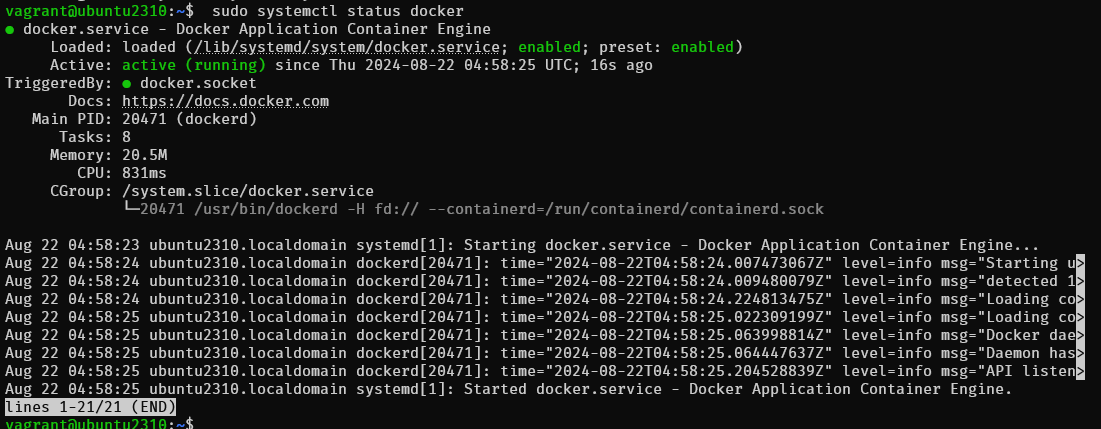

- Step 7: Start and enable Docker. Once the installation is complete, start and enable the Docker service:

$ sudo systemctl enable --now docker

$ sudo systemctl status docker

- Step 8: Create the docker group. To avoid having to use sudo each time you run Docker, add your user to the docker group. The Docker packages normally create the group during installation, in which case groupadd only reports that it already exists:

$ sudo groupadd docker

groupadd: group 'docker' already exists

$ sudo usermod -aG docker $USER

$ newgrp docker

- Step 9: Test:

$ docker run hello-world

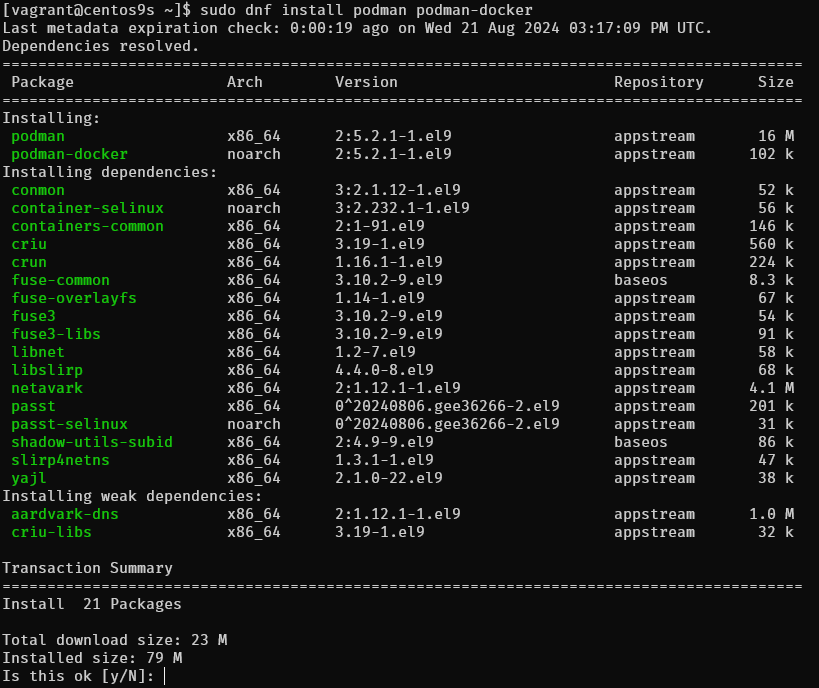

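Install Podman with Docker CLI compatibility on CentOS Stream 9

Podman is a daemonless container engine. The podman-docker package adds a docker command that runs podman, so most docker commands in this guide also work with Podman. It conflicts with the Docker CE packages, so install it on a machine without Docker CE.

$ sudo dnf install podman podman-docker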


Understand Linux namespaces

Objective:

This lab will introduce you to Linux namespaces, which are essential for containerization. We'll explore how namespaces isolate processes and their resources, making them ideal for running multiple containers on a single host. You'll learn how to create and manipulate namespaces using the unshare command and gain hands-on experience with Podman, a popular containerization tool.

Lab Steps:

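- Understanding namespaces
  - What are namespaces?
    - Isolated environments for processes
    - Provide resource isolation and security
    - Types: PID, network, mount, IPC, UTS, user (newer kernels also have cgroup and time namespaces)
  - How do they work? Each namespace type isolates one kind of resource: a mount namespace gives its processes their own mount table (and therefore their own view of the filesystem), a network namespace gives them their own network stack, and so on. Processes inside a namespace cannot see or interact with resources of that kind outside of it.
  - Types of namespaces:
    - pidns – PID namespace
    - netns – network namespace
    - ipcns – IPC namespace
    - mntns – mount namespace
    - utsns – UTS namespace
    - userns – user namespace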

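- Creating namespaces with unshare
  - PID namespace: isolates process IDs
  - Create a child process with its own PID namespace. --fork runs bash as a child of unshare so that it becomes PID 1 in the new namespace, and --mount-proc mounts a fresh /proc so that ps shows only that namespace's processes: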

[vagrant@centos9s ~]$ sudo unshare --fork --pid --mount-proc bash

[root@centos9s vagrant]# ps -o pid,pidns,netns,mntns,ipcns,utsns,userns,args -p 1

PID PIDNS NETNS MNTNS IPCNS UTSNS USERNS COMMAND

1 4026532299 4026531840 4026532298 4026531839 4026531838 4026531837 bash

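Inside the new PID namespace, bash runs as PID 1. The output shows the namespace it belongs to for each of six types:

- PID
- network
- mount
- IPC
- UTS
- user

Compared with the host values in the /proc/<pid>/ns listing below, only the PID and mount namespace numbers are new (--mount-proc also creates a mount namespace); the network, IPC, UTS, and user namespaces are still shared with the host.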

The /proc/<pid>/ns directory

[vagrant@centos9s ~]$ ps aux | grep vagrant
root 3851 0.0 0.3 19404 11520 ? Ss 13:20 0:00 sshd: vagrant [priv]
vagrant 3856 0.0 0.3 22644 13516 ? Ss 13:20 0:00 /usr/lib/systemd/systemd --user
vagrant 3858 0.0 0.1 108256 7476 ? S 13:20 0:00 (sd-pam)
vagrant 3865 0.0 0.1 19780 7460 ? S 13:20 0:00 sshd: vagrant@pts/0
vagrant 3866 0.0 0.1 8408 4992 pts/0 Ss 13:20 0:00 -bash
vagrant 4042 0.0 0.0 10104 3328 pts/0 R+ 13:38 0:00 ps aux
vagrant 4043 0.0 0.0 6428 2176 pts/0 R+ 13:38 0:00 grep --color=auto vagrant

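Generally, the /proc/<pid>/ns directory contains one symbolic link for each namespace type the process belongs to; the number in brackets identifies the namespace.

For instance, let's use ls to check the namespaces of the process with PID 3856:

[vagrant@centos9s ~]$ ls -l /proc/3856/ns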

total 0
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:20 cgroup -> 'cgroup:[4026531835]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 ipc -> 'ipc:[4026531839]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 mnt -> 'mnt:[4026531841]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 net -> 'net:[4026531840]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 pid -> 'pid:[4026531836]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:38 pid_for_children -> 'pid:[4026531836]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:38 time -> 'time:[4026531834]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:38 time_for_children -> 'time:[4026531834]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 user -> 'user:[4026531837]'
lrwxrwxrwx. 1 vagrant vagrant 0 Aug 20 13:31 uts -> 'uts:[4026531838]'
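The same information can be read from a script. A sketch assuming a Linux host with bash: list_namespaces prints each namespace link of a process, and same_namespace tells whether two processes share a namespace of a given type (both are illustrative names). Reading another user's /proc/<pid>/ns entries, such as those of PID 1, requires root.

```shell
#!/usr/bin/env bash
# Sketch: inspect /proc/<pid>/ns from a script.

# Print "<type> <link target>" for each namespace of a process (default: this shell).
list_namespaces() {
  local pid="${1:-$$}" link
  for link in /proc/"$pid"/ns/*; do
    printf '%s %s\n' "${link##*/}" "$(readlink "$link")"
  done
}

# same_namespace <pid1> <pid2> <type>
# Succeeds when both links point to the same namespace, e.g. net:[4026531840].
# Fails if either link cannot be read (missing process or no permission).
same_namespace() {
  local a b
  a=$(readlink "/proc/$1/ns/$3") || return 1
  b=$(readlink "/proc/$2/ns/$3") || return 1
  [ "$a" = "$b" ]
}

list_namespaces
```

For example, in a root shell on the lab VM, same_namespace 1 $$ net should succeed in an ordinary shell but fail inside sudo ip netns exec red bash, because that shell runs in the red network namespace.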

Lab: Exploring network namespaces with ip netns

Objective:

This lab will introduce you to IP network namespaces (netnamespaces) and how to manage them using the ip netns command. You'll learn how to create, list, delete, and interact with netnamespaces.

Lab Steps:

- Creating a Netnamespace

- Use the add command to create a new netnamespace:

[vagrant@centos9s ~]$ sudo ip netns add red

[vagrant@centos9s ~]$ sudo ip netns add blue

- Listing Netnamespaces

- Use the list command to view all existing netnamespaces:

[vagrant@centos9s ~]$ sudo ip netns list

- List the network interfaces on the host:

[vagrant@centos9s ~]$ ip link

- List the interfaces inside each namespace (a new network namespace contains only a loopback interface, lo, which is down):

[vagrant@centos9s ~]$ sudo ip netns exec red ip link

[vagrant@centos9s ~]$ sudo ip netns exec blue ip link

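- Entering a network namespace
  - Use the exec command to run commands inside a namespace, for example an interactive shell (type exit to return to the host):

[vagrant@centos9s ~]$ sudo ip netns exec red bash
[root@centos9s vagrant]# ps -o pid,pidns,args
[root@centos9s vagrant]# exit

The PIDNS value is the same as on the host, because ip netns exec changes the network namespace, not the PID namespace.

Or use the shorter -n option of ip:

[vagrant@centos9s ~]$ sudo ip -n red link
[vagrant@centos9s ~]$ sudo ip -n blue link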

- Check the ARP table on the host:

[vagrant@centos9s ~]$ arp

- Check the routing table:

[vagrant@centos9s ~]$ route

(arp and route come from the net-tools package; ip neigh and ip route are the iproute2 equivalents.)

- Configuring network interfaces
  - Create a veth pair (a virtual cable with two ends) and move one end into each namespace:

[vagrant@centos9s ~]$ sudo ip link add veth-red type veth peer name veth-blue

[vagrant@centos9s ~]$ sudo ip link set veth-red netns red

[vagrant@centos9s ~]$ sudo ip link set veth-blue netns blue

- Assign IP addresses to veth-red and veth-blue and bring both interfaces up:

[vagrant@centos9s ~]$ sudo ip -n red addr add 192.168.15.1/24 dev veth-red

[vagrant@centos9s ~]$ sudo ip -n blue addr add 192.168.15.2/24 dev veth-blue

[vagrant@centos9s ~]$ sudo ip -n red link set veth-red up

[vagrant@centos9s ~]$ sudo ip -n blue link set veth-blue up

If something goes wrong, clear the addresses and start over:

# ip -n red addr flush dev veth-red
# ip -n blue addr flush dev veth-blue

- Check the addresses:

[vagrant@centos9s ~]$ sudo ip netns exec red ip a

[vagrant@centos9s ~]$ sudo ip netns exec blue ip a

- Ping from blue to red and from red to blue (press Ctrl+c to stop):

[vagrant@centos9s ~]$ sudo ip netns exec blue ping 192.168.15.1

[vagrant@centos9s ~]$ sudo ip netns exec red ping 192.168.15.2

- Check the ARP tables in red and blue:

[vagrant@centos9s ~]$ sudo ip netns exec red arp

[vagrant@centos9s ~]$ sudo ip netns exec blue arp

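- The host's ARP table, however, has no entries for 192.168.15.1 or 192.168.15.2, because the veth pair connects the two namespaces directly and the host's network stack is not involved: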

[vagrant@centos9s ~]$ arp

- Final script 1 (run as root)

ip netns add red
ip netns add blue
# show namespaces
ip netns show
# connect red and blue with one veth pair
ip link add veth-red type veth peer name veth-blue
ip link set veth-red netns red
ip link set veth-blue netns blue
ip -n red addr add 192.168.15.1/24 dev veth-red
ip -n blue addr add 192.168.15.2/24 dev veth-blue
ip -n red link set veth-red up
ip -n blue link set veth-blue up
# test both directions (-c 3 stops after three packets)
ip netns exec red ping -c 3 192.168.15.2
ip netns exec blue ping -c 3 192.168.15.1
# cleanup
ip netns delete red
ip netns delete blue

Connect more than two namespaces

- Create a virtual switch with a Linux bridge (or Open vSwitch) and connect the namespaces to it:

[vagrant@centos9s ~]$ sudo ip link add v-net-0 type bridge

[vagrant@centos9s ~]$ ip a

[vagrant@centos9s ~]$ sudo ip link set dev v-net-0 up

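- Install the bridge-utils package, which provides brctl. On CentOS Stream 9 it is not in the base repositories (it is available from EPEL); without it, ip link show master v-net-0 lists the bridge ports instead:

[vagrant@centos9s ~]$ sudo dnf install bridge-utils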

[vagrant@centos9s ~]$ brctl show

- Delete the old veth-red/veth-blue pair, which is no longer needed:

[vagrant@centos9s ~]$ sudo ip -n red link del veth-red

Deleting veth-red automatically removes its peer, veth-blue.

- Create new cables (veth pairs) to connect each namespace to the bridge:

[vagrant@centos9s ~]$ sudo ip link add veth-red type veth peer name veth-red-br

[vagrant@centos9s ~]$ sudo ip link add veth-blue type veth peer name veth-blue-br

- Move one end of each cable into its namespace and attach the other end to the bridge:

[vagrant@centos9s ~]$ sudo ip link set veth-red netns red

[vagrant@centos9s ~]$ sudo ip link set veth-red-br master v-net-0

[vagrant@centos9s ~]$ sudo ip link set veth-blue netns blue

[vagrant@centos9s ~]$ sudo ip link set veth-blue-br master v-net-0

- Set the IP addresses and bring the interfaces up:

[vagrant@centos9s ~]$ sudo ip -n red addr add 192.168.15.1/24 dev veth-red

[vagrant@centos9s ~]$ sudo ip -n blue addr add 192.168.15.2/24 dev veth-blue

[vagrant@centos9s ~]$ sudo ip -n red link set veth-red up

[vagrant@centos9s ~]$ sudo ip -n blue link set veth-blue up

[vagrant@centos9s ~]$ sudo ip link set veth-red-br up

[vagrant@centos9s ~]$ sudo ip link set veth-blue-br up

- Test ping

[vagrant@centos9s ~]$ sudo ip netns exec red ping 192.168.15.2

[vagrant@centos9s ~]$ sudo ip netns exec blue ping 192.168.15.1

- Run brctl show again

[vagrant@centos9s ~]$ brctl show

- Final script: connect the namespaces with a Linux bridge (run as root)

ip netns add red
ip netns add blue
# show namespaces
ip netns show
# create the bridge (virtual switch) and bring it up
ip link add v-net-0 type bridge
ip link set dev v-net-0 up
# one veth pair (cable) per namespace
ip link add veth-red type veth peer name veth-red-br
ip link add veth-blue type veth peer name veth-blue-br
# plain end into the namespace, -br end onto the bridge
ip link set veth-red netns red
ip link set veth-red-br master v-net-0
ip link set veth-blue netns blue
ip link set veth-blue-br master v-net-0
ip -n red addr add 192.168.15.1/24 dev veth-red
ip -n blue addr add 192.168.15.2/24 dev veth-blue
ip -n red link set veth-red up
ip -n blue link set veth-blue up
ip link set veth-red-br up
ip link set veth-blue-br up
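The section title mentions more than two namespaces, but the scripts attach only red and blue. Below is a sketch that generalizes the final bridge script to any number of namespaces. Assumptions not from the lab: namespace names are given as arguments, addresses are assigned as 192.168.15.1, .2, ... in argument order, the file name netns-bridge.sh is hypothetical, and DRY_RUN=1 prints the commands instead of running them so you can review them without root. Linux limits interface names to 15 characters, so veth-<name>-br allows names of at most 7 characters.

```shell
#!/usr/bin/env bash
# Sketch: attach any number of network namespaces to one Linux bridge.
# Must run as root unless DRY_RUN=1.

BRIDGE=v-net-0

# Print the command in dry-run mode, otherwise execute it.
run() {
  if [ "${DRY_RUN:-0}" = 1 ]; then echo "$*"; else "$@"; fi
}

connect_namespaces() {
  local i=1 ns
  run ip link add "$BRIDGE" type bridge
  run ip link set dev "$BRIDGE" up
  for ns in "$@"; do
    run ip netns add "$ns"
    # One veth pair per namespace: veth-<ns> inside, veth-<ns>-br on the bridge
    run ip link add "veth-$ns" type veth peer name "veth-$ns-br"
    run ip link set "veth-$ns" netns "$ns"
    run ip link set "veth-$ns-br" master "$BRIDGE"
    run ip -n "$ns" addr add "192.168.15.$i/24" dev "veth-$ns"
    run ip -n "$ns" link set "veth-$ns" up
    run ip link set "veth-$ns-br" up
    i=$((i + 1))
  done
}

# Deleting a namespace destroys its veth end, which also removes the peer.
cleanup_namespaces() {
  local ns
  for ns in "$@"; do run ip netns delete "$ns"; done
  run ip link del "$BRIDGE"
}

# Act only when executed with arguments, e.g.
#   sudo ./netns-bridge.sh red blue green
#   sudo ./netns-bridge.sh --cleanup red blue green
if [ "${BASH_SOURCE[0]}" = "$0" ] && [ "$#" -gt 0 ]; then
  set -e
  if [ "$1" = "--cleanup" ]; then
    shift
    cleanup_namespaces "$@"
  else
    connect_namespaces "$@"
  fi
fi
```

For example, DRY_RUN=1 ./netns-bridge.sh red blue green prints the 23 commands (2 for the bridge, 7 per namespace) without changing anything.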

ip netns vs. unshare: A Comparison

Both ip netns and unshare are tools used to create and manage isolated environments (namespaces) on Linux systems, but they serve different purposes and have distinct functionalities.

ip netns

- Purpose: Primarily designed for network namespace management.

- Functionality:

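- Creates named, persistent network namespaces (each one is bind-mounted under /var/run/netns, so it survives even with no process inside), and lists, deletes, and manipulates them.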

- Configures network interfaces, routes, and other network-related settings within namespaces.

- Provides a high-level interface for network namespace management.

unshare

- Purpose: A more general-purpose tool for creating various types of namespaces, including PID, network, mount, IPC, UTS, and user namespaces.

- Functionality:

- Creates child processes with specific namespaces; a namespace created this way exists only as long as a process (or a bind mount) keeps it alive.

- Allows for granular control over namespace creation and configuration.

- Can be used to isolate processes in a variety of ways beyond just networking.

Key Differences

- Scope: ip netns is specifically focused on network namespaces, while unshare can create and manage multiple types of namespaces.

- Level of Control: unshare provides more granular control over namespace creation and configuration, allowing you to specify which namespaces to isolate and how.

- Interface: ip netns offers a more user-friendly interface for managing network namespaces, while unshare is more flexible but requires a deeper understanding of namespace concepts.

When to Use Which

- Network Namespace Management: Use ip netns when you primarily need to create, manage, and configure network namespaces.

- General Namespace Creation: Use unshare when you need to isolate processes in a variety of ways, including PID, mount, IPC, UTS, or user namespaces.

In summary, ip netns is a specialized tool for network namespace management, while unshare is a more general-purpose tool for creating various types of namespaces. The best choice depends on your specific needs and the level of control you require.

Docker and Docker Compose

Docker

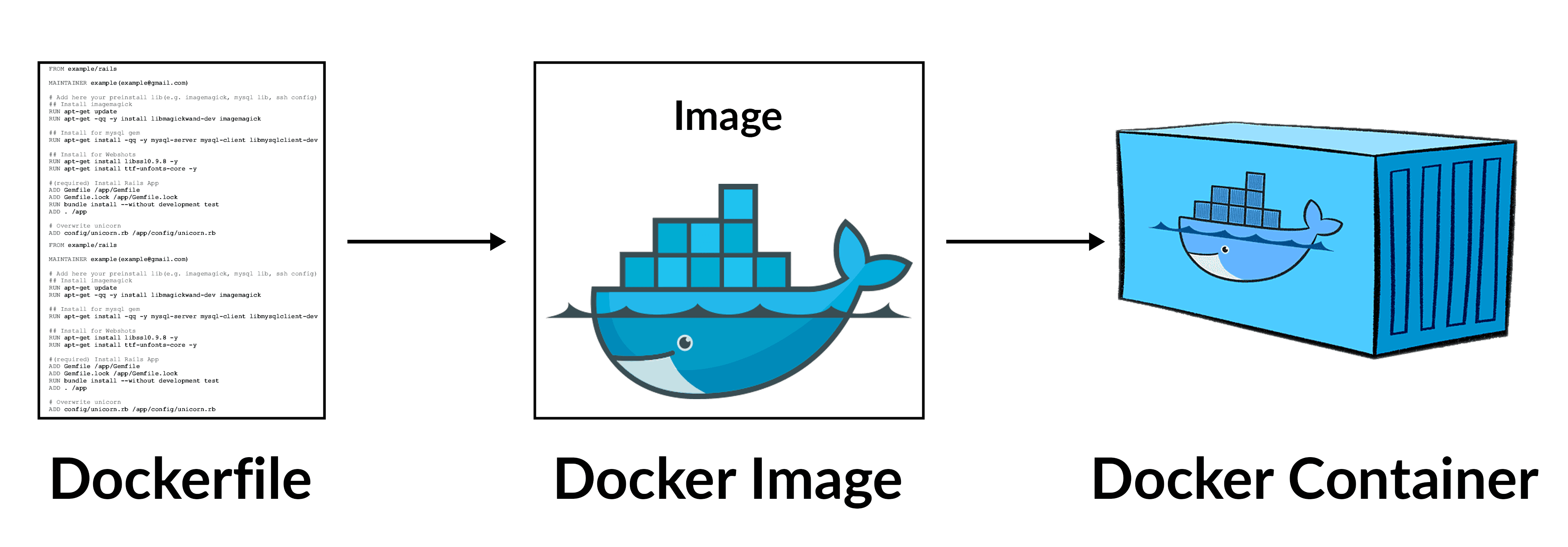

Docker is a powerful open-source platform that simplifies developing, packaging, and deploying applications by using containers. It provides an isolated environment, known as a container, where applications can run consistently across different platforms. Containers are isolated from one another and bundle their own software, libraries, and configuration files.

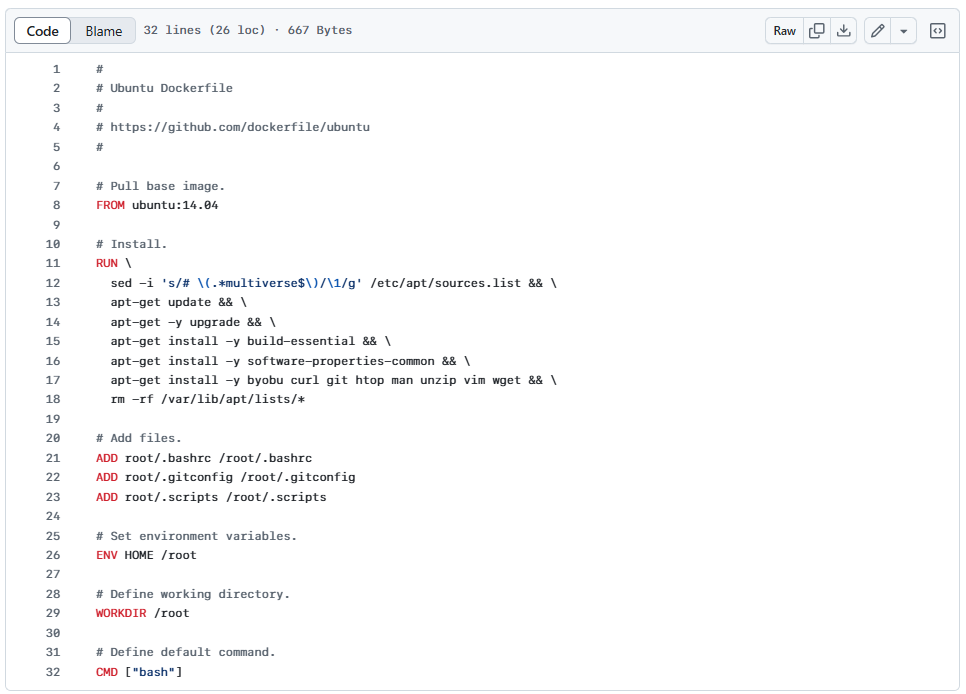

Dockerfile

A Dockerfile is a plain text file that contains instructions for building Docker images. There’s a Dockerfile standard they follow, and the Docker daemon is ultimately responsible for executing the Dockerfile and generating the image.

A Dockerfile usually starts from a base image, named in the FROM instruction. For example, it might build on a specific operating system or Java distribution.

From there, a Dockerfile can perform various operations to build an image, such as installing packages, copying files, and setting environment variables.

Format

Reference: https://docs.docker.com/reference/dockerfile/

Best practices: https://docs.docker.com/build/building/best-practices/

Here is the format of a Dockerfile:

# Comment

INSTRUCTION arguments

Example

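FROM busybox
ENV FOO=/bar
# Build-time variable; override with: docker build --build-arg CODE_VERSION=...
ARG CODE_VERSION=latest
# ${FOO} and $FOO both expand to /bar, so this is WORKDIR /bar
WORKDIR ${FOO}
# Same as: ADD . /bar
ADD . $FOO
# \$ escapes the variable: this copies a file literally named $FOO
# (it must exist in the build context) to /quux
COPY \$FOO /quux
ENTRYPOINT ["/bin/ping"]
CMD ["localhost"]

Comments must be on their own lines: a # later in a line is passed to the instruction as part of its arguments.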

The ENTRYPOINT specifies a command that will always be executed when the container starts. The CMD specifies arguments that will be fed to the ENTRYPOINT. CMD will be overridden when running the container with alternative arguments.
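The rule above can be modeled in a few lines of bash. This is a simplified sketch, not Docker's implementation: it covers only exec-form instructions, ignores docker run --entrypoint, and uses bash arrays named ENTRYPOINT and CMD as stand-ins for the Dockerfile instructions. effective_command is a hypothetical name.

```shell
#!/usr/bin/env bash
# Simplified model of how Docker builds the container's command from an
# exec-form ENTRYPOINT, an exec-form CMD, and the arguments given to
# "docker run". Not Docker's code: shell form and --entrypoint are ignored.

# Stand-ins for the busybox example above: ENTRYPOINT ["/bin/ping"], CMD ["localhost"]
ENTRYPOINT=(/bin/ping)
CMD=(localhost)

# effective_command [docker-run-args...]
effective_command() {
  local -a cmd=("${ENTRYPOINT[@]}")
  if [ "$#" -gt 0 ]; then
    cmd+=("$@")          # run arguments replace CMD entirely
  else
    cmd+=("${CMD[@]}")   # no arguments: fall back to the default CMD
  fi
  echo "${cmd[*]}"
}

effective_command              # prints: /bin/ping localhost
effective_command google.com   # prints: /bin/ping google.com
```

With ENTRYPOINT=() and CMD=(echo "Hello Docker"), the model matches Lab2: effective_command prints echo Hello Docker, and effective_command ping google.com replaces the whole command.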

Docker Compose

Docker Compose is a tool for defining and running multi-container Docker applications. Using a YAML configuration file, Docker Compose allows us to configure multiple containers in one place. We can then start and stop all of those containers at once using a single command.

Additionally, Docker Compose allows us to define common objects shared by containers. For example, we can define a volume once and mount it inside every container, so that they share a common file system. Or, we can define the network used by one or more containers to communicate.

Docker commands

Here are some common Docker commands you might use:

- docker build: Builds a Docker image from a Dockerfile.
- docker run: Runs a command in a new container.
- docker ps: Lists running containers.
- docker stop: Stops one or more running containers.
- docker rm: Removes one or more containers.
- docker images: Lists the Docker images available on your system.
- docker pull: Pulls an image from a registry.
- docker push: Pushes an image to a registry.

Prepare VM

mkdir Docker

cd Docker

code Vagrantfile

Vagrantfile content:

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "generic/centos9s"
  config.vm.network "forwarded_port", guest: 80, host: 8080
  config.vm.network "private_network", ip: "192.168.33.10"
  # config.vm.network "public_network"
  # config.vm.synced_folder "../data", "/vagrant_data"
  # config.vm.synced_folder ".", "/vagrant"
  config.vm.provider "virtualbox" do |vb|
    vb.memory = "2048"
    vb.cpus = 2
  end
  config.vm.provision "shell", inline: <<-SHELL
  SHELL
end

- Start the VM and log in:

vagrant up
vagrant ssh

- After the class, shut down and delete the VM:

vagrant halt
vagrant destroy

Key understanding

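- Docker has no default ENTRYPOINT; the default command comes from the image's CMD. A shell-form CMD such as CMD bash is executed as /bin/sh -c bash, while an exec-form CMD such as CMD ["bash"], and any command given to docker run, is executed directly.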

$ docker run -i -t ubuntu bash
root@267a4961b03c:/# exit

- The image is ubuntu and the command is bash.

- Arguments after the image name replace the image's CMD, so the container runs bash directly.

- Run without bash:

[vagrant@centos9s lab2]$ docker run -i -t ubuntu
root@6725f7472f23:/#

- The result is the same, because the ubuntu image's Dockerfile sets a default CMD that starts bash.

So remember: when an image defines CMD, running

docker run -i -t ubuntu

is exactly the same as running

docker run -i -t ubuntu <cmd>

where <cmd> is the CMD value. If the image also defines an ENTRYPOINT, <cmd> is passed to it as arguments.

By default there is no ENTRYPOINT, and whether a shell is used depends on the form of CMD (shell form runs through /bin/sh -c, exec form does not). Later, users asked to be able to customize the executable, so the ENTRYPOINT instruction and the docker run --entrypoint option were introduced. The ENTRYPOINT specifies a command that is always executed when the container starts, and CMD specifies the default arguments fed to the ENTRYPOINT.

Lab1

cd ~
mkdir lab1
cd lab1
cat <<EOF | tee Dockerfile
FROM ubuntu
# Install ping
RUN apt-get update && apt-get install -y iputils-ping
ENTRYPOINT ["/bin/ping"]
CMD ["localhost"]
EOF
cat Dockerfile

- Build an image from the Dockerfile:

docker build -t lab1 .

- List images:

$ docker images
REPOSITORY TAG IMAGE ID CREATED SIZE
lab1 latest 6734c52ab144 4 weeks ago 78.1MB

- Run

format command

docker run image-id

$ docker run lab1

PING localhost (::1) 56 data bytes

64 bytes from localhost (::1): icmp_seq=1 ttl=64 time=0.027 ms

64 bytes from localhost (::1): icmp_seq=2 ttl=64 time=0.086 ms

64 bytes from localhost (::1): icmp_seq=3 ttl=64 time=0.089 ms

64 bytes from localhost (::1): icmp_seq=4 ttl=64 time=0.096 ms

#ctrl-c to exit

Summary: ping localhost is the default command when the container runs without arguments.

$ docker run lab1 google.com

PING google.com (142.250.199.14) 56(84) bytes of data.

64 bytes from kul09s14-in-f14.1e100.net (142.250.199.14): icmp_seq=1 ttl=109 time=27.6 ms

64 bytes from kul09s14-in-f14.1e100.net (142.250.199.14): icmp_seq=2 ttl=109 time=27.1 ms

64 bytes from kul09s14-in-f14.1e100.net (142.250.199.14): icmp_seq=3 ttl=109 time=27.7 ms

64 bytes from kul09s14-in-f14.1e100.net (142.250.199.14): icmp_seq=4 ttl=109 time=28.0 ms

#ctrl-c

Summary: google.com overrides the CMD argument, so the container runs /bin/ping google.com.

To remove all Docker containers, use the following commands.

- Step 1: Stop all running containers

$ docker stop $(docker ps -q)

- Step 2: Remove all containers. After stopping them, remove all containers with this command:

$ docker rm $(docker ps -a -q)

Explanation:

- docker ps -q: Lists the IDs of all running containers.

- docker ps -a -q: Lists the IDs of all containers, including stopped ones.

- docker stop $(docker ps -q): Stops all running containers by passing their IDs to the docker stop command.

- docker rm $(docker ps -a -q): Removes all containers by passing their IDs to the docker rm command.
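The same two-step cleanup can be sketched in Python with subprocess. This is an illustration only: the helper names are hypothetical and it assumes the docker CLI is on PATH. It also skips a step when there are no IDs, because docker stop and docker rm exit with an error when called without any container IDs, which is what the shell version does when $(docker ps -q) is empty.

```python
import subprocess


def container_ids(include_stopped: bool = False) -> list[str]:
    """Return container IDs, like `docker ps -q` (or `docker ps -a -q`)."""
    args = ["docker", "ps", "-q"]
    if include_stopped:
        args.insert(2, "-a")
    out = subprocess.run(args, capture_output=True, text=True, check=True).stdout
    return out.split()


def cleanup_commands(running: list[str], all_ids: list[str]) -> list[list[str]]:
    """Build the stop/rm commands, skipping any step that has no IDs."""
    commands = []
    if running:
        commands.append(["docker", "stop", *running])
    if all_ids:
        commands.append(["docker", "rm", *all_ids])
    return commands


def remove_all_containers() -> None:
    # Collect running IDs and all IDs, then stop first and remove second
    for cmd in cleanup_commands(container_ids(), container_ids(include_stopped=True)):
        subprocess.run(cmd, check=True)
```

Calling remove_all_containers() performs the same cleanup as the two shell commands, without failing when nothing is running.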

Lab2

cd ~

mkdir lab2

cd lab2

cat <<EOF | tee Dockerfile

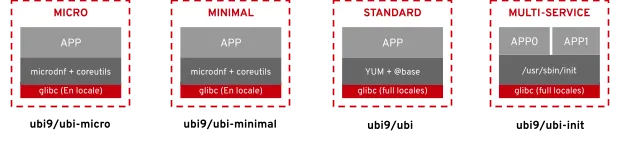

FROM registry.access.redhat.com/ubi9/ubi

RUN yum update -y && yum install iputils -y

WORKDIR /

CMD ["echo", "Hello Docker"]

EOF

cat Dockerfile

- Build the image

$ docker build -t lab2 .

$ docker images

REPOSITORY   TAG       IMAGE ID       CREATED      SIZE
lab2         latest    8a6e9d1d5a47   8 days ago   213MB

- Run

$ docker run lab2

$ docker run lab2 ping google.com

Summary: ping google.com overrides CMD (press Ctrl+C to stop ping).

Both CMD and ENTRYPOINT instructions define what command gets executed when running a container. A few rules describe how they work together:

1 A Dockerfile should specify at least one of the CMD or ENTRYPOINT instructions.

2 ENTRYPOINT should be defined when using the container as an executable.

3 CMD should be used as a way of defining default arguments for an ENTRYPOINT command or for executing an ad-hoc command in a container.

4 CMD is overridden when the container is run with alternative arguments.

Remove all containers before the next lab:

$ docker stop $(docker ps -q)

$ docker rm $(docker ps -a -q)

Lab3

$ cd ~

$ mkdir redis-server

$ cd redis-server

$ cat <<EOF | tee Dockerfile

FROM ubuntu:24.04

RUN apt-get update && \
    apt-get install -y redis-server && \
    apt-get clean

EXPOSE 6379

CMD ["redis-server", "--protected-mode", "no"]

EOF

$ cat Dockerfile

- Build image

$ docker build -t redis-server .

- Run image

$ docker run -d -p 6379:6379 --name redis redis-server

$ docker container ls

Running a MySQL container

- Pull the MySQL image

$ docker pull mysql:latest

$ docker images

- Run mysql container

$ docker run --name test-mysql -e MYSQL_ROOT_PASSWORD=strong_password -d mysql

$ docker ps

- run: creates a new container from an image and starts it (to start an existing, stopped container, use docker start instead).

- --name CONTAINER_NAME: gives the container a name. The name should be readable and short. In our case, the name is test-mysql.

- -e ENV_VARIABLE=value: the -e flag creates an environment variable that is accessible inside the container. Setting MYSQL_ROOT_PASSWORD is crucial: the MySQL image will not initialize a new database without one of its root password options, and we need the password to run SQL commands later from the container. Make sure to store your strong password somewhere safe (not your brain).

- -d: short for detached; the -d flag makes the container run in the background. If you remove this flag, the command keeps printing logs until the container stops.

- image_name: the final argument is the name of the image the container is created from. In this case, our image is mysql.
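To see how these flags fit together, here is a small hypothetical Python helper that assembles the same docker run command from its parts. It only builds the argument list and does not call Docker; the function name and parameters are illustrative, not part of any Docker API.

```python
import shlex
from typing import Optional


def build_run_args(
    image: str,
    name: Optional[str] = None,
    env: Optional[dict[str, str]] = None,
    detach: bool = False,
    ports: Optional[dict[int, int]] = None,
) -> list[str]:
    """Assemble a `docker run` argv; `ports` maps host port -> container port."""
    args = ["docker", "run"]
    if name:
        args += ["--name", name]
    for key, value in (env or {}).items():
        args += ["-e", f"{key}={value}"]  # environment variable inside the container
    if detach:
        args.append("-d")  # run in the background
    for host_port, container_port in (ports or {}).items():
        args += ["-p", f"{host_port}:{container_port}"]
    # Options must come before the image; anything after it is the container command
    args.append(image)
    return args


cmd = build_run_args("mysql", name="test-mysql",
                     env={"MYSQL_ROOT_PASSWORD": "strong_password"}, detach=True)
print(shlex.join(cmd))  # docker run --name test-mysql -e MYSQL_ROOT_PASSWORD=strong_password -d mysql
```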

Open a terminal inside the container:

docker exec -it <container_name> bash

$ docker exec -it test-mysql bash

// result

bash-5.1# mysql -u root -p

Enter password: ...

mysql> show databases;

mysql> \q

Bye

bash-5.1# exit

exit

- Stop and delete container

$ docker stop test-mysql

$ docker rm test-mysql

- Start container again with port mapping

$ docker run -d --name test-mysql -e MYSQL_ROOT_PASSWORD=strong_password -p 3306:3306 mysql

Check port

$ docker port test-mysql

3306/tcp -> 0.0.0.0:3306
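The docker port output is easy to consume from a script. A minimal Python sketch that parses lines in the format shown above; newer Docker releases may also print an IPv6 line such as 3306/tcp -> [::]:3306, which the parser handles as well. PortBinding and parse_docker_port are hypothetical names.

```python
from typing import NamedTuple


class PortBinding(NamedTuple):
    container_port: int
    protocol: str
    host_ip: str
    host_port: int


def parse_docker_port(output: str) -> list[PortBinding]:
    """Parse lines like '3306/tcp -> 0.0.0.0:3306' or '3306/tcp -> [::]:3306'."""
    bindings = []
    for line in output.splitlines():
        if "->" not in line:
            continue
        left, right = (part.strip() for part in line.split("->", 1))
        port, protocol = left.split("/", 1)
        # rsplit keeps IPv6 addresses (which contain ':') intact
        host_ip, host_port = right.rsplit(":", 1)
        bindings.append(PortBinding(int(port), protocol, host_ip.strip("[]"), int(host_port)))
    return bindings


print(parse_docker_port("3306/tcp -> 0.0.0.0:3306"))
```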

- install mysql client in vagrant

$ sudo dnf install mysql

- Start connect to mysql at port 3306

$ mysql --host=127.0.0.1 --port=3306 -u root -p

Enter password:

- Remove

$ docker stop test-mysql; docker rm test-mysql

- Configure the MySQL container with a custom configuration directory

cd ~

mkdir -p test-mysql/config

cd test-mysql

pwd

$ docker run \
  --name test-mysql \
  -v "$(pwd)/config":/etc/mysql/conf.d \
  -e MYSQL_ROOT_PASSWORD=strong_password \
  -d mysql

- Preserve data after deleting the Docker container

Persisting the data stored in your MySQL containers is crucial for several reasons:

- Data persistence: Without a named volume, the database files are tied to the container. Once you remove it, the data is either lost or left behind in an anonymous volume that is hard to find again. Decoupling the data from the container keeps it accessible.

- Sharing data between containers: Detaching the data from the container allows multiple containers to access it. This way, you can avoid data duplication and simplify synchronization between projects that use the same data.

- Portability and backup: Persisted data can be backed up and shared independently, providing a reliable way to recover from data loss or accidental deletion.

- Improved performance: Volumes write directly to the host filesystem and bypass the storage driver's copy-on-write layer, so I/O-heavy workloads such as databases usually perform better than when they write to the container's writable layer.

1 create volume

$ docker volume create test-mysql-data

$ docker volume ls

$ docker stop test-mysql; docker rm test-mysql

$ docker run \
  --name test-mysql \
  -v test-mysql-data:/var/lib/mysql \
  -e MYSQL_ROOT_PASSWORD=strong_password \
  -d mysql

- 2 inspect volume

$ docker inspect test-mysql-data

[

{

"CreatedAt": "2024-09-05T00:28:33Z",

"Driver": "local",

"Labels": null,

"Mountpoint": "/var/lib/docker/volumes/test-mysql-data/_data",

"Name": "test-mysql-data",

"Options": null,

"Scope": "local"

}

]
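docker inspect prints JSON, so it is easy to process from a script. A minimal Python sketch that extracts the volume's host path, using the output shown above as sample input (in practice you could capture the output of docker volume inspect test-mysql-data with subprocess instead):

```python
import json

# Output of `docker inspect test-mysql-data`, copied from above
inspect_output = """
[
  {
    "CreatedAt": "2024-09-05T00:28:33Z",
    "Driver": "local",
    "Labels": null,
    "Mountpoint": "/var/lib/docker/volumes/test-mysql-data/_data",
    "Name": "test-mysql-data",
    "Options": null,
    "Scope": "local"
  }
]
"""

volumes = json.loads(inspect_output)  # docker inspect returns a JSON array
for volume in volumes:
    print(volume["Name"], "->", volume["Mountpoint"])
```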

$ sudo ls /var/lib/docker/volumes/test-mysql-data/_data

- 3 Clean all

$ docker stop test-mysql; docker rm test-mysql

$ docker volume rm test-mysql-data

PostgreSQL Container

- Step 1: Pull the image (https://hub.docker.com/_/postgres/)

$ docker pull postgres

- Step2 Run container

$ docker run --name my-postgres -e POSTGRES_PASSWORD=my_password -d -p 5432:5432 postgres

$ docker ps

Explanation

- my-postgres is the name of the container (you can choose a different name if you prefer).

- my_password is the password you want to set for the “postgres” user in PostgreSQL.

- The -d option runs the container in the background.

- The -p 5432:5432 option maps port 5432 from the container to port 5432 on the host, allowing you to connect to PostgreSQL from the host.

Step3 check port

$ docker port my-postgres

5432/tcp -> 0.0.0.0:5432

- Step 4: Access point

Pull the pgAdmin image:

$ docker pull dpage/pgadmin4

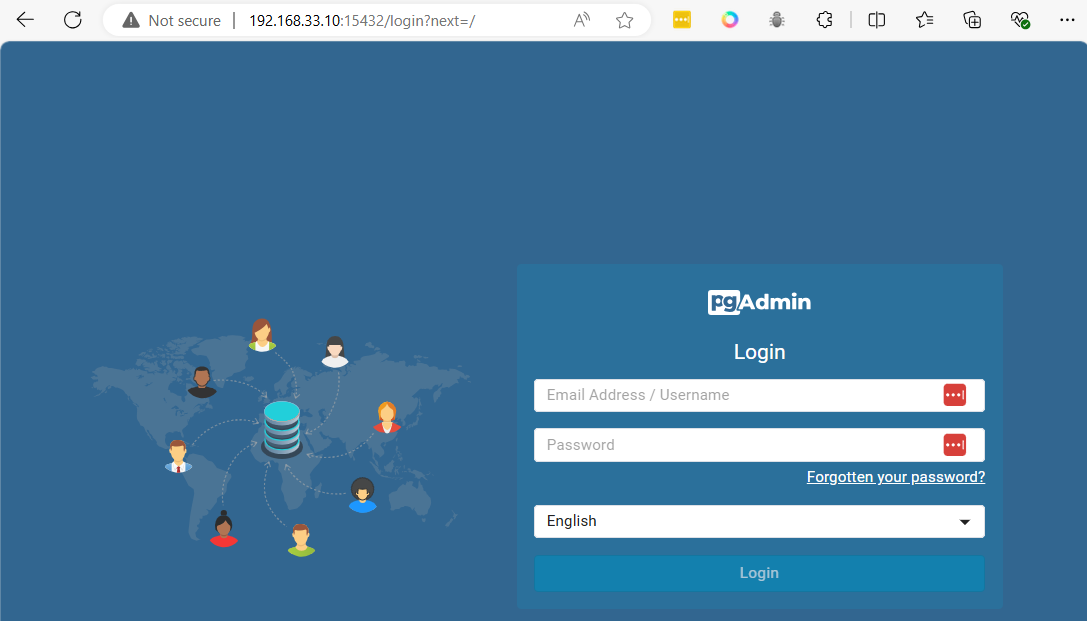

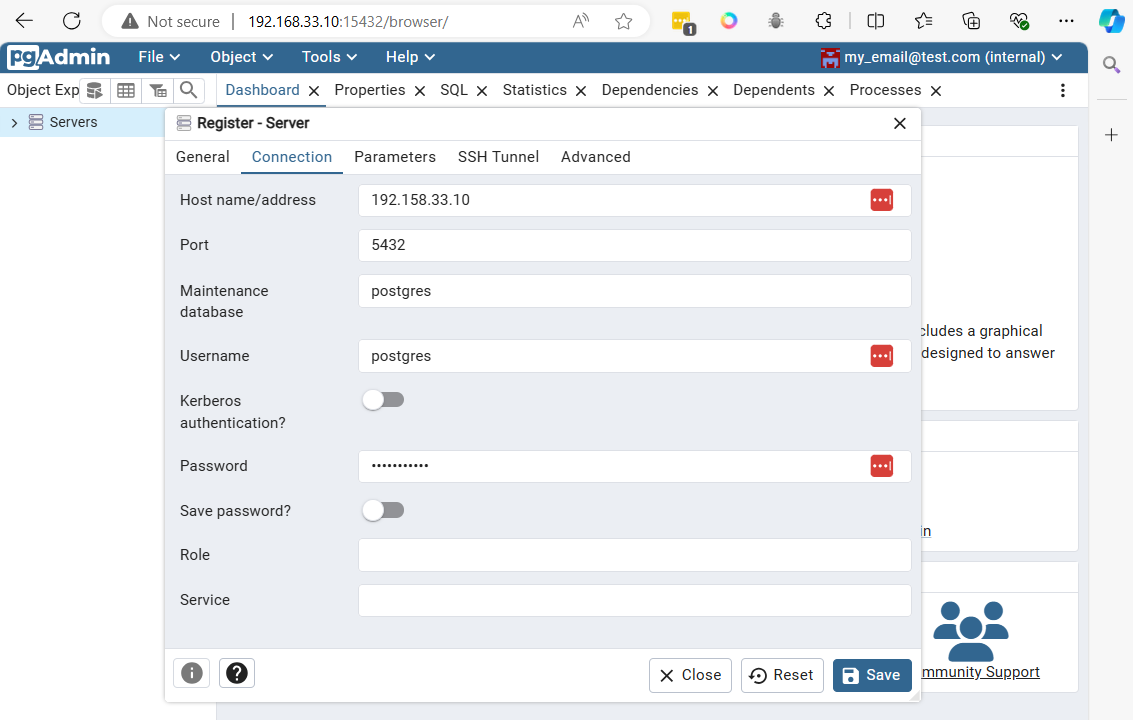

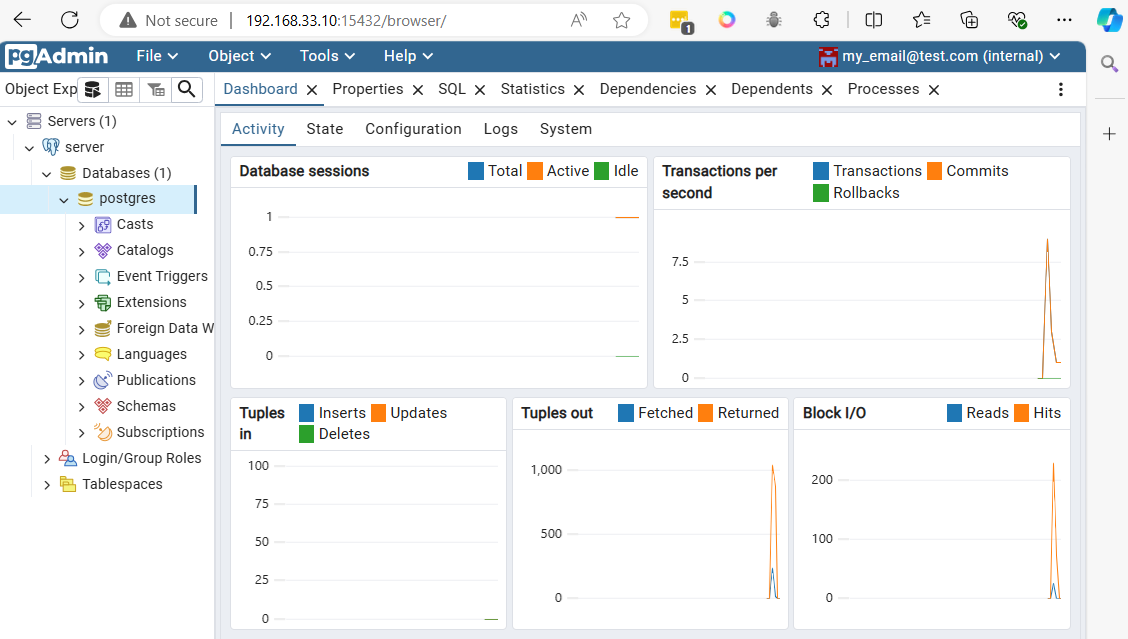

- Step 5 Run

docker run --name test-pgadmin -p 15432:80 -e "PGADMIN_DEFAULT_EMAIL=my_email@test.com" -e "PGADMIN_DEFAULT_PASSWORD=my_password" -d dpage/pgadmin4

Explanation

- test-pgadmin is the name of the container being created.

- The -p 15432:80 option maps port 15432 on the host to port 80 in the container, where the pgAdmin web interface listens.

- PGADMIN_DEFAULT_EMAIL will be the login you use to access pgAdmin.

- PGADMIN_DEFAULT_PASSWORD will be the password you use to access pgAdmin.
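In a -p value the left side is always the host and the right side the container. A small Python sketch that splits such a value, assuming only the [IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL] form used in this lab (bracketed IPv6 addresses and random host ports are not handled); parse_publish is a hypothetical name.

```python
from typing import NamedTuple


class PortSpec(NamedTuple):
    host_ip: str
    host_port: int
    container_port: int
    protocol: str


def parse_publish(spec: str) -> PortSpec:
    """Parse a -p value of the form [IP:]HOST_PORT:CONTAINER_PORT[/PROTOCOL]."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 2:
        # No IP given: Docker publishes on all host interfaces
        host_ip, host_port, container_port = "0.0.0.0", parts[0], parts[1]
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported -p value: {spec!r}")
    return PortSpec(host_ip, int(host_port), int(container_port), protocol or "tcp")


print(parse_publish("15432:80"))
```

For 15432:80 this yields host port 15432 and container port 80, which is why the dashboard is opened on port 15432 while pgAdmin itself listens on port 80.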

Prepare the firewall (firewall-cmd):

sudo firewall-cmd --permanent --add-port=5432/tcp

sudo firewall-cmd --reload

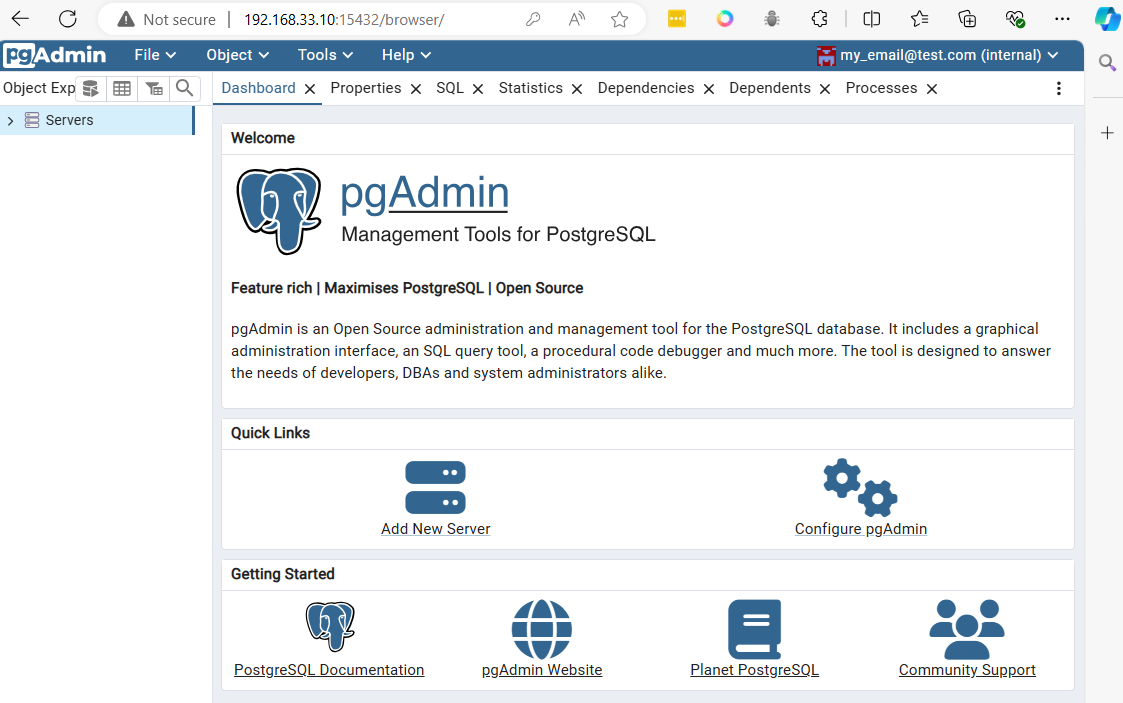

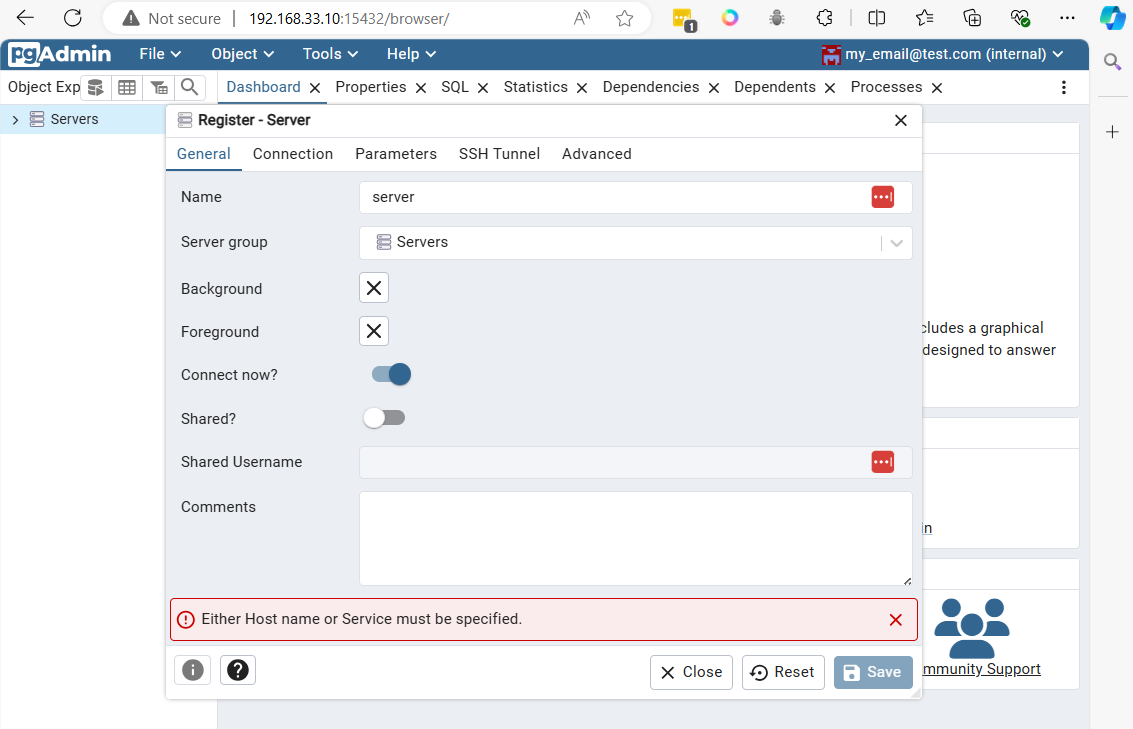

Access the dashboard:

http://192.168.33.10:15432/

After logging in with the defined email (my_email@test.com) and password (my_password), the main panel appears.

Connect to PostgreSQL from the Vagrant VM command line

sudo ss -tulpn | grep 5432

sudo dnf install postgresql

Connect with the command line:

PGPASSWORD=my_password psql -h localhost -p 5432 -U postgres

// result

psql (13.16, server 16.4 (Debian 16.4-1.pgdg120+1))

WARNING: psql major version 13, server major version 16.

Some psql features might not work.

Type "help" for help.

postgres=# \q

$ PGPASSWORD=my_password psql -h localhost -p 5432 -U postgres -c '\l'

                                  List of databases
   Name    |  Owner   | Encoding |  Collate   |   Ctype    |   Access privileges
-----------+----------+----------+------------+------------+-----------------------
 postgres  | postgres | UTF8     | en_US.utf8 | en_US.utf8 |
 template0 | postgres | UTF8     | en_US.utf8 | en_US.utf8 | =c/postgres          +
           |          |          |            |            | postgres=CTc/postgres
 template1 | postgres | UTF8     | en_US.utf8 | en_US.utf8 | =c/postgres          +
           |          |          |            |            | postgres=CTc/postgres
(3 rows)
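The same connection settings can also be written as a single connection URI, which psql accepts in place of separate flags (for example psql "postgresql://postgres:my_password@localhost:5432/postgres"). A minimal Python sketch that builds such a URI from the values used in this lab; percent-encoding matters once a password contains characters like @ or /. The helper name postgres_uri is hypothetical.

```python
from urllib.parse import quote


def postgres_uri(user: str, password: str, host: str, port: int, dbname: str) -> str:
    """Build a postgresql:// URI, percent-encoding user, password and database name."""
    return (
        f"postgresql://{quote(user, safe='')}:{quote(password, safe='')}"
        f"@{host}:{port}/{quote(dbname, safe='')}"
    )


print(postgres_uri("postgres", "my_password", "localhost", 5432, "postgres"))
# postgresql://postgres:my_password@localhost:5432/postgres
```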

- Clean all

$ docker stop $(docker ps -q)

$ docker rm $(docker ps -a -q)

Create docker-compose.yml

$ mkdir postgres

$ cd postgres

$ cat <<EOF | tee docker-compose.yml
services:
  postgres:
    image: postgres
    environment:
      POSTGRES_PASSWORD: my_password
    ports:
      - "5432:5432"
    volumes:
      - pgdata:/var/lib/postgresql/data
    networks:
      - pg_network

  pgadmin:
    image: dpage/pgadmin4
    environment:
      PGADMIN_DEFAULT_EMAIL: my_email@test.com
      PGADMIN_DEFAULT_PASSWORD: my_password
    ports:
      - "15432:80"
    networks:
      - pg_network

networks:
  pg_network:

volumes:
  pgdata:
EOF

$ cat docker-compose.yml

- Docker compose

$ docker compose up -d

[+] Running 4/4

✔ Network postgres_default Created 0.5s

✔ Volume "postgres_pgdata" Created 0.0s

✔ Container postgres-postgres-1 Started 0.5s

✔ Container postgres-pgadmin-1 Started 0.5s

- Clean

$ docker stop $(docker ps -q)

$ docker rm $(docker ps -a -q)

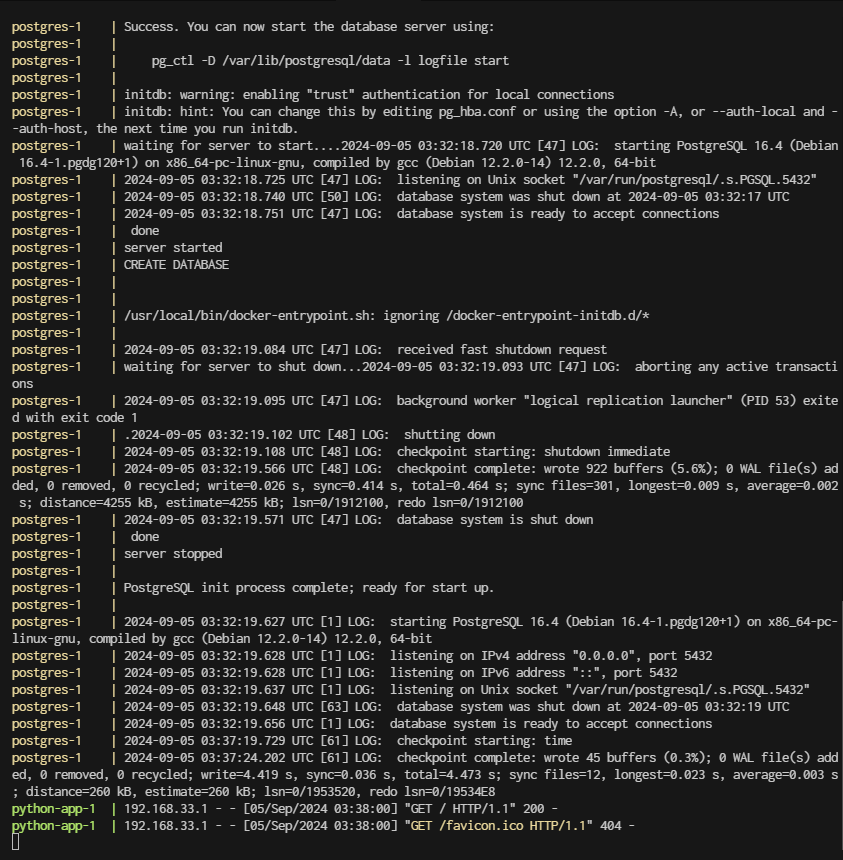

Containerize Application

Application structure

.
├── docker-compose.yml
├── node-app
│   ├── Dockerfile
│   ├── app.js
│   └── package.json
└── python-app
    ├── Dockerfile
    ├── app.py
    └── requirements.txt

Create the application

cd ~

mkdir lab-python-app

cd lab-python-app

mkdir {node-app,python-app}

- Copy and paste the code below into the terminal to create docker-compose.yml:

cat <<EOF | tee docker-compose.yml
services:
  postgres:
    image: postgres
    environment:
      POSTGRES_USER: my_user
      POSTGRES_PASSWORD: my_password
      POSTGRES_DB: my_database
    ports:
      - "5432:5432"
    volumes:
      - pgdata:/var/lib/postgresql/data
    networks:
      - app_network

  node-app:
    build: ./node-app
    environment:
      DB_HOST: postgres
      DB_USER: my_user
      DB_PASSWORD: my_password
      DB_NAME: my_database
    depends_on:
      - postgres
    ports:
      - "3000:3000"
    networks:
      - app_network

  python-app:
    build: ./python-app
    environment:
      DB_HOST: postgres
      DB_USER: my_user
      DB_PASSWORD: my_password
      DB_NAME: my_database
    depends_on:
      - postgres
    ports:
      - "5000:5000"
    networks:
      - app_network

networks:
  app_network:

volumes:
  pgdata:
EOF
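Compose uses depends_on to decide start order: a service is started only after the services it depends on have been started. That ordering is a topological sort of the dependency graph, which can be sketched with Python's standard graphlib module (Python 3.9+). This is a model of the idea, not Compose's own code, and it covers start order only, not whether PostgreSQL is ready to accept connections.

```python
from graphlib import TopologicalSorter

# depends_on relationships from the docker-compose.yml above
depends_on = {
    "postgres": [],
    "node-app": ["postgres"],
    "python-app": ["postgres"],
}

# static_order() yields each service only after all of its dependencies
start_order = list(TopologicalSorter(depends_on).static_order())
print(start_order)

# When shutting down, dependents stop first, i.e. the reverse order
stop_order = list(reversed(start_order))
```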

- Copy and paste the code below into the terminal to create node-app/Dockerfile:

cat <<EOF | tee node-app/Dockerfile

FROM node:22

WORKDIR /usr/src/app

COPY package*.json ./

RUN npm install

COPY . .

EXPOSE 3000

CMD ["node", "app.js"]

EOF

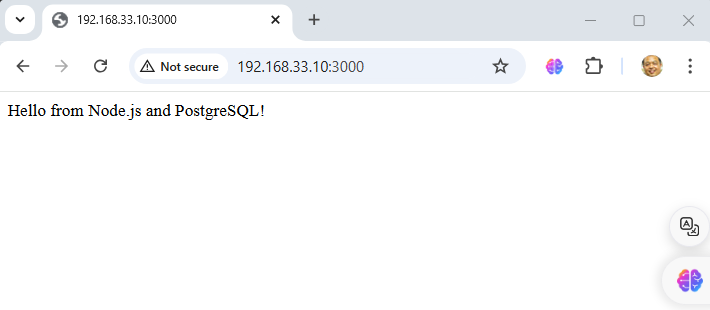

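- Copy and paste the code below into the terminal to create node-app/app.js. The quoted 'EOF' stops the shell from expanding the backticks and ${port} in the JavaScript code:

cat <<'EOF' | tee node-app/app.js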

const { Client } = require('pg');

const client = new Client({

host: process.env.DB_HOST,

user: process.env.DB_USER,

password: process.env.DB_PASSWORD,

database: process.env.DB_NAME,

});

client.connect()

.then(() => console.log('Connected to PostgreSQL from Node.js!'))

.catch(err => console.error('Connection error', err.stack));

const express = require('express');

const app = express();

const port = 3000;

app.get('/', (req, res) => {

res.send('Hello from Node.js and PostgreSQL!');

});

app.listen(port, () => {

console.log(`Node.js app listening at http://localhost:${port}`);

});

EOF

- Copy and paste the code below into the terminal to create node-app/package.json:

cat <<EOF | tee node-app/package.json

{

"name": "node-app",

"version": "1.0.0",

"description": "A simple Node.js app with PostgreSQL",

"main": "app.js",

"scripts": {

"start": "node app.js"

},

"dependencies": {

"express": "^4.17.1",

"pg": "^8.7.1"

}

}

EOF

- Copy and paste the code below into the terminal to create python-app/Dockerfile:

cat <<EOF | tee python-app/Dockerfile

FROM python:3.12

WORKDIR /usr/src/app

COPY requirements.txt ./

RUN pip install --no-cache-dir -r requirements.txt

COPY . .

EXPOSE 5000

CMD ["python", "./app.py"]

EOF

- Copy and paste the code below into the terminal to create python-app/requirements.txt:

cat <<EOF | tee python-app/requirements.txt

flask

psycopg2

EOF

- Copy and paste the code below into the terminal to create python-app/app.py:

cat <<EOF | tee python-app/app.py

from flask import Flask

import psycopg2

import os

app = Flask(__name__)

def connect_db():

conn = psycopg2.connect(

host=os.getenv("DB_HOST"),

database=os.getenv("DB_NAME"),

user=os.getenv("DB_USER"),

password=os.getenv("DB_PASSWORD")

)

return conn

@app.route('/')

def hello():

try:

conn = connect_db()

cursor = conn.cursor()

cursor.execute('SELECT version()')

db_version = cursor.fetchone()

cursor.close()

conn.close()

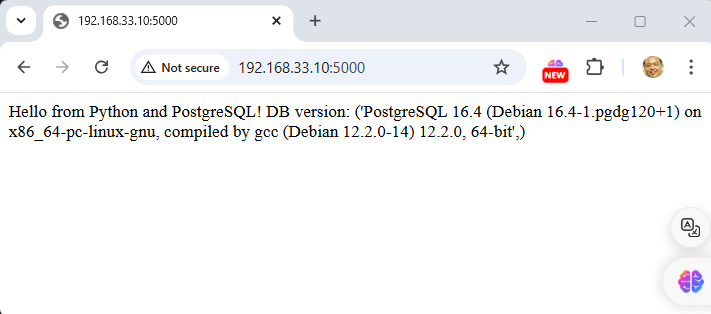

return f"Hello from Python and PostgreSQL! DB version: {db_version}"

except Exception as e:

return str(e)

if __name__ == '__main__':

app.run(host='0.0.0.0', port=5000)

EOF

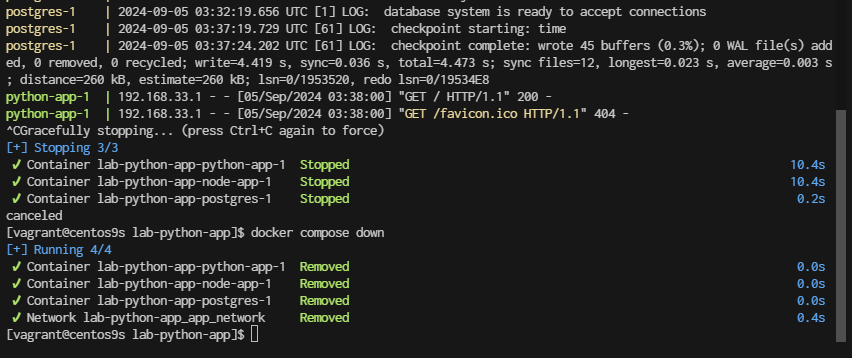

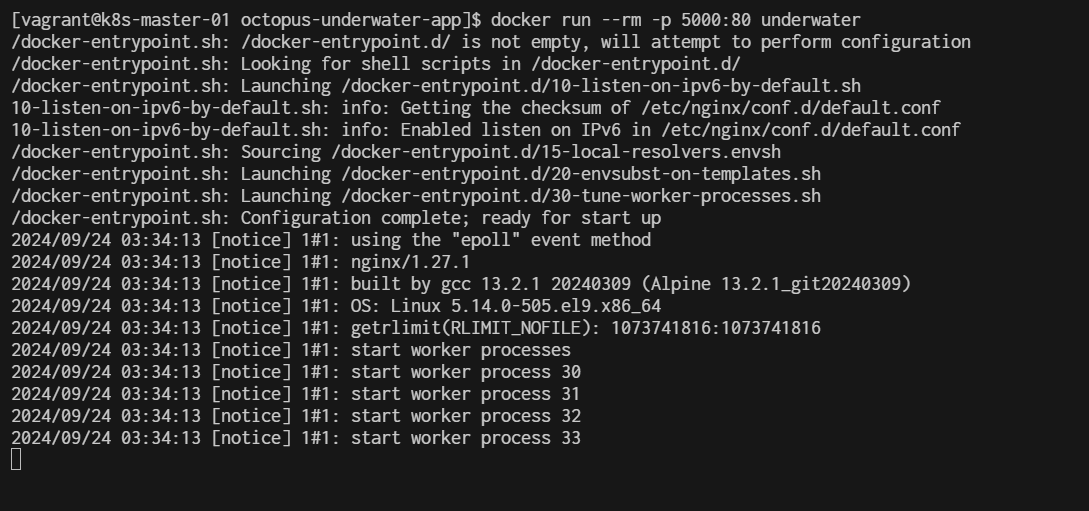

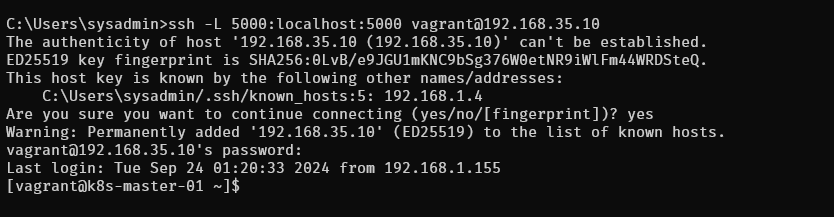

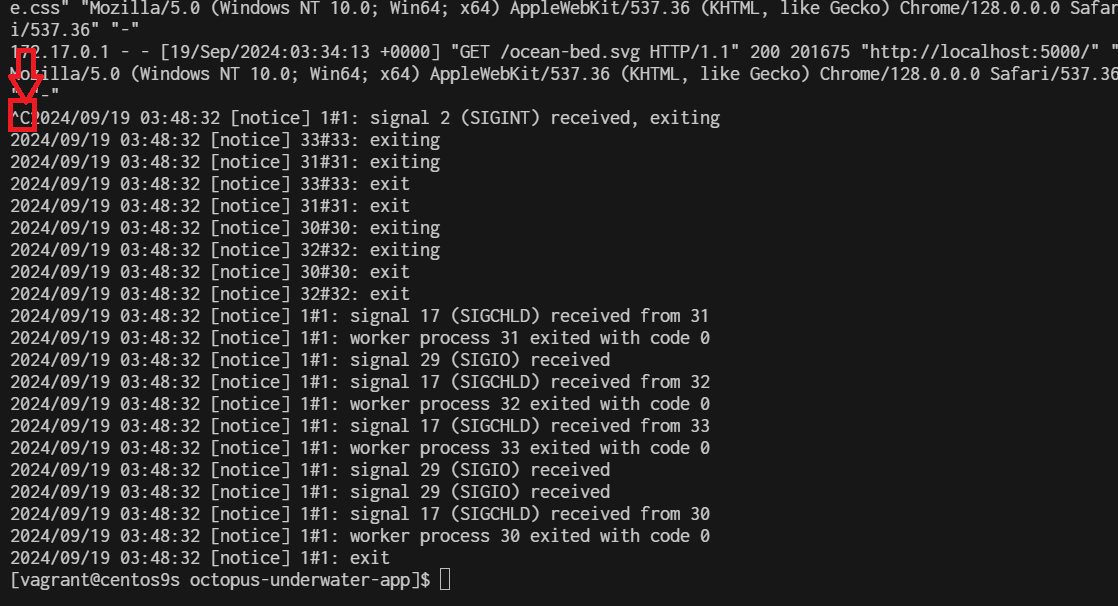

Steps to Run: Build and run the services:

docker compose up --build

Command usage:

- docker compose up --build: builds the images and starts the containers. Use it when you have changed your Dockerfiles or the application code and need to rebuild the images.

- docker compose up -d: starts the containers in detached mode (in the background) using the existing images. Compose builds an image only if it does not exist yet; it does not rebuild automatically when a Dockerfile, the application code or docker-compose.yml changes, so run docker compose up --build (or docker compose build) after making changes.

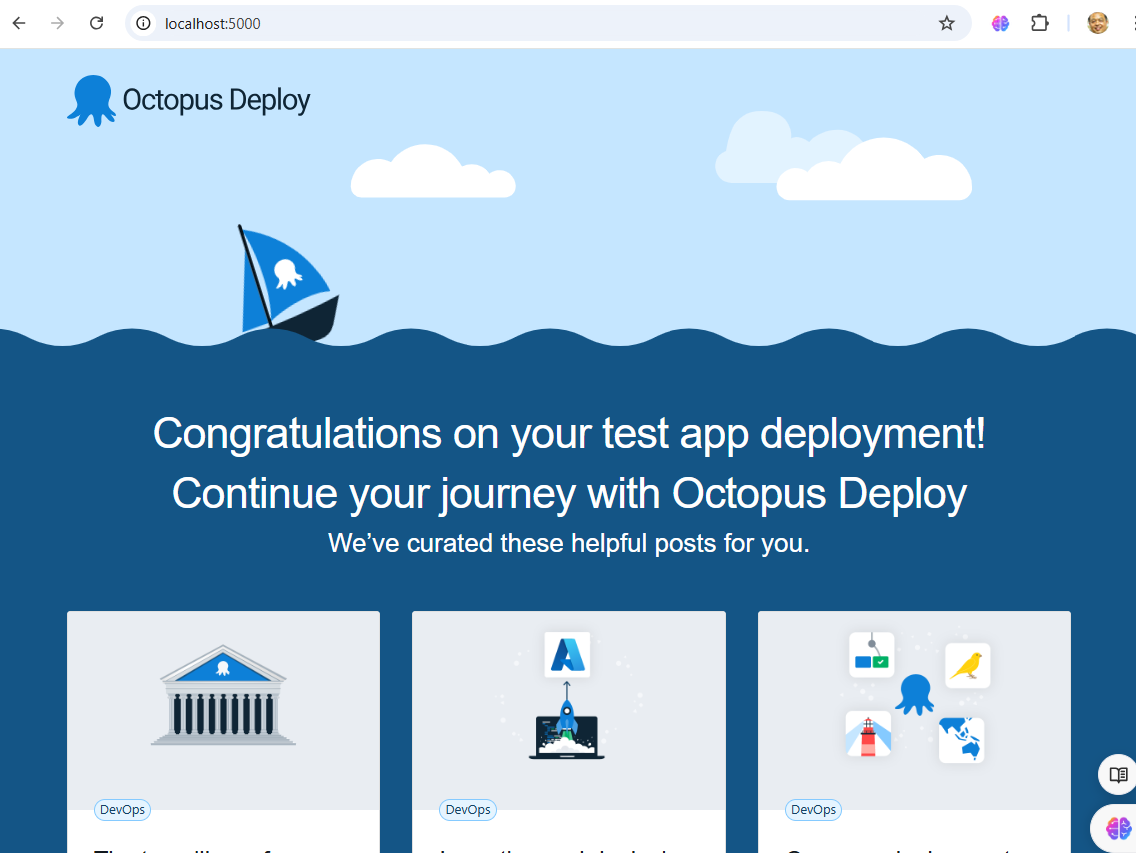

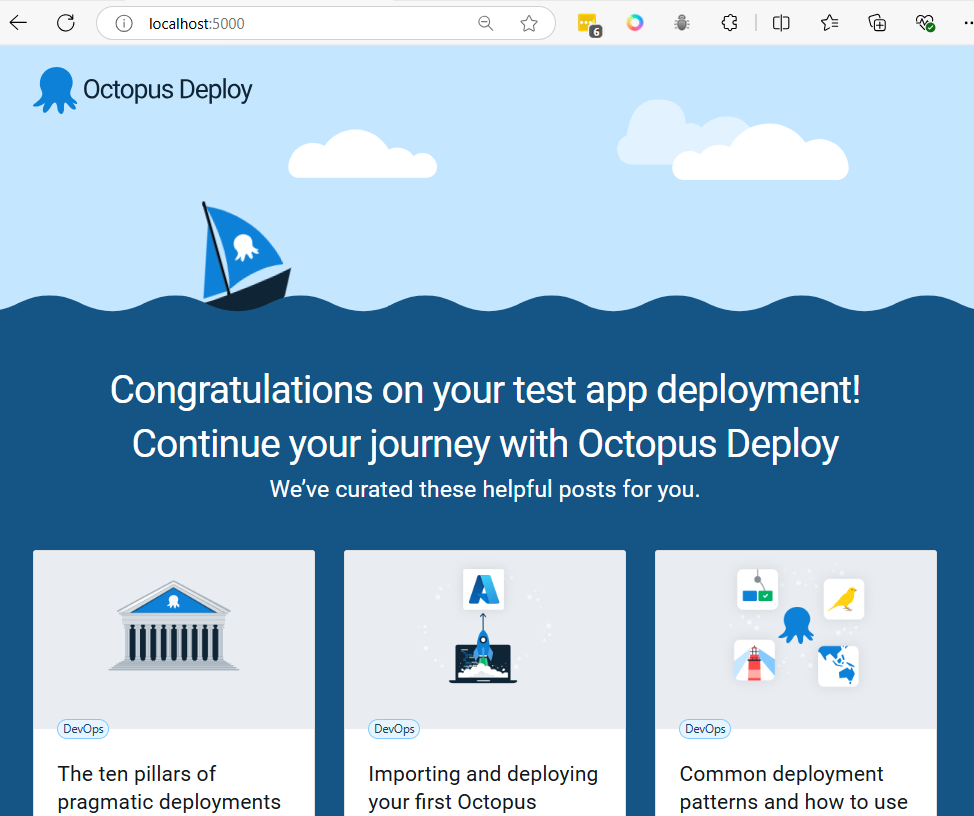

Access the applications:

Node.js app will be running at http://localhost:3000

Python app will be running at http://localhost:5000

Both applications will connect to the PostgreSQL database using the same credentials.
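Because depends_on only controls start order, an application can start before PostgreSQL accepts connections; node-app, for example, calls client.connect() once at startup and only logs an error if that fails. A common workaround is to wait for the database port before connecting. A minimal Python sketch, assuming an open TCP port is a good enough readiness signal (PostgreSQL may still reject logins briefly while it initializes, so a stricter check would attempt a real connection); wait_for_port is a hypothetical helper.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, interval: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or the timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect means something is listening on the port
            with socket.create_connection((host, port), timeout=interval):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False
            time.sleep(interval)


# Inside the python-app container, the service name "postgres" resolves on app_network:
# if wait_for_port("postgres", 5432):
#     conn = connect_db()
```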

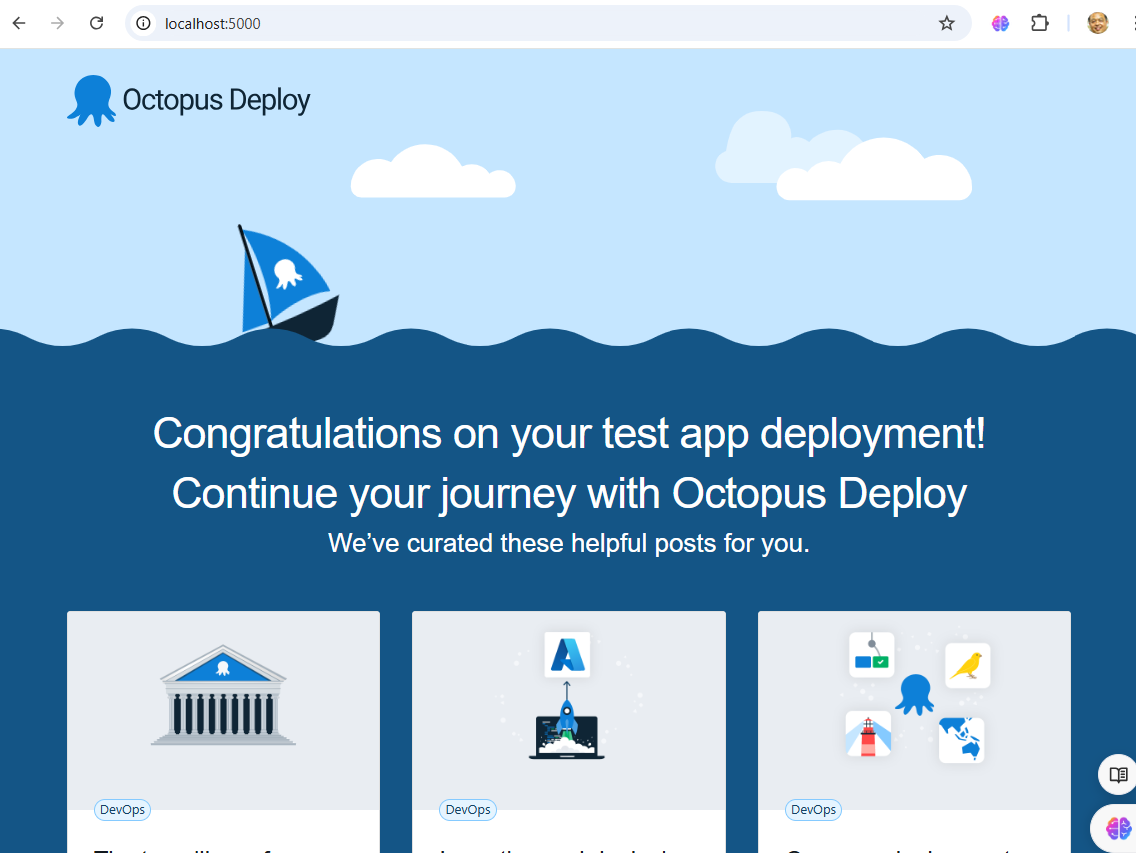

- Stop the application and go back to the console:

- Press Ctrl+C to stop

- Then run:

docker compose down

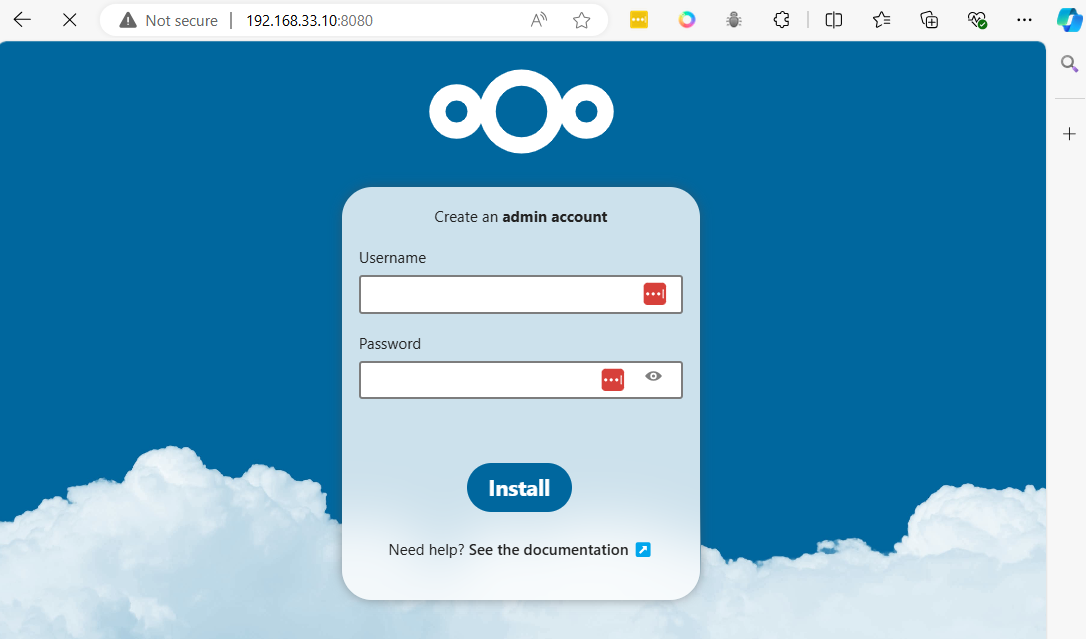

NextCloud Container

cd ~

mkdir nextcloud

cd nextcloud

cat <<EOF | tee docker-compose.yml
services:
  nextcloud_db:
    # This could be a specific version like mariadb:10.6
    image: mariadb
    restart: always
    command: --transaction-isolation=READ-COMMITTED --binlog-format=ROW
    networks:
      - cloudnet
    volumes:
      - db:/var/lib/mysql
    environment:
      - MYSQL_RANDOM_ROOT_PASSWORD=true
      - MYSQL_PASSWORD=PASSWORD
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud

  nextcloud:
    # This could be just 'nextcloud' to get the latest version
    image: nextcloud:28-apache
    restart: always
    networks:
      - cloudnet
    ports:
      - 8080:80
    volumes:
      - nextcloud:/var/www/html
    environment:
      - NEXTCLOUD_DATA_DIR=/var/www/html/data
      # Must be the same value as MYSQL_PASSWORD in nextcloud_db above
      - MYSQL_PASSWORD=PASSWORD
      - MYSQL_DATABASE=nextcloud
      - MYSQL_USER=nextcloud
      - MYSQL_HOST=nextcloud_db

volumes:
  nextcloud:
  db:

networks:
  cloudnet:
    name: cloudnet
    driver: bridge
EOF

cat docker-compose.yml

docker compose up -d

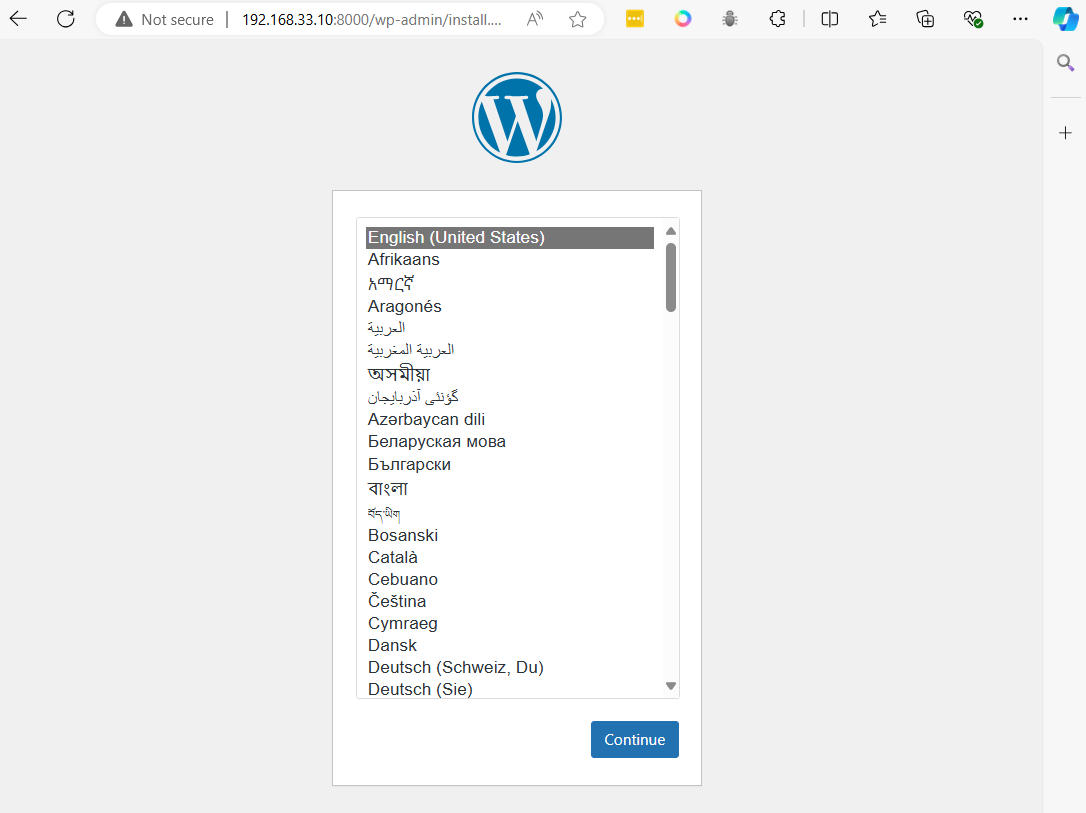

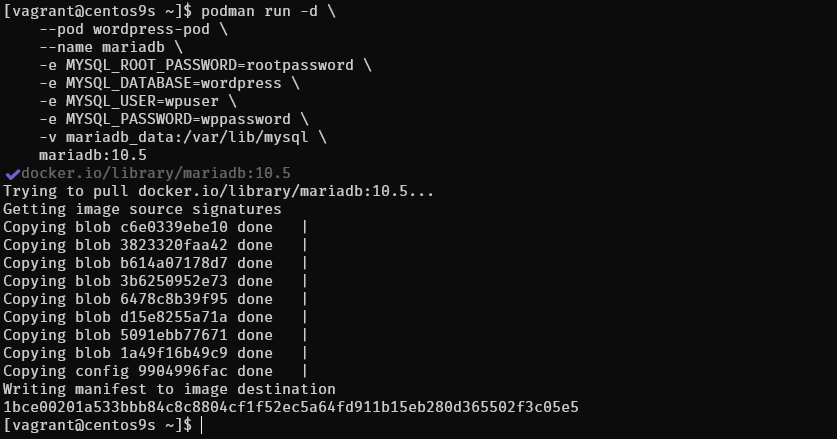

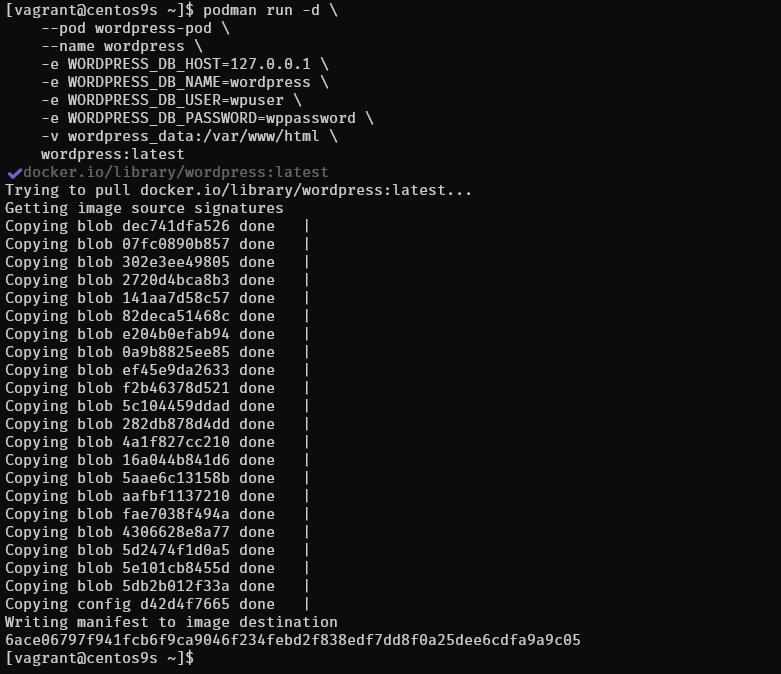

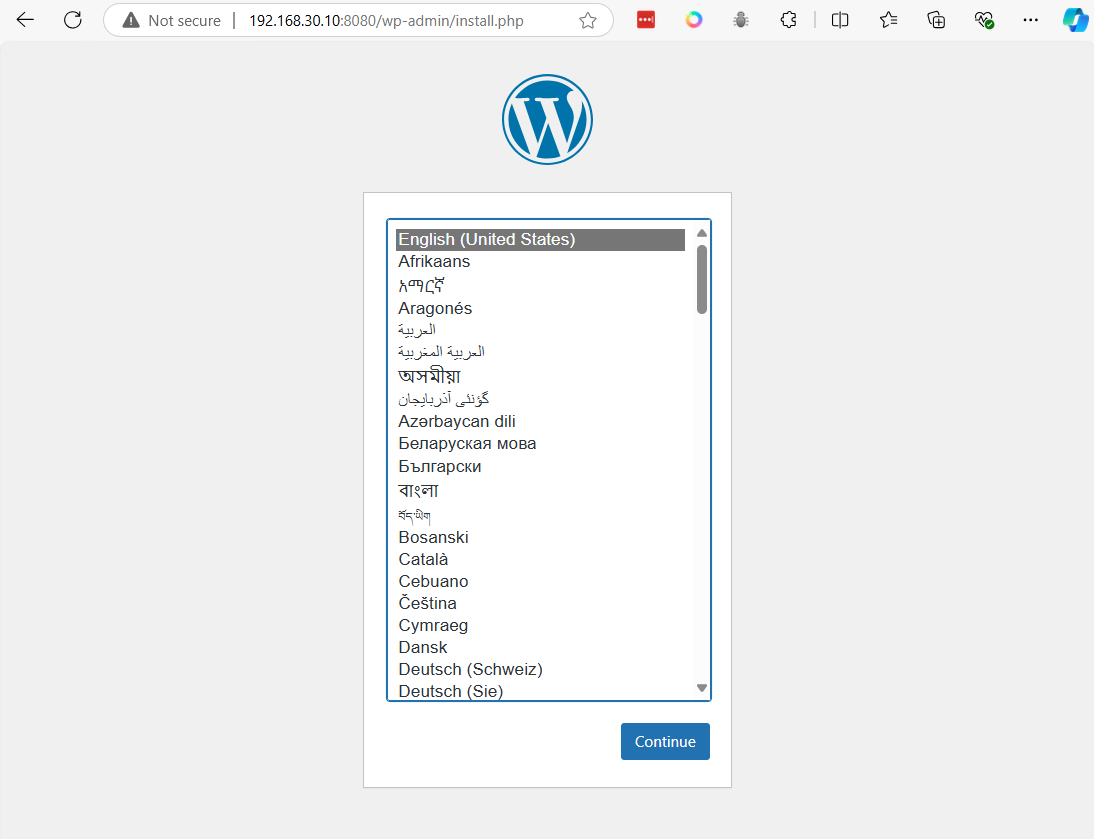

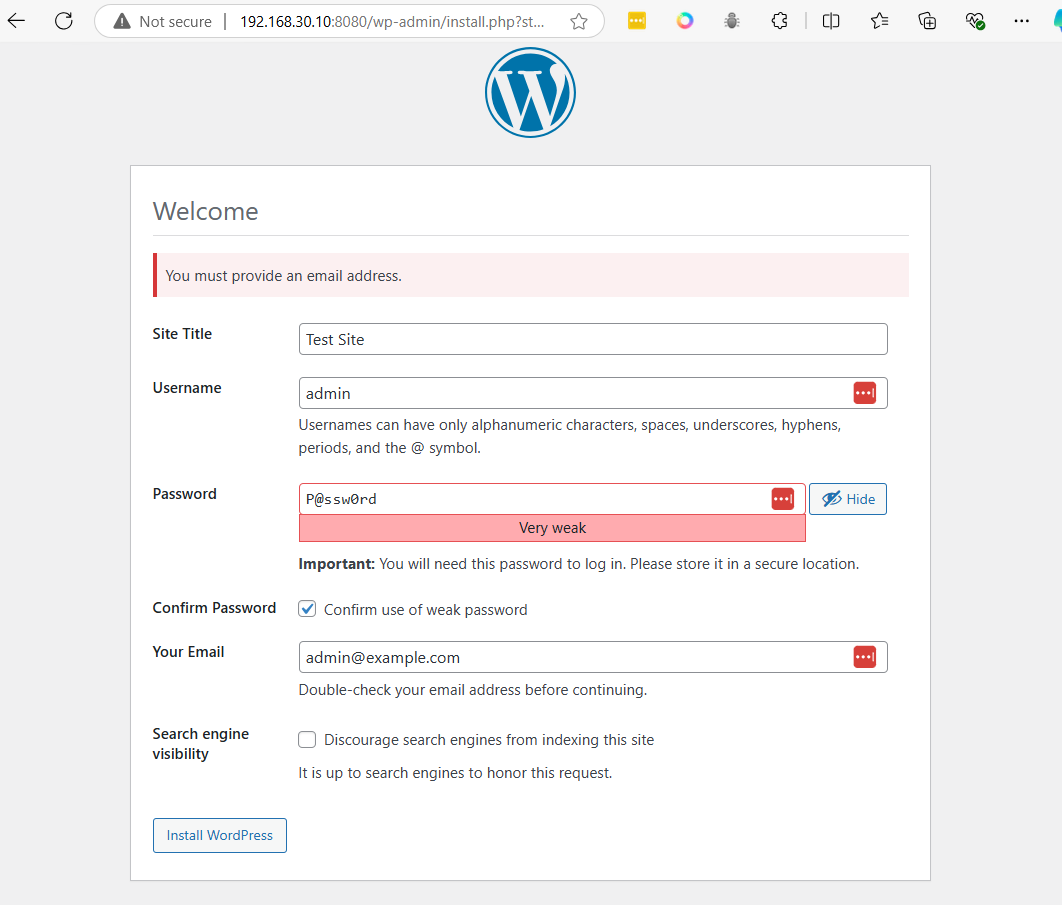

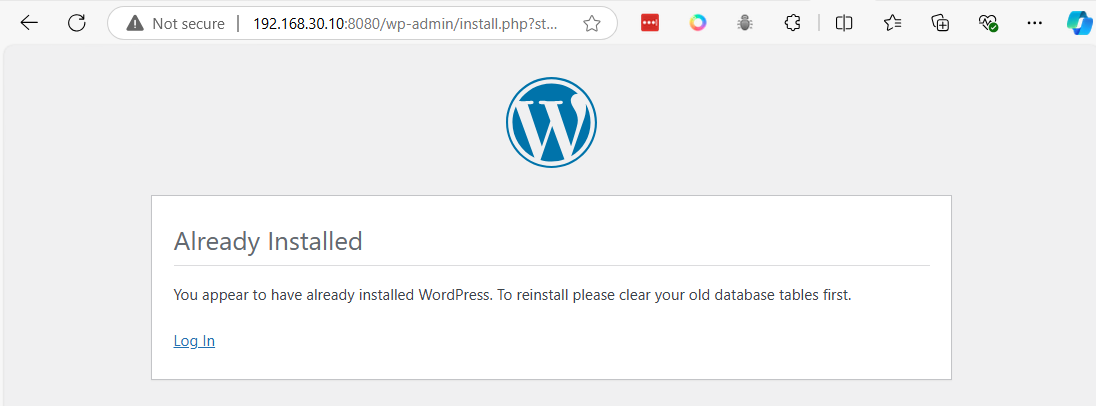

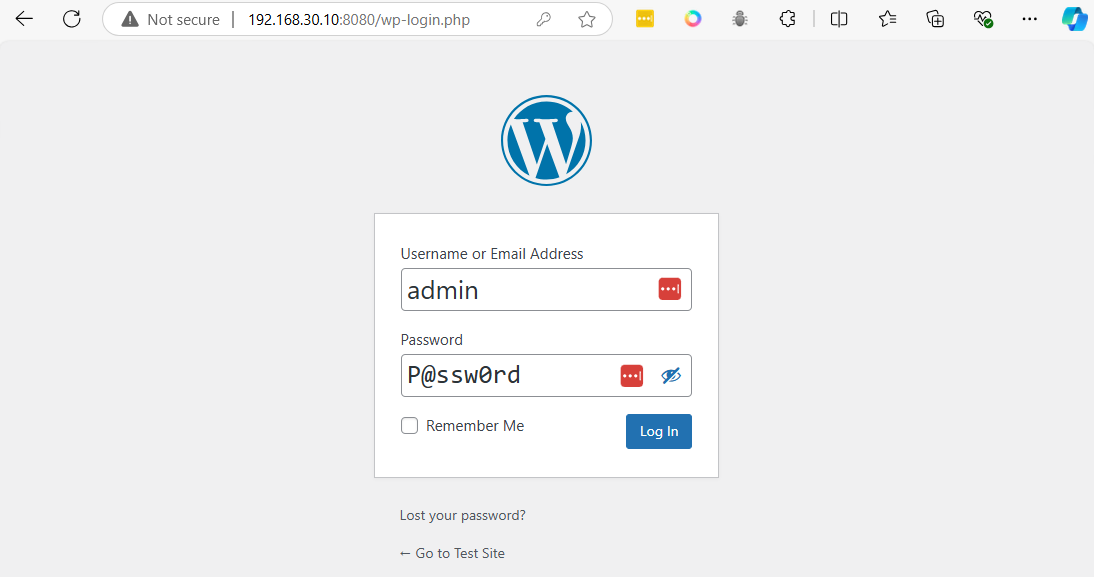

Wordpress Container

cd ~

mkdir wordpress

cd wordpress

Copy the following into the terminal to create docker-compose.yml:

cat <<EOF | tee docker-compose.yml
services:
  db:
    image: mysql
    volumes:
      - db_data:/var/lib/mysql
    restart: always
    environment:
      MYSQL_ROOT_PASSWORD: rootpassword
      MYSQL_DATABASE: wordpress
      MYSQL_USER: wordpress
      MYSQL_PASSWORD: wordpress

  wordpress:
    depends_on:
      - db
    image: wordpress:latest
    ports:
      - "8000:80"
    restart: always
    environment:
      WORDPRESS_DB_HOST: db:3306
      WORDPRESS_DB_USER: wordpress
      WORDPRESS_DB_PASSWORD: wordpress
      WORDPRESS_DB_NAME: wordpress

volumes:
  db_data:
EOF

cat docker-compose.yml

docker compose up -d

docker compose stop

docker compose down

Python project

Common instructions

Some of the most common instructions in a Dockerfile include:

- FROM <image>: specifies the base image that the build will extend.

- WORKDIR <path>: specifies the "working directory", the path in the image where files will be copied and commands will be executed.

- COPY <host-path> <image-path>: tells the builder to copy files from the host and put them into the container image.

- RUN <command>: tells the builder to run the specified command.

- ENV <name> <value>: sets an environment variable that a running container will use.

- EXPOSE <port-number>: sets configuration on the image that indicates a port the image would like to expose.

- USER <user-or-uid>: sets the default user for all subsequent instructions.

- CMD ["<command>", "<arg1>"]: sets the default command a container using this image will run.

SSH into the Vagrant VM

vagrant ssh

Check the Python installation and install pip and tree:

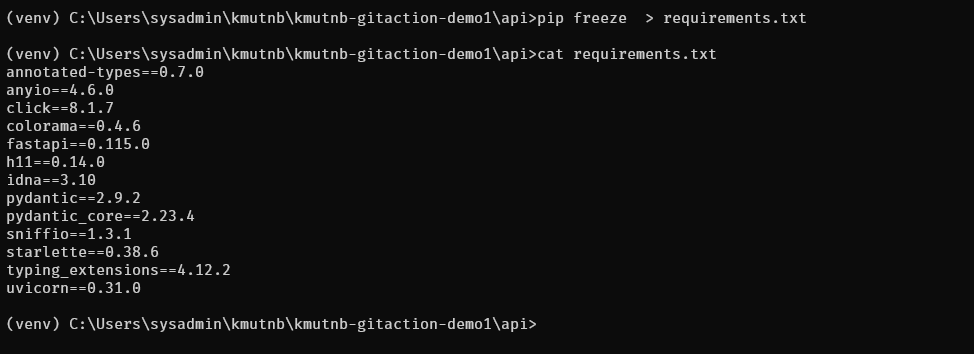

python --version

sudo dnf install python-pip

sudo dnf install tree

Create project

$ cd

$ mkdir week3_python

$ cd week3_python

$ mkdir src

$ python -m venv myenv

$ source myenv/bin/activate

(myenv) $ pip install flask

(myenv) $ pip freeze > requirements.txt

(myenv) $ cat requirements.txt

- Create main.py

cat <<EOF | tee src/main.py
from flask import Flask

server = Flask(__name__)


@server.route("/")
def index():
    return "Hello World!"


if __name__ == "__main__":
    server.run(host='0.0.0.0')
EOF

- Test Application

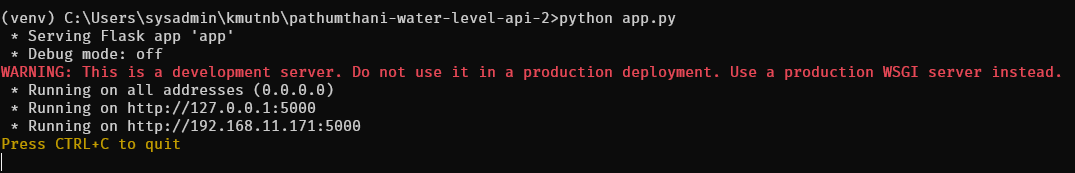

(myenv) $ python src/main.py

Result:

* Serving Flask app 'main'

* Debug mode: off

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://10.0.2.15:5000

Press CTRL+C to quit

Stop the server with Ctrl+C.

- Leave the Python virtual environment

(myenv) $ deactivate

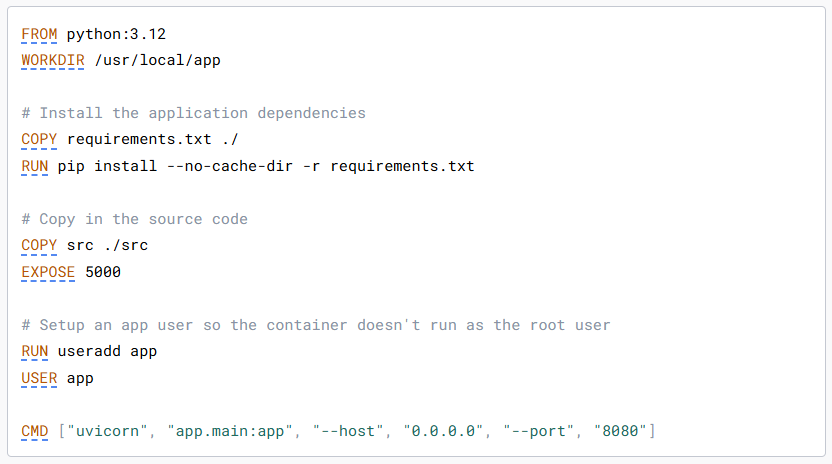

- Create Dockerfile with python image https://hub.docker.com/_/python

cat <<EOF | tee Dockerfile

FROM python

WORKDIR /code

COPY requirements.txt .

RUN pip install --no-cache-dir -r requirements.txt

# Copy in the source code

COPY src/ .

EXPOSE 5000

# Setup an app user so the container doesn't run as the root user

RUN useradd -m app

USER app

CMD ["python", "main.py"]

EOF

- Project Structure

$ tree -L 2 .
.
├── Dockerfile
├── myenv
│   ├── bin
│   ├── include
│   ├── lib
│   ├── lib64 -> lib
│   └── pyvenv.cfg
├── requirements.txt
└── src
    └── main.py

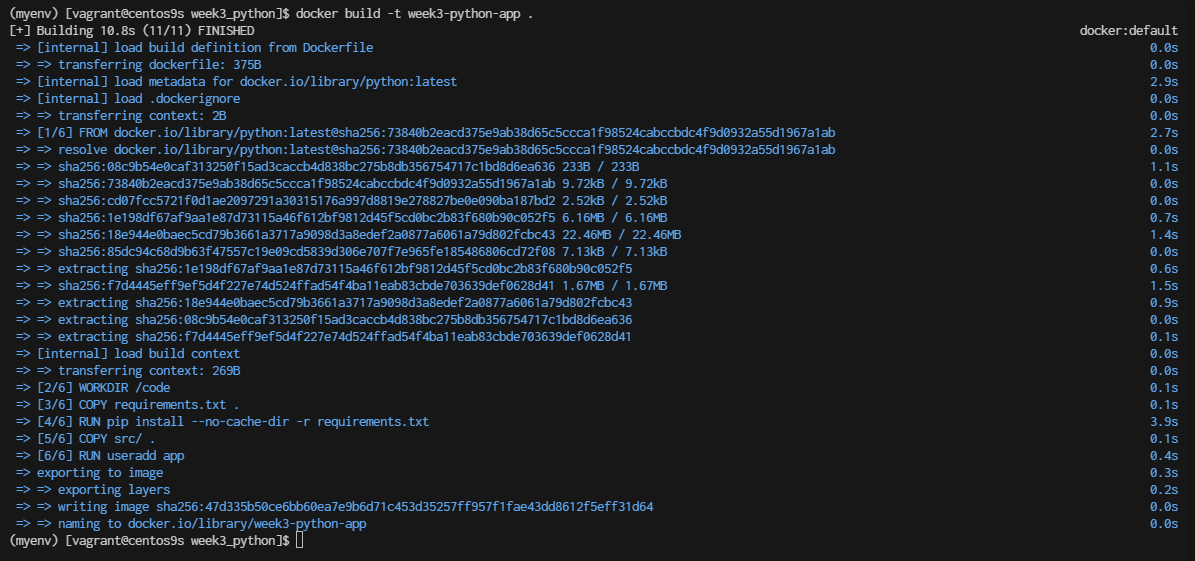

- Create Image

docker build -t week3-python-app .

- Check image

$ docker images

$ docker run -it week3-python-app bash

app@12ec39dcd0fc:/code$ ls

main.py requirements.txt

- Run docker container

$ docker run -d -p 5000:5000 --name week3-app week3-python-app

f9bb95ba424167586ad36d54a8b7a9bfc643e6515efb8328d915c84584914c74

$ ss -tulpn | grep 5000

tcp LISTEN 0 4096 0.0.0.0:5000 0.0.0.0:*

- monitor log

$ docker logs week3-app

Result:

* Serving Flask app 'main'

* Debug mode: off

WARNING: This is a development server. Do not use it in a production deployment. Use a production WSGI server instead.

* Running on all addresses (0.0.0.0)

* Running on http://127.0.0.1:5000

* Running on http://172.17.0.2:5000

Press CTRL+C to quit

- stop container

$ docker container stop week3-app

$ docker rm week3-app

- Create Docker compose

cat <<EOF | tee docker-compose.yml
services:
  week3-app:
    container_name: week3-app-compose
    image: myreponame/week3-python-app:latest
    ports:
      - "5000:5000"
    build:
      context: .
      dockerfile: Dockerfile
EOF

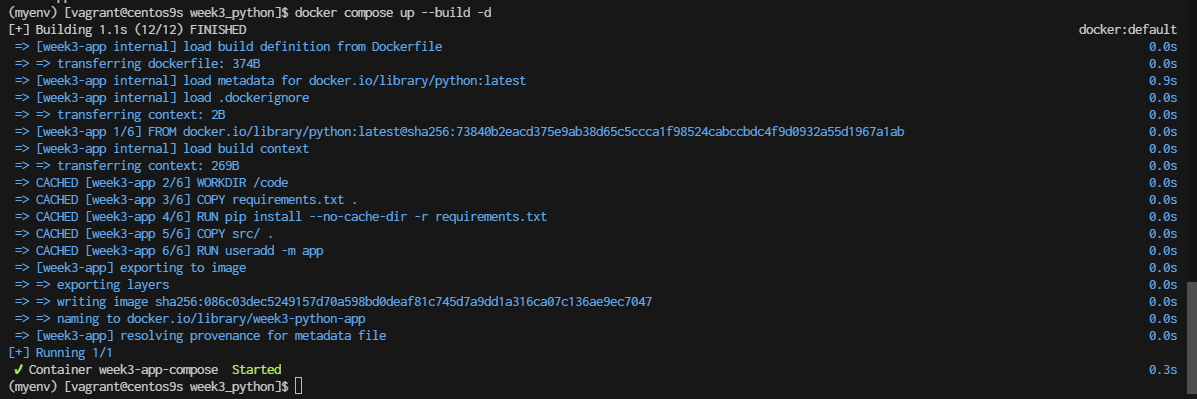

- Verify Build and Run:

Make sure the Dockerfile builds successfully by running:

docker compose up --build -d

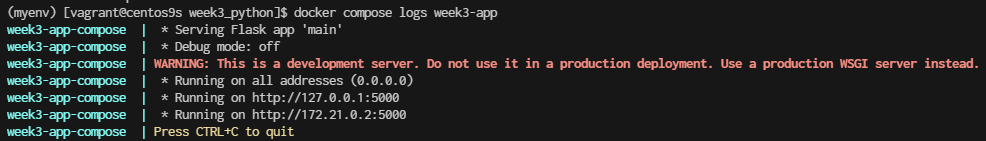

- Check the logs by service name (useful if the container stops):

$ docker compose logs week3-app

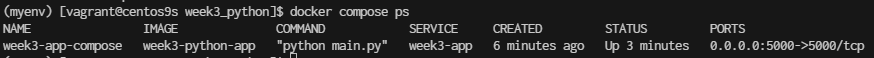

- list docker compose

$ docker compose ps

- Delete images

$ docker rmi week3-python-app:latest

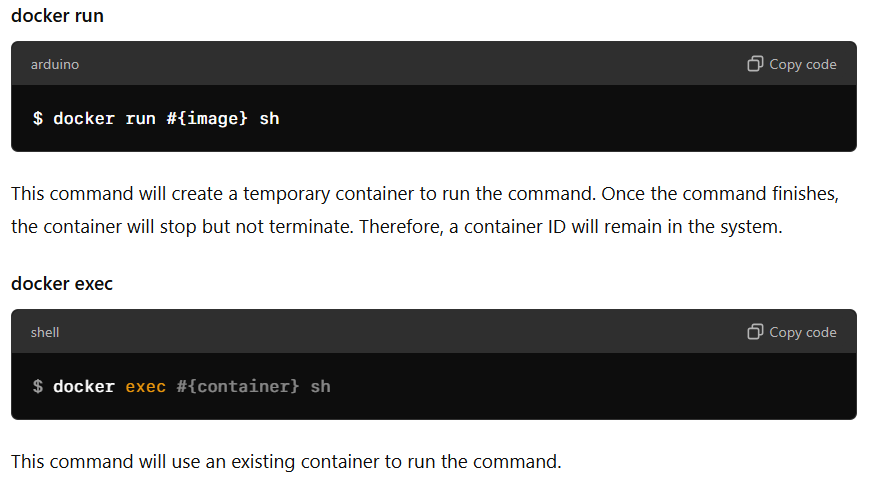

Compare Run and Exec

- Stop the Docker Compose stack before continuing

$ docker compose down

Run another python App

cat <<EOF | tee src/main2.py
from flask import Flask, request, jsonify

app = Flask(__name__)


@app.route("/greet", methods=["GET"])
def greet():
    return "Hello! Welcome to the API."


@app.route("/echo", methods=["POST"])
def echo():
    data = request.get_json()
    return jsonify(data)


@app.route("/hello/<name>", methods=["GET"])
def hello(name):
    return f"Hello, {name}!"


if __name__ == "__main__":
    app.run(host='0.0.0.0', port=5000)
EOF

- enable firewall port 5000

sudo firewall-cmd --permanent --add-port=5000/tcp

sudo firewall-cmd --reload

$ source myenv/bin/activate

(myenv) $ python src/main2.py

1 Test

curl http://192.168.33.10:5000/greet

Hello! Welcome to the API.

2 Test

curl -X POST http://192.168.33.10:5000/echo -H "Content-Type: application/json" -d '{"key": "1234"}'
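The same POST request can be sent from Python's standard library, which is handy for scripted tests. A minimal sketch; the helper names are hypothetical, and 192.168.33.10 is the Vagrant VM address used in this lab. Building the request is separated from sending it so it can be inspected without a running server.

```python
import json
import urllib.request


def build_json_request(url: str, data: dict) -> urllib.request.Request:
    """Build a POST request with a JSON body, like curl -X POST -H ... -d ..."""
    body = json.dumps(data).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def post_json(url: str, data: dict, timeout: float = 5.0) -> dict:
    """Send the request and decode the JSON response (/echo returns the same JSON)."""
    with urllib.request.urlopen(build_json_request(url, data), timeout=timeout) as resp:
        return json.loads(resp.read().decode("utf-8"))


# Usage, with main2.py running on the VM:
# post_json("http://192.168.33.10:5000/echo", {"key": "1234"})
```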

Express.js project

Prerequisites

- Create project folder

cd

mkdir express

cd express

- install node js package

sudo dnf install nodejs

- Step 1: Setting up the Node.js and Express.js Application

Create a simple Node.js and Express.js application. Create a file named app.js and add the following code:

cat <<EOF | tee app.js

const express = require("express");

const app = express();

app.get("/", function(req, res) {

return res.send("Hello World");

});

app.listen(3000, function(){

console.log('Listening on port 3000');

});

EOF

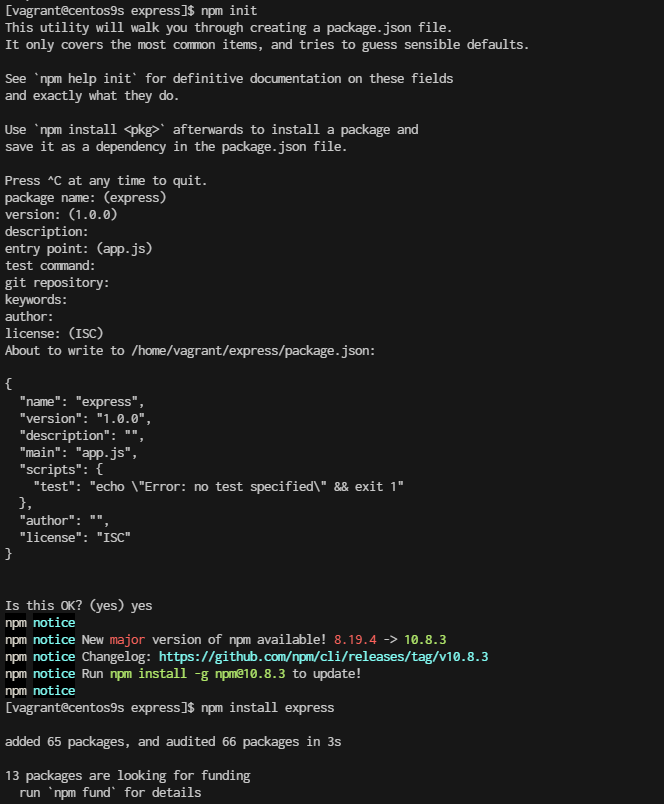

$ npm init

$ npm install express

$ node app.js

Listening on port 3000

Here is a brief rundown of what each part of the server code does:

- Import Express: const express = require("express"); imports the Express library.

- Create an Express app: const app = express(); creates an instance of an Express application.

- Define a route: app.get("/", function(req, res) { return res.send("Hello World"); }); sets up a route for the root URL (/). When someone accesses this URL, the server responds with "Hello World".

- Start the server: app.listen(3000, function(){ console.log('Listening on port 3000'); }); tells the application to listen on port 3000 and logs a message to the console when the server is running.

- Step 2: Create a Dockerfile

[vagrant@centos9s express]$ ls -l

total 64

-rw-r--r--. 1 vagrant vagrant 211 Sep 12 01:12 app.js

-rw-r--r--. 1 vagrant vagrant 106 Sep 12 01:32 Dockerfile

drwxr-xr-x. 66 vagrant vagrant 4096 Sep 12 01:18 node_modules

-rw-r--r--. 1 vagrant vagrant 251 Sep 12 01:18 package.json

-rw-r--r--. 1 vagrant vagrant 46645 Sep 12 01:18 package-lock.json

Next, create a Dockerfile to specify how to build our Docker image. Create a file named Dockerfile in the same directory as your app.js file and add the following content:

- Create Dockerfile

cat <<EOF | tee Dockerfile

FROM node:22-alpine

# Set the working directory in the container

WORKDIR /app

# Copy package.json and package-lock.json if available

COPY package.json package-lock.json ./

# Install dependencies

RUN npm install

# Copy the rest of the application code

COPY . .

# Expose the port that the app runs on

EXPOSE 3000

# Command to run the application

CMD ["node", "app.js"]

EOF

- Create .dockerignore: consider adding a .dockerignore file to avoid including unnecessary files in the Docker image:

cat <<EOF | tee .dockerignore

node_modules

npm-debug.log

Dockerfile

.dockerignore

.git

EOF

- Step 3: Building the Docker Image Now that we have our Dockerfile ready, let’s build the Docker image. Open a terminal, navigate to the directory containing your Dockerfile, and run the following command:

$ docker build -t week3_node-application .

- Step 4: Run Docker Container

$ docker run -p 3000:3000 week3_node-application

- Remove the containers

$ docker stop $(docker ps -q)

$ docker rm $(docker ps -a -q)

- Step 5: Create Docker Compose

To use Docker Compose with your Node.js application, create a docker-compose.yml file. This file lets you define and run multi-container Docker applications; since this application runs in a single container, the file defines just one service.

cat <<EOF | tee docker-compose.yml
services:
  app:
    image: my-node-app
    build:
      context: .
      dockerfile: Dockerfile
    ports:
      - "3000:3000"
    volumes:
      - .:/app
    environment:
      NODE_ENV: development
EOF

- services: This section defines the services (containers) for your application.

- app: This is the name of the service. You can name it whatever you like.

- image: my-node-app: Because a build section is also present, this is the name given to the image that Docker Compose builds.

- build: This section is used to build the Docker image. context: . indicates the directory where the Dockerfile is located. dockerfile: Dockerfile specifies the name of the Dockerfile (it defaults to Dockerfile if not specified).

- ports: This maps port 3000 on your host machine to port 3000 in the container, allowing you to access the application via http://localhost:3000.

- volumes: This mounts your project directory (.) to /app in the container. This is useful for development: code changes on the host are visible inside the container without rebuilding the image (you still need to restart the Node process to pick them up). For production, you might want to omit this and use the image as-is.

- environment: This sets environment variables for your container. Here, NODE_ENV is set to development.

Building and Running with Docker Compose

To build and start your application using Docker Compose:

- Build and start services:

docker compose up --build

This command builds (or rebuilds) the Docker image and starts the container as specified in your docker-compose.yml.

- Stop services:

docker compose down

This command stops and removes the containers defined in your docker-compose.yml file.

Why Docker Compose

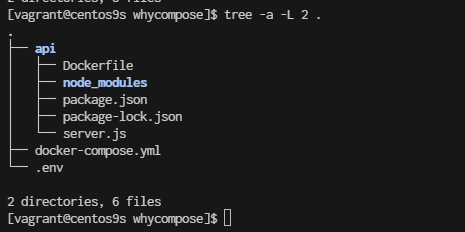

- Structure Folder

cd

mkdir whycompose

cd whycompose

mkdir api

cd api

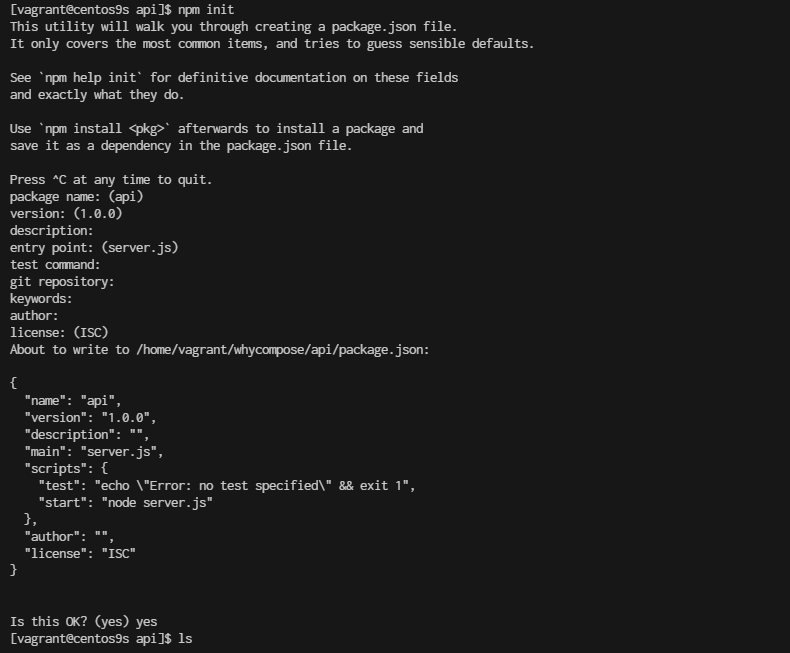

npm init

- Create project

- create file server.js

vim server.js

const express = require('express');

const cors = require('cors');

const { MongoClient } = require('mongodb');

const bodyParser = require('body-parser');

const app = express();

const mongoClientOptions = { useNewUrlParser: true, useUnifiedTopology: true };

const databaseName = 'my-db';

const port = 3000;

app.use(cors());

app.use(bodyParser.urlencoded({ extended: true }));

app.use(bodyParser.json());

app.post('/add-user', async (req, res) => {

const userObj = req.body;

const dbUrl = process.env.DB_URL;

try {

const client = await MongoClient.connect(dbUrl, mongoClientOptions);

const db = client.db(databaseName);

// Define the newvalues object with $set operator

const newvalues = { $set: userObj };

// Update or insert the document

const result = await db.collection('users').updateOne(

{ userName: userObj.userName }, // Query to find the document

newvalues, // Update the document

{ upsert: true } // Create the document if it does not exist

);

client.close();

// Send success response

res.status(200).json({ message: 'User updated or added successfully', result });

} catch (err) {

console.error('Error updating user:', err);

res.status(500).send('Internal Server Error');

}

});

app.get('/get-user', async (req, res) => {

const query = req.query;

const dbUrl = process.env.DB_URL;

try {

const client = await MongoClient.connect(dbUrl, mongoClientOptions);

const db = client.db(databaseName);

const result = await db.collection('users').findOne(query);

client.close();

// Send user data or empty object

res.status(200).json(result || {});

} catch (err) {

console.error('Error fetching user:', err);

res.status(500).send('Internal Server Error');

}

});

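// Health check endpoint used by the healthcheck in docker-compose.yml

app.get('/health', (req, res) => {

res.status(200).send('OK');

});

app.listen(port, () => {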

console.log(`App listening on port ${port}!`);

});

Install the Required Packages

Next, you'll need to install the required npm packages. Based on your server.js file, you need the following packages:

- express: A web framework for Node.js.

- cors: A package for enabling Cross-Origin Resource Sharing (CORS).

- mongodb: The MongoDB driver for Node.js.

- body-parser: Middleware for parsing request bodies.

$ npm install express cors mongodb body-parser

- Create Dockerfile

cat <<EOF | tee Dockerfile

FROM node:22-alpine

# Import a Nodejs image that runs on top of an Alpine image.

RUN mkdir -p /home/app

# This command will create a subdirectory called /app in the /home directory of the Alpine image

WORKDIR /home/app

# This command will set the default directory as /home/app.

# Hence, the next commands will start executing from the /home/app directory of the Alpine image.

COPY package*.json ./

# To copy both package.json and package-lock.json to the working directory (/home/app) of the Alpine image.

# Prior to copying the entire current working directory, we copy the package.json file to the working directory (/home/app) of the Alpine image. This allows to take advantage of any cached layers.

RUN npm install

# This will create a node_modules folder in /home/app and

# install all the dependencies specified in the package.json file.

COPY . .

# Here “.” represents the current working directory.

# This command will copy all the files in the current directory to the working directory (/home/app) of the Alpine image.

EXPOSE 3000

# Make the application available on port 3000. By doing this, you can access the Nodejs application via port 3000.

CMD ["npm", "start"]

# One important thing to notice here is that "RUN" executes while the image is being built,

# and "CMD" executes only when a container is started from the finished image.

# A Dockerfile may contain many "RUN" instructions, but if it has more than one "CMD", only the last one takes effect.

EOF
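Why copy package*.json before the rest of the code? A simplified Python model of the build cache helps: each layer's cache key depends on the previous layer's key, the instruction text and, for COPY, the contents of the copied files. Real BuildKit uses its own checksums of file contents and metadata; this sketch only hashes names and contents, and the step list simply mirrors the Dockerfile above (with node_modules excluded by .dockerignore).

```python
import hashlib

Step = tuple[str, list[str]]  # (instruction, files that instruction copies)


def layer_keys(steps: list[Step], files: dict[str, str]) -> list[str]:
    """Chain a cache key per layer; a changed key invalidates every later layer."""
    keys: list[str] = []
    parent = ""
    for instruction, copied in steps:
        h = hashlib.sha256()
        h.update(parent.encode())
        h.update(b"\0" + instruction.encode())
        for name in sorted(copied):
            # COPY layers also depend on the content of every file they copy
            h.update(b"\0" + name.encode() + b"\0" + files[name].encode())
        parent = h.hexdigest()
        keys.append(parent)
    return keys


steps: list[Step] = [
    ("FROM node:22-alpine", []),
    ("RUN mkdir -p /home/app", []),
    ("WORKDIR /home/app", []),
    ("COPY package*.json ./", ["package.json", "package-lock.json"]),
    ("RUN npm install", []),
    ("COPY . .", ["Dockerfile", "package.json", "package-lock.json", "server.js"]),
]
before = {"Dockerfile": "FROM node:22-alpine", "package.json": '{"name": "api"}',
          "package-lock.json": "{}", "server.js": "console.log('v1');"}
after = {**before, "server.js": "console.log('v2');"}  # only application code changed

old, new = layer_keys(steps, before), layer_keys(steps, after)
reused = sum(a == b for a, b in zip(old, new))
print(f"{reused} of {len(steps)} layers reused")  # 5 of 6 layers reused
```

Editing server.js only invalidates the final COPY layer, so the slow npm install layer is reused; changing package.json would invalidate npm install as well.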

- Create .dockerignore

cat <<EOF | tee .dockerignore

node_modules

EOF

Go back to the whycompose folder

cd ..

- create docker-compose.yml

cat <<'EOF' | tee docker-compose.yml
services:
  api:
    build:
      context: ./api
      dockerfile: Dockerfile
    ports:
      - "3000:3000"
    environment:
      DB_URL: ${DB_URL}
    networks:
      - my-network
    depends_on:
      - mongodb
    healthcheck:
      # node:22-alpine ships BusyBox wget but not curl
      test: ["CMD-SHELL", "wget -q -O /dev/null http://localhost:3000/health || exit 1"]
      interval: 30s
      retries: 3
      start_period: 30s
      timeout: 10s
    restart: unless-stopped

  mongodb:
    image: mongo:latest
    ports:
      - "27017:27017"
    environment:
      MONGO_INITDB_ROOT_USERNAME: ${MONGO_INITDB_ROOT_USERNAME}
      MONGO_INITDB_ROOT_PASSWORD: ${MONGO_INITDB_ROOT_PASSWORD}
    volumes:
      - mongo-data:/data/db
    networks:
      - my-network
    healthcheck:
      # MongoDB 6.0+ images ship mongosh instead of the legacy mongo shell
      test: ["CMD-SHELL", "mongosh --quiet --eval 'db.runCommand({ connectionStatus: 1 })' || exit 1"]
      interval: 30s
      retries: 3
      start_period: 30s
      timeout: 10s
    restart: unless-stopped

volumes:
  mongo-data:
    driver: local

networks:
  my-network:
    driver: bridge
EOF

Understand: docker compose

- Build configuration: context and dockerfile are defined explicitly in the build section for clarity.

- Environment variables: the mapping syntax (KEY: value) is used instead of the list syntax (- KEY=value). Both are valid, but the mapping form is easier to read.

- Health checks: both services are monitored. The api service requests a /health endpoint to verify that it is up and running (the API must implement this route, and curl must be available in its image), while the mongodb service runs a database command to check that the server responds.

- Dependencies: depends_on makes Compose start mongodb before api. The short form used here does not wait for MongoDB to be "ready", only for its container to start; to wait for the health check instead, use the long form with condition: service_healthy, or make the API retry its first connection.

- Restart policy: restart: unless-stopped restarts a service automatically whenever its container exits, and after a Docker daemon restart, unless you stopped it explicitly. This improves resilience.

- Networking: the custom network my-network uses the bridge driver to isolate the two services from other containers and to let them reach each other by service name (for example, mongodb).
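Because the short form of depends_on only orders container start-up, the API may try to connect before MongoDB accepts connections. One common remedy is a retry loop in the application. The Python sketch below polls a TCP port until it opens or a timeout expires; wait_for_port is a hypothetical helper, and the host name mongodb and port 27017 in the usage note are assumed from the compose file above. An open port does not prove that the database has finished initialising, so this complements rather than replaces the health check.

```python
import socket
import time


def wait_for_port(host: str, port: int, timeout: float = 30.0, delay: float = 1.0) -> bool:
    """Poll until a TCP connection to host:port succeeds or timeout expires."""
    deadline = time.monotonic() + timeout
    while True:
        try:
            # A successful connect only shows the port accepts connections.
            with socket.create_connection((host, port), timeout=delay):
                return True
        except OSError:
            if time.monotonic() >= deadline:
                return False  # give up: caller decides whether to exit or retry
            time.sleep(delay)
```

Inside the api container, a call such as wait_for_port("mongodb", 27017, timeout=60) would block for up to a minute before the first database connection is attempted.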

Additional Considerations:

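- .env file: create a .env file next to docker-compose.yml that defines the variables it references. Because setting MONGO_INITDB_ROOT_USERNAME enables authentication, DB_URL must include the same credentials (with authSource=admin, because the root user is created in the admin database):

cat <<EOF | tee .env

DB_URL=mongodb://yourusername:yourpassword@mongodb:27017/mydatabase?authSource=admin

MONGO_INITDB_ROOT_USERNAME=yourusername

MONGO_INITDB_ROOT_PASSWORD=yourpassword

EOF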

- Dockerfile for the API: make sure the Dockerfile in the ./api directory is set up correctly to build your application.

- Security: be careful with sensitive information such as the MongoDB password, and consider a secrets management tool for production environments.

- Volume management: monitor and manage your volumes (such as mongo-data) regularly to avoid excessive disk usage.
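Compose replaces ${DB_URL} and similar references in docker-compose.yml with values from the .env file in the project directory (or from the shell environment, which takes precedence). The Python sketch below imitates that substitution for simple KEY=VALUE lines using string.Template; load_env and interpolate are hypothetical names, quoting and default-value syntax such as ${VAR:-default} are not handled, and real Compose substitutes an empty string with a warning where this sketch raises KeyError. To see what Compose actually produces, run docker compose config, which prints the resolved configuration.

```python
from string import Template


def load_env(text: str) -> dict:
    """Parse simple KEY=VALUE lines, skipping blank lines and # comments."""
    env = {}
    for raw in text.splitlines():
        line = raw.strip()
        if not line or line.startswith("#") or "=" not in line:
            continue
        key, value = line.split("=", 1)  # split once: values may contain '='
        env[key.strip()] = value.strip()
    return env


def interpolate(compose_text: str, env: dict) -> str:
    """Replace ${VAR} references; a missing variable raises KeyError.

    As in Compose, $$ becomes a literal $.
    """
    return Template(compose_text).substitute(env)


env = load_env("DB_URL=mongodb://mongodb:27017/mydatabase\n")
print(interpolate("DB_URL: ${DB_URL}", env))
```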

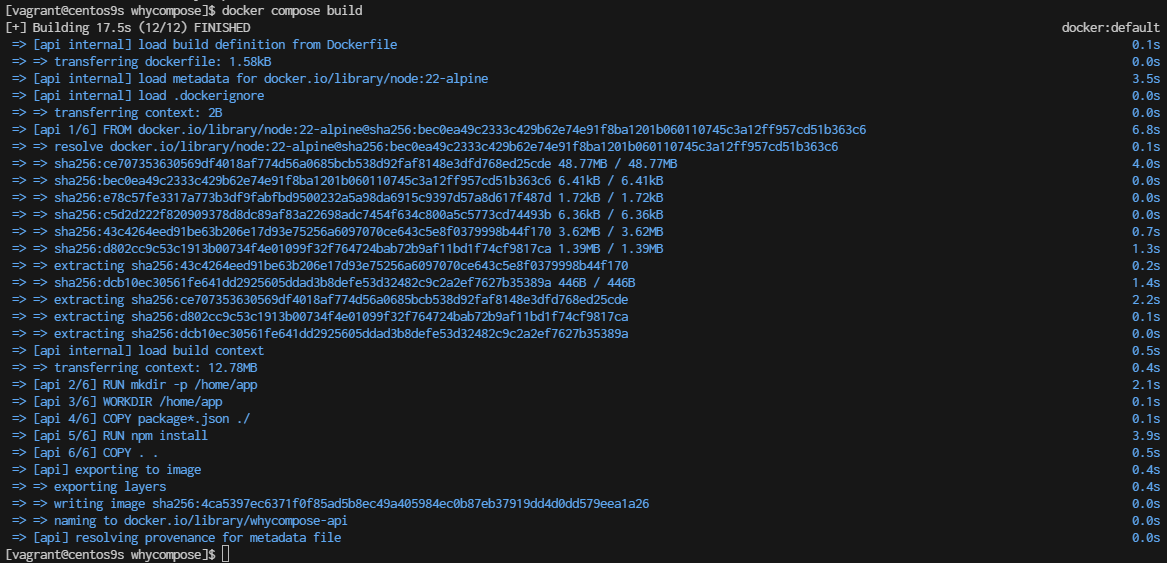

Build the Docker image

whycompose]$ docker compose build --no-cache

- Check docker image

whycompose]$ docker images

REPOSITORY TAG IMAGE ID CREATED SIZE

whycompose-api latest 4ca5397ec637 2 minutes ago 181MB

- Docker compose up

whycompose]$ docker compose up

Summary: Forcing a Rebuild with Docker Compose

When you use Docker Compose and want to force a rebuild of your services, even if Docker considers the current images up to date, you have several options. Some rebuild the images while still reusing cached layers where possible; others ignore the cache and rebuild everything from scratch.

1. Use the --no-cache Option

The --no-cache flag of docker compose build forces Docker to rebuild the images without using the layer cache:

docker compose build --no-cache

- --no-cache: Ignores the cache and runs every step of the Dockerfile from scratch.

2. Use the --build Flag with docker compose up

You can also rebuild by passing --build to docker compose up, which builds the images before starting the containers:

docker compose up --build

- --build: Builds the images before starting the containers. The layer cache is still used, so unchanged steps are not re-run.

3. Remove Existing Images

If you want to ensure that old images are not used, you can remove them manually before rebuilding. List and remove images with the following commands:

# List images

docker images

# Remove an image

docker rmi <image_id>

Alternatively, let Docker Compose remove the images used by your project:

docker compose down --rmi all

- --rmi all: Removes all images used by the services defined in docker-compose.yml, including pulled images such as mongo:latest. Use --rmi local to remove only images that have no custom tag.

4. Clean Up Build Cache

To clean up build cache that might interfere with forcing a rebuild, run:

docker builder prune

- docker builder prune: Removes dangling build cache. Add -a to remove all unused build cache, not just dangling cache.

5. Rebuild with --pull

If you also want to make sure you use the latest versions of the base images, add --pull:

docker compose build --pull --no-cache

- --pull: Always attempts to pull a newer version of the base image.

- --no-cache: Ignores the cache and builds from scratch.

Summary

To force a rebuild of your Docker Compose services:

- Ignore Cache: Use docker compose build --no-cache.

- Rebuild and Start: Use docker compose up --build.

- Remove Images: Use docker compose down --rmi all or remove images manually.

- Clean Build Cache: Use docker builder prune.

- Pull Latest Images: Use docker compose build --pull --no-cache.

These options give you flexibility depending on whether you want to rebuild from scratch, update base images, or clean up old images and cache.
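Why these options matter becomes clearer with a toy model of layer caching. In the hypothetical Python sketch below, each instruction's cache key chains the previous key, the instruction text and, for COPY, the copied files' contents; the parent argument stands in for the base image. This is not BuildKit's actual algorithm, but it shows the behaviour the options above work around: editing a copied file invalidates that layer and everything after it, a newer base image (what --pull fetches) invalidates every layer, and --no-cache simply ignores the keys.

```python
import hashlib


def layer_keys(instructions, files, parent="base-image"):
    """Return one cache key per instruction, each chained to the previous key.

    Toy model only: COPY keys also hash the copied files; other
    instructions are keyed by their text alone.
    """
    keys = []
    key = parent  # stands in for the base image identity
    for instr in instructions:
        h = hashlib.sha256()
        for piece in (key, instr):
            h.update(piece.encode() + b"\0")
        if instr.split(" ", 1)[0].upper() == "COPY":
            for name in sorted(files):
                h.update(name.encode() + b"\0" + files[name].encode() + b"\0")
        key = h.hexdigest()
        keys.append(key)
    return keys


def cache_hits(old_keys, new_keys):
    """Count leading layers whose keys match, i.e. layers reusable from cache."""
    hits = 0
    for old, new in zip(old_keys, new_keys):
        if old != new:
            break  # first miss: this and every later layer is rebuilt
        hits += 1
    return hits


steps = ["WORKDIR /usr/src/app", "COPY . .", "RUN pip install --no-cache-dir -r requirements.txt"]
before = layer_keys(steps, {"app.py": "v1"})
after = layer_keys(steps, {"app.py": "v2"})
print(cache_hits(before, after))
```

Note what the model does not see: a RUN step such as pip install is keyed only by its command text, so a cached layer is reused even if newer packages have been published. That is the usual reason to rebuild with --no-cache.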

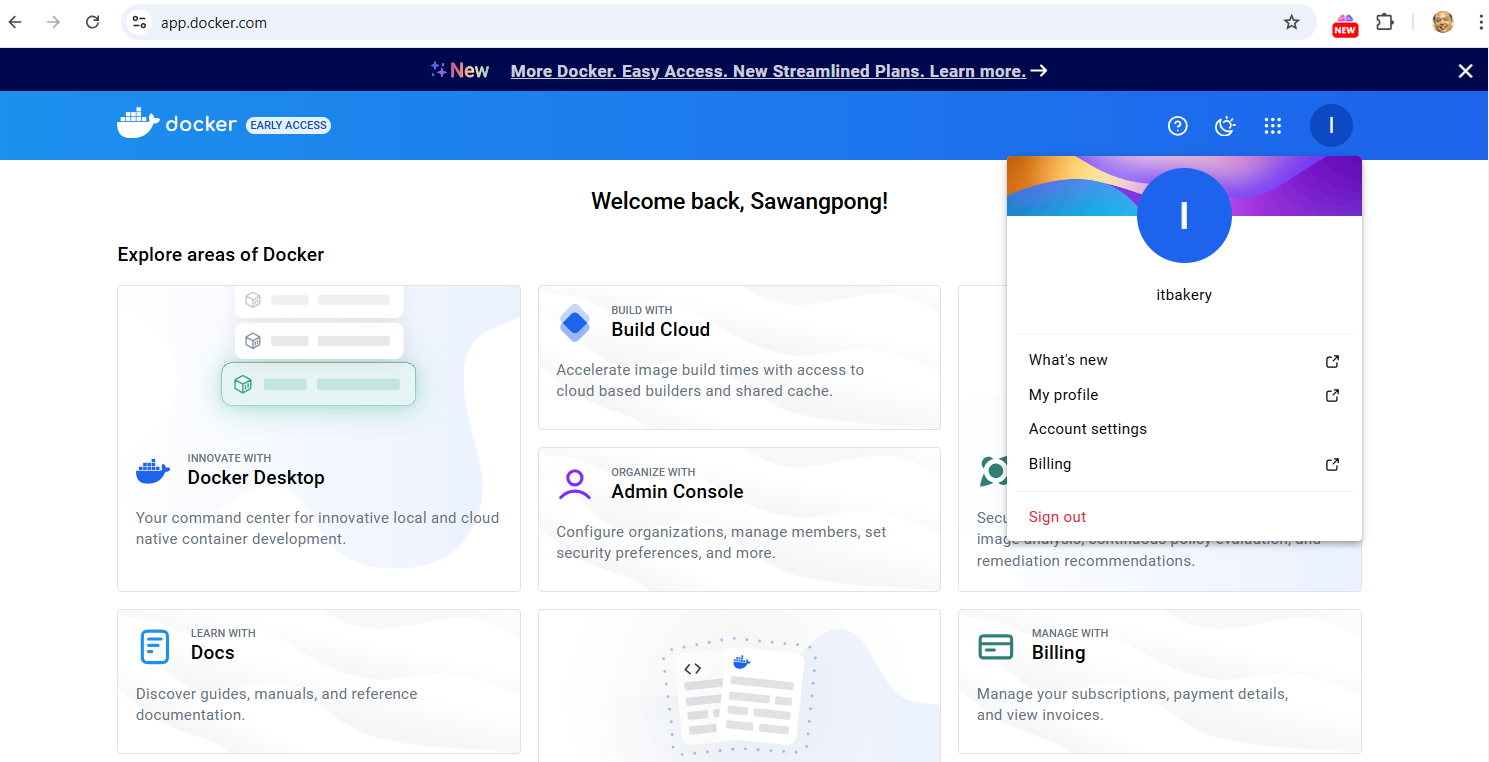

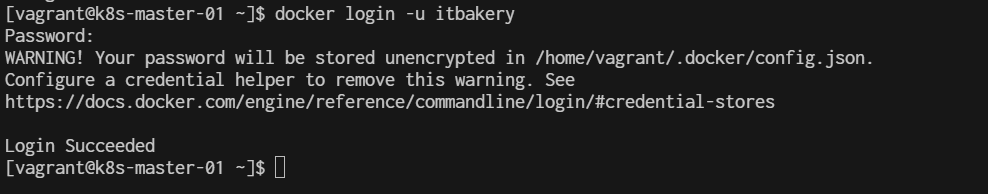

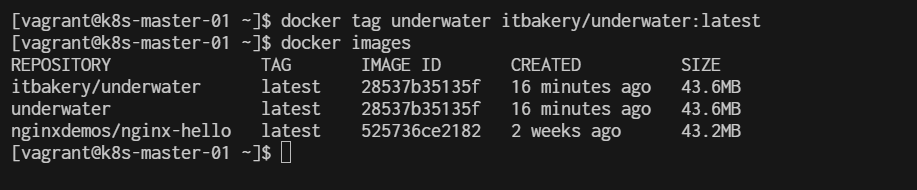

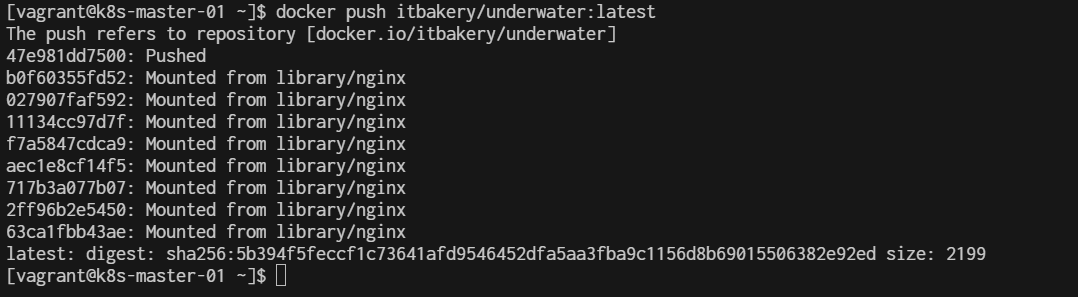

Docker push/pull

Docker Lab push pull

Install Docker: Make sure Docker is installed on your machine. You can follow the official installation guide for your operating system.

Create a Simple Dockerfile: Create a directory for your Docker project and add a Dockerfile. This file will define the image you want to build.

mkdir my-docker-lab

cd my-docker-lab

Create a file named Dockerfile in this directory with the following content:


cat <<EOF | tee Dockerfile

# Use an official Python runtime as a parent image

FROM python

# Set the working directory in the container

WORKDIR /usr/src/app

# Copy the current directory contents into the container at /usr/src/app

COPY . .

# Install any needed packages specified in requirements.txt

RUN pip install --no-cache-dir -r requirements.txt

# Document that the app listens on port 80 (EXPOSE does not publish it; use -p at run time)

EXPOSE 80

# Define environment variable

ENV NAME=World

# Run app.py when the container launches

CMD ["python", "app.py"]

EOF

Create a Requirements File: Create a requirements.txt file to specify Python dependencies:

cat <<EOF | tee requirements.txt

Flask

EOF

Create a Simple Python Application: Create a file named app.py:

cat <<EOF | tee app.py

from flask import Flask

app = Flask(__name__)


@app.route('/')
def hello_world():
    return 'Hello, World!'


if __name__ == '__main__':
    app.run(host='0.0.0.0', port=80)

EOF

Build Your Docker Image: From the directory containing your Dockerfile, build the Docker image:

$ docker build -t my-flask-app .

Run Your Docker Container: After building the image, run a container based on this image:

$ docker run -p 4000:80 my-flask-app

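Your Flask app should now be accessible at http://localhost:4000. The container runs in the foreground, so from a second terminal check that port 4000 is listening on the host and request the page: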

ss -tulpn | grep 4000

curl http://localhost:4000
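In -p 4000:80 the first number is the port on the host and the second is the port inside the container, which is why ss shows port 4000 on the host while Flask listens on port 80 in the container. The hypothetical parse_publish function below splits such a value into its parts, following the general form [host_ip:][host_port:]container_port[/protocol]; IPv6 addresses and port ranges are left out of this sketch. The same form is used in the ports: entries of docker-compose.yml.

```python
def parse_publish(spec: str) -> dict:
    """Split a -p / ports: value such as "4000:80" into its parts."""
    ports, _, protocol = spec.partition("/")
    parts = ports.split(":")
    if len(parts) == 1:
        host_ip, host_port, container_port = "", "", parts[0]
    elif len(parts) == 2:
        host_ip, (host_port, container_port) = "", parts
    elif len(parts) == 3:
        host_ip, host_port, container_port = parts
    else:
        raise ValueError(f"unsupported port spec: {spec!r}")
    return {
        "host_ip": host_ip or "0.0.0.0",  # empty means all host interfaces (IPv4 shown)
        "host_port": int(host_port) if host_port else None,  # None: Docker picks a free port
        "container_port": int(container_port),
        "protocol": protocol or "tcp",
    }


print(parse_publish("4000:80"))
```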

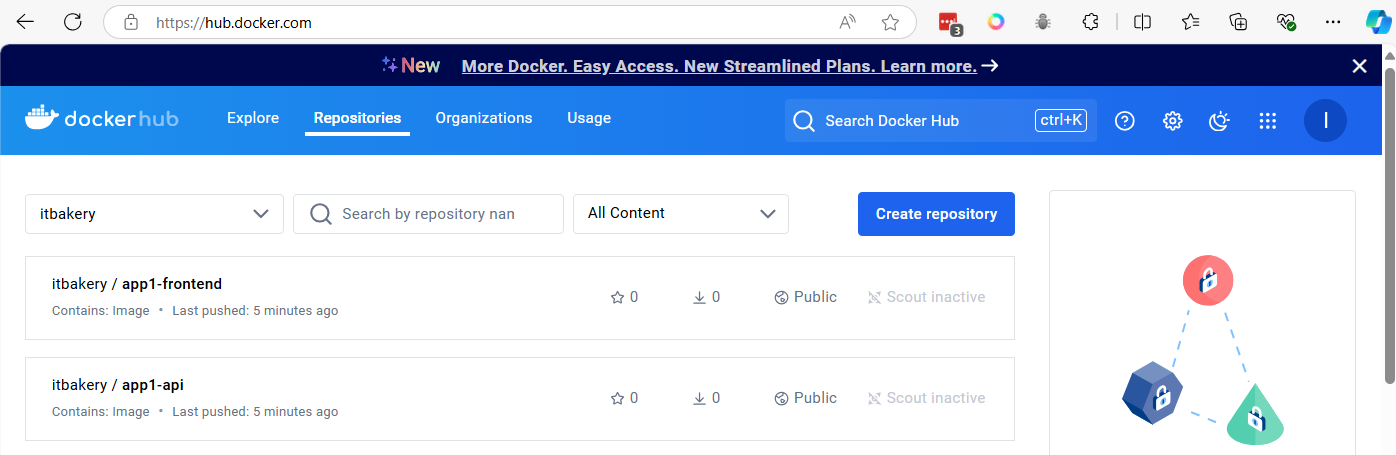

Docker Push and Pull Commands

To push images to and pull them from a Docker registry (e.g., Docker Hub), follow these steps:

Tag Your Image: Before pushing an image to Docker Hub, tag it with your repository name. If your Docker Hub username is yourusername and your image name is my-flask-app, tag it like this:

$ docker tag my-flask-app yourusername/my-flask-app:latest

Log In to Docker Hub: Log in with your Docker Hub credentials:

$ docker login

Push the Image to Docker Hub: Push the tagged image:

$ docker push yourusername/my-flask-app:latest

Pull the Image from Docker Hub: To download the image on another machine, use:

$ docker pull yourusername/my-flask-app:latest

Run the Pulled Image: After pulling the image, run it like any other Docker image:

$ docker run -p 4000:80 yourusername/my-flask-app:latest

This starts a container from the pulled image, making your Flask app accessible at http://localhost:4000 on that machine. If the image is not present locally, docker run pulls it first.
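The tag step is needed because Docker expands short image names. Based on the documented defaults (registry docker.io, official images under the library/ namespace, tag latest), the hypothetical normalize_image function below sketches that expansion; a first path component counts as a registry host only if it contains a '.' or ':' or is localhost. Digest references (@sha256:...) and other edge cases are not handled.

```python
def normalize_image(ref: str) -> str:
    """Expand a short image reference to registry/namespace/name:tag."""
    if "@" in ref:
        raise ValueError("digest references are out of scope for this sketch")
    name, tag = ref, "latest"
    # A tag is a ':' after the last '/', so "localhost:5000/app" has no tag.
    if ref.rfind(":") > ref.rfind("/"):
        colon = ref.rfind(":")
        name, tag = ref[:colon], ref[colon + 1:]
    first, _, rest = name.partition("/")
    if rest and ("." in first or ":" in first or first == "localhost"):
        registry, path = first, rest
    else:
        registry, path = "docker.io", name
    if registry == "docker.io" and "/" not in path:
        path = "library/" + path  # official images live under library/
    return f"{registry}/{path}:{tag}"


print(normalize_image("my-flask-app"))
```

For example, the untagged name my-flask-app expands to docker.io/library/my-flask-app:latest, a namespace an ordinary account cannot push to, while yourusername/my-flask-app points at your own repository.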

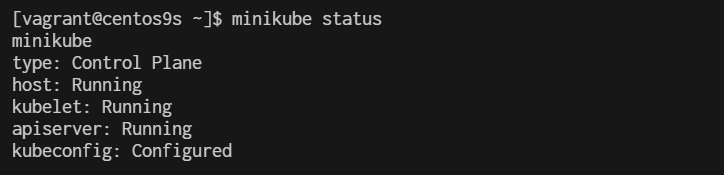

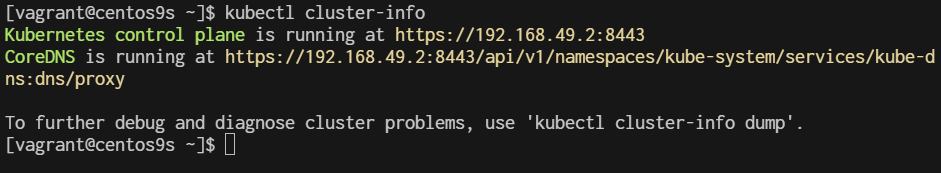

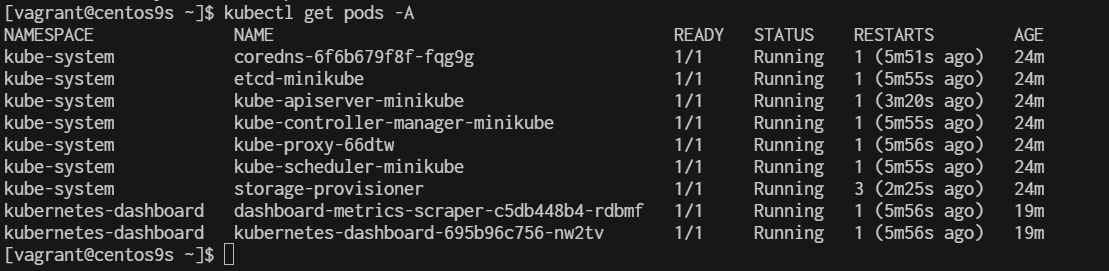

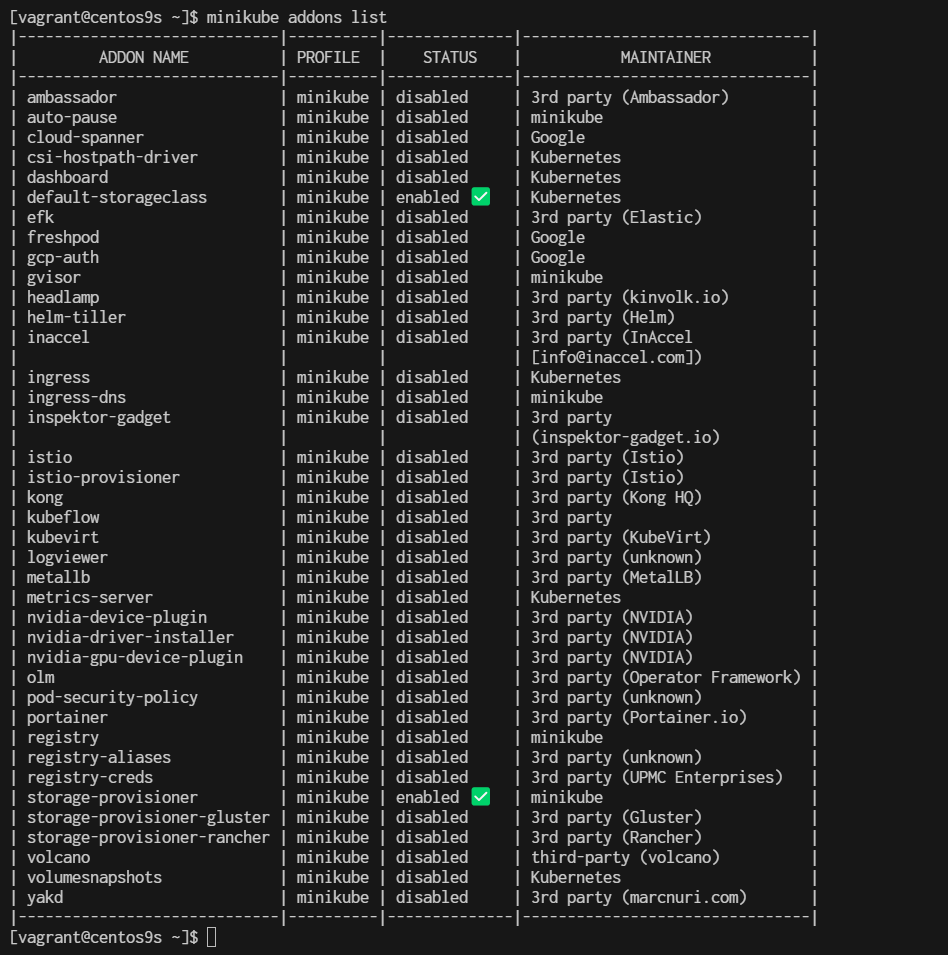

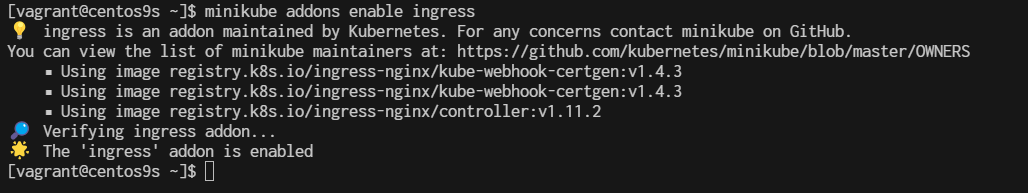

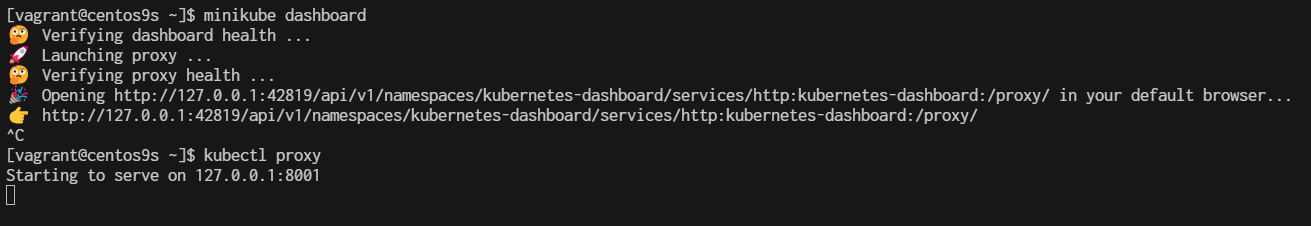

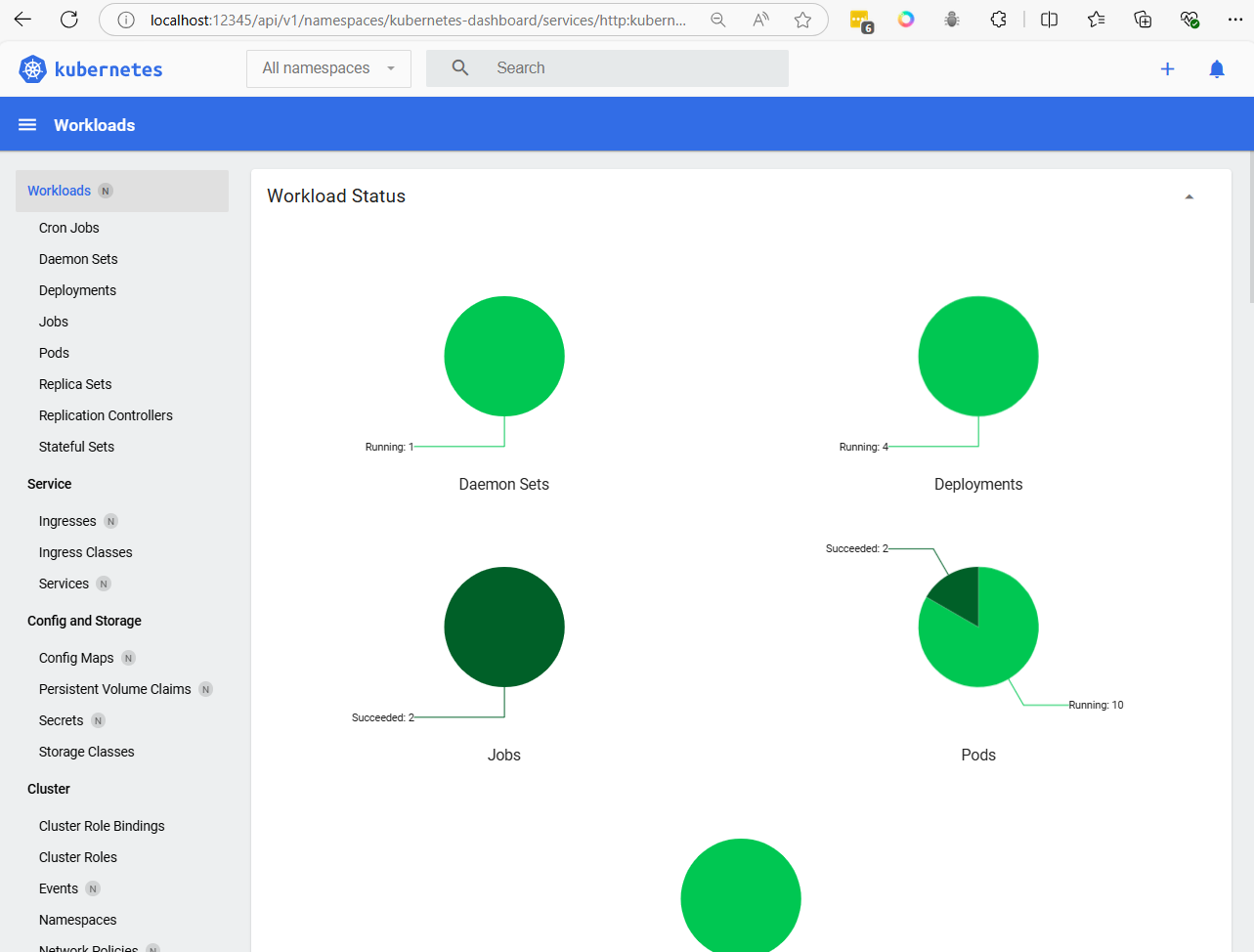

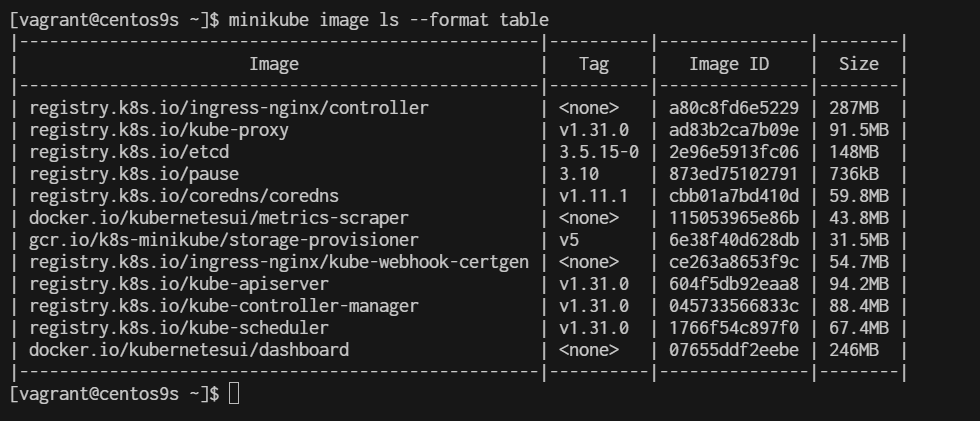

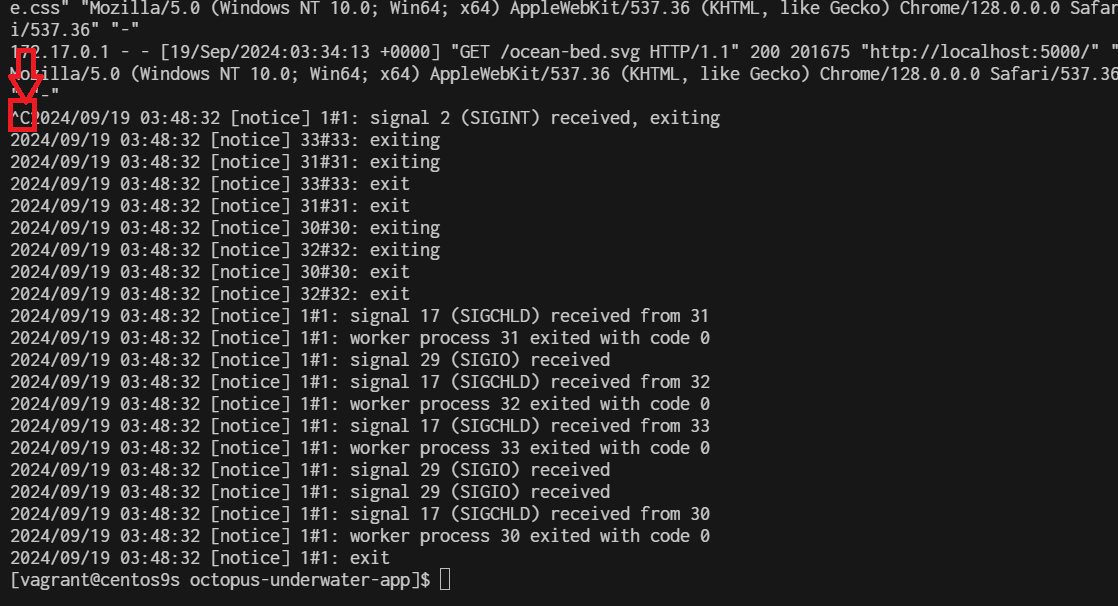

Install Minikube

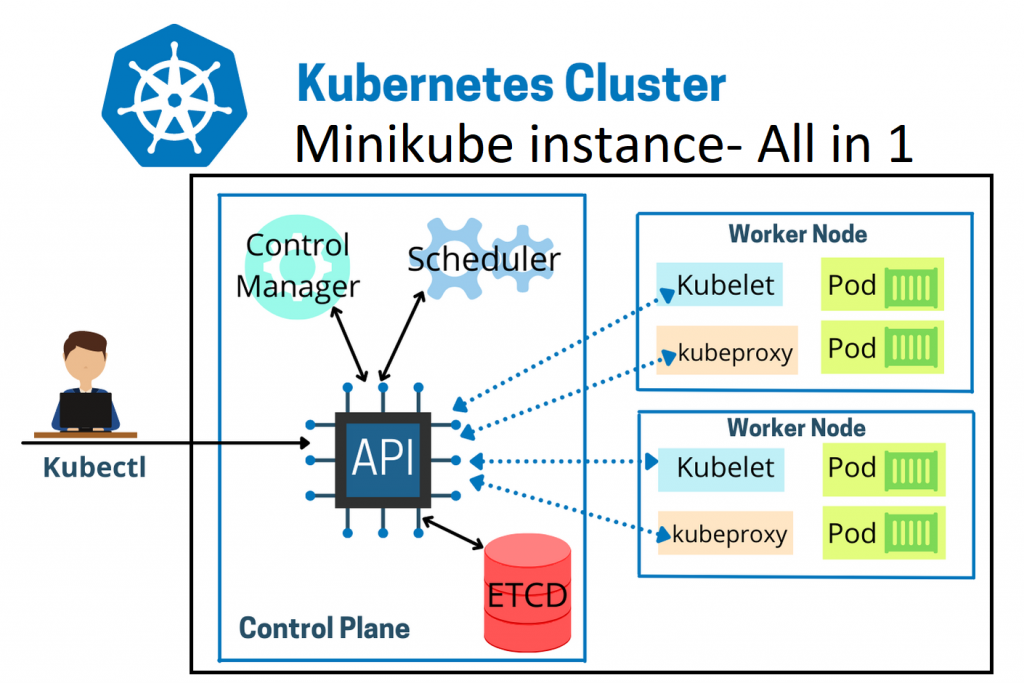

What is Minikube? Minikube is a tool that enables developers to run a single-node Kubernetes cluster locally on their machine. It simplifies Kubernetes development and testing by providing an easy-to-use environment that closely mimics a production Kubernetes cluster. With Minikube, developers can quickly prototype, deploy, and debug applications, making it an essential tool for building and testing Kubernetes-based solutions. Its benefits include fast setup, isolation, reproducibility, and the ability to develop and test Kubernetes applications without the need for a full-scale cluster.

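Minikube can run the cluster with different drivers. Most users of the 'none' driver, which runs the Kubernetes components directly on the host, should consider the newer Docker driver instead, as it is significantly easier to configure and does not require root access. The 'none' driver is recommended for advanced users only.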

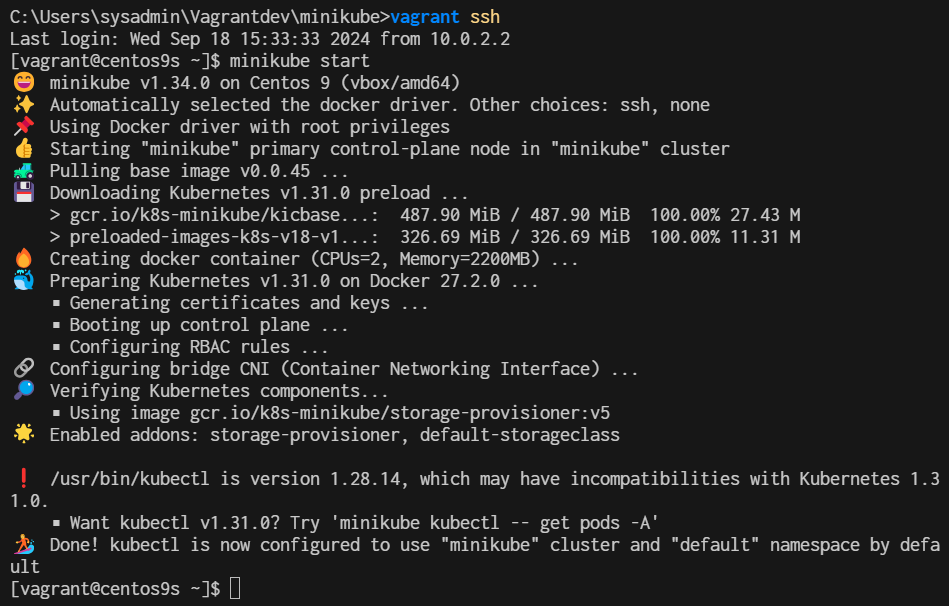

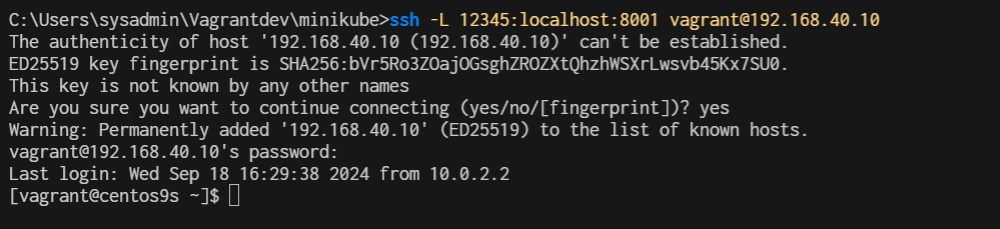

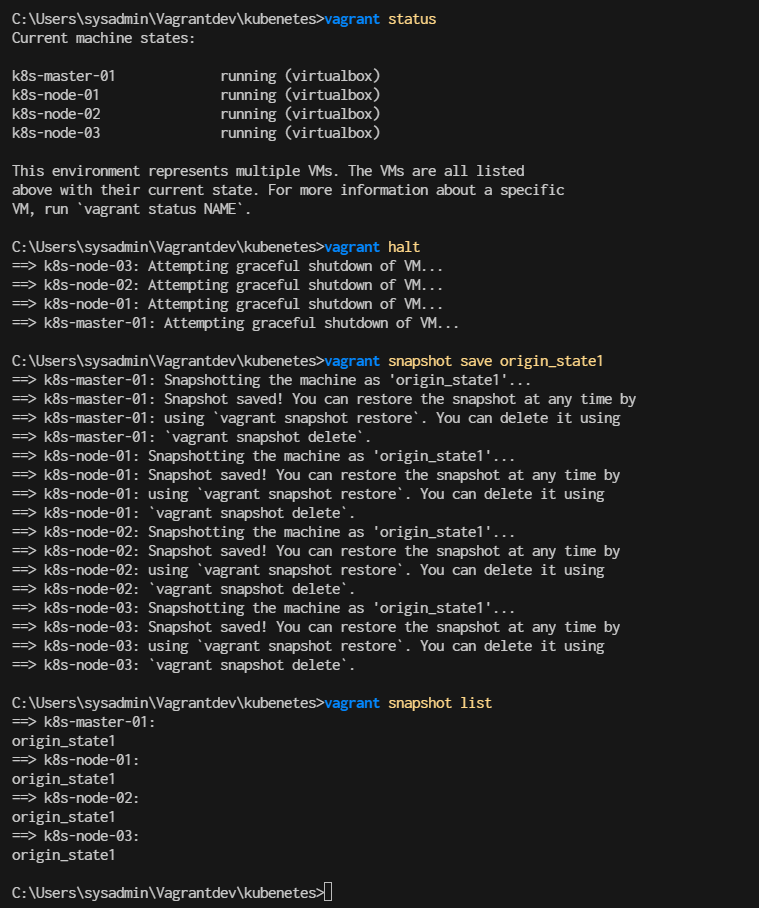

On Windows, create a folder for the project VM and create a Vagrantfile in it (code opens the file in VS Code):

mkdir minikube

cd minikube

code Vagrantfile

# -*- mode: ruby -*-
# vi: set ft=ruby :

Vagrant.configure("2") do |config|
  config.vm.box = "generic/centos9s"
  config.vm.network "private_network", ip: "192.168.40.10"
  # config.vm.network "public_network"
  # config.vm.synced_folder "../data", "/vagrant_data"
  #config.vm.synced_folder ".", "/vagrant"

  config.vm.provider "virtualbox" do |vb|
    vb.memory = "4096"
    vb.cpus = 4
  end

  config.vm.provision "shell", inline: <<-SHELL
    echo "\nStep-1 Enable ssh password authentication"
    echo $(whoami)
    sed -i 's/PasswordAuthentication no/PasswordAuthentication yes/g' /etc/ssh/sshd_config
    systemctl restart sshd.service

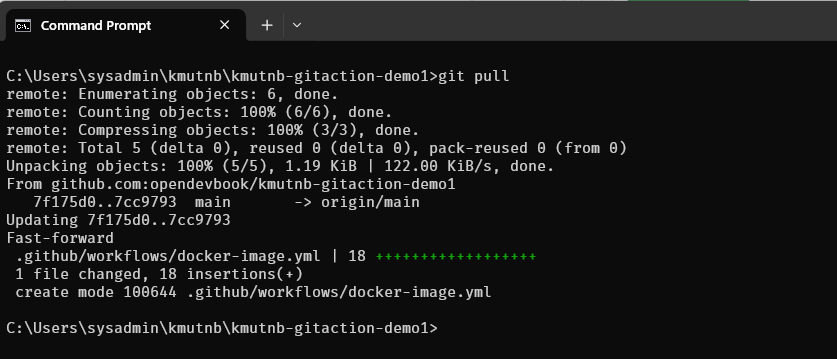

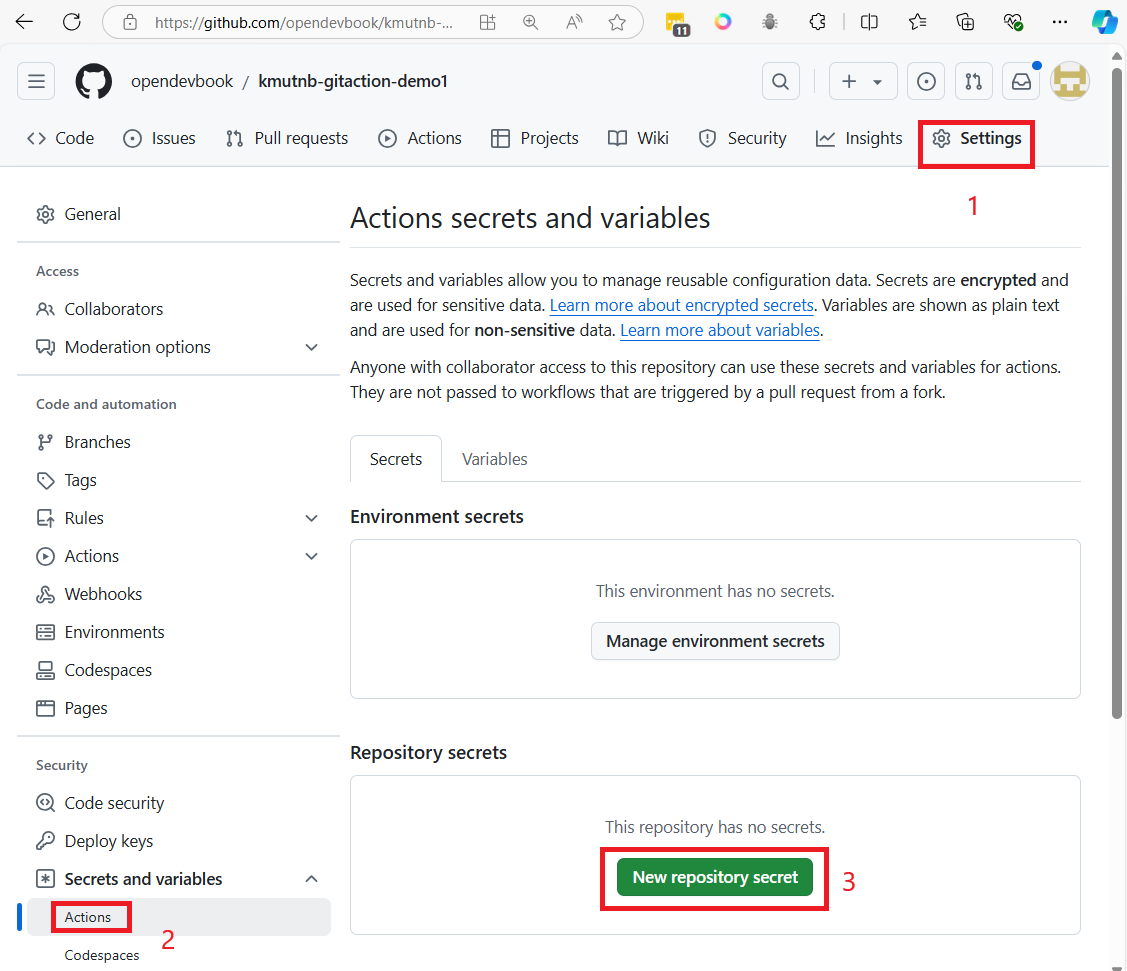

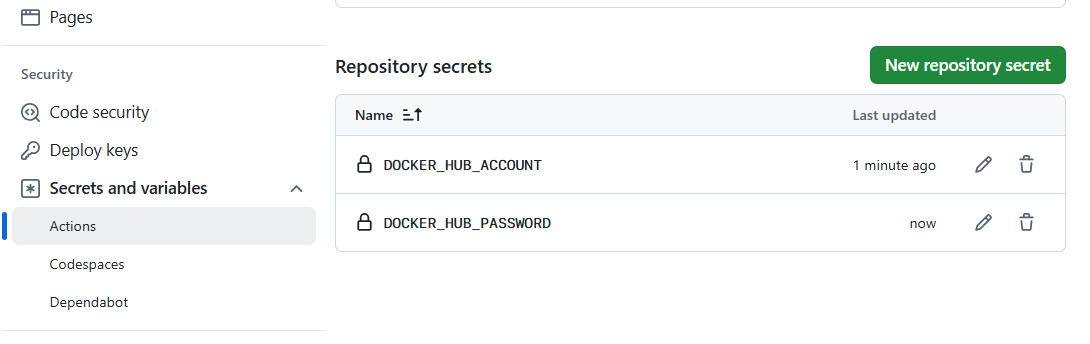

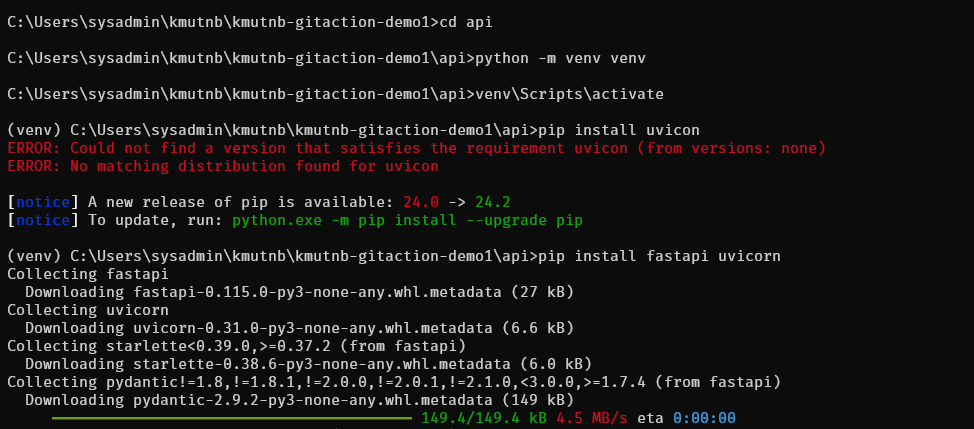

#add docker repository